Deep learning model architectures are aligned with the structure of the data, e.g. RNN or LSTM for sequential data like text and CNN for grid data like images. The structures in these cases are specialized kinds of graph structure: text is a linear graph and an image is a grid graph. A Graph Neural Network (GNN) is very powerful because it can process data with any arbitrary graph structure. Data with a generic graph structure abounds in real life, e.g. social networks and paper citation graphs. In this post, we will find out how a GNN can be used to discover subject matter experts (SMEs) from email communication data.

We will use a type of GNN called Graph Convolution Network (GCN) for the solution. A no-code GCN implementation based on PyTorch is available in my GitHub repo whkapai. It’s also available as part of a Python package in TestPyPI.

Graph Neural Network

A GNN operates on data associated with nodes and edges. Like any deep learning model, it learns representations for the nodes and edges. Predictions can be made at various levels of granularity, as follows:

- Whole-graph classification, e.g. the type of molecule represented as a graph

- Node-level classification, e.g. whether a person is an SME, as in our problem

- Edge-level prediction, e.g. predicting some property of a social network connection

- Link prediction, e.g. whether two users are likely to connect on social media

- Node clustering, e.g. categorizing social media users

- Discovering influencers, e.g. finding influential users on social media

There are various kinds of GNN. Here is a list of GNN types supported by PyTorch Geometric, the graph learning library built on PyTorch. They are characterized by whether they support:

- Edge weight

- Edge attributes

- Message passing

- Bipartite graph

GNN types differ in how the embeddings of neighboring nodes are aggregated for a given node. All GNNs go through message passing cycles, in which the embeddings of neighboring nodes are used to define the embedding of a given node. The number of cycles defines how far away the nodes influencing a given node can be: after k cycles, a node's embedding depends on nodes up to k hops away. A message passing cycle corresponds to a layer in a conventional neural network. After all the message passing cycles we get an embedding for each node. For node classification, a feed forward network takes the node embeddings as input and predicts the node label.
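The reach of message passing can be checked with a short plain-Python sketch: after k cycles, a node's embedding can only depend on nodes at most k hops away, so the set of influencing nodes is exactly what a breadth-first search limited to k hops finds. The function name `receptive_field` and the toy path graph are illustrative only.

```python
from collections import deque


def receptive_field(edges, node, cycles):
    """Return the nodes whose initial embeddings can influence `node`
    after `cycles` message passing cycles, i.e. all nodes within
    `cycles` hops, including the node itself."""
    # Build an adjacency list; edges are treated as undirected
    neighbors = {}
    for u, v in edges:
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)

    # Breadth-first search that stops expanding at `cycles` hops
    reached = {node: 0}
    queue = deque([node])
    while queue:
        current = queue.popleft()
        if reached[current] == cycles:
            continue
        for nxt in neighbors.get(current, ()):
            if nxt not in reached:
                reached[nxt] = reached[current] + 1
                queue.append(nxt)
    return set(reached)


# A path graph 0 - 1 - 2 - 3 - 4
path = [(0, 1), (1, 2), (2, 3), (3, 4)]
print(sorted(receptive_field(path, 0, 1)))  # [0, 1]
print(sorted(receptive_field(path, 0, 2)))  # [0, 1, 2]
```

With the two cycles used later in this post, node 0 is influenced by nodes 1 and 2 but not by nodes 3 and 4.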

A GCN for node classification works as follows. All the nodes go through several message passing cycles, followed by a classification head. Please refer to the link for details.

- The node input is the initial node embedding.

- In each message passing cycle, the embedding of a node from the previous cycle is passed through a weight matrix (A). The embeddings of all the neighboring nodes from the previous cycle are averaged and passed through another weight matrix (B).

- The averaging can be weighted by edge weights. For the averaging, each neighboring node's embedding from the previous cycle is divided by the square root of the product of the degrees of the current node and the neighboring node. These quantities are added up over all the neighboring nodes.

- The two components, from A and B, are added and passed through an activation function. The result is the embedding of the node in the current cycle. This process is repeated for all nodes in each cycle.

- After the final cycle we have all the node embeddings. They are fed into a feed forward classification head with as many output nodes as there are classes.
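Written as an equation, the cycle described above computes the new embedding of node $i$ with neighbors $\mathcal{N}(i)$, degree $d_i$, optional edge weight $e_{ji}$ (1 for an unweighted graph) and activation $\sigma$ as:

$$h_i^{(l+1)} = \sigma\left(A\,h_i^{(l)} + B \sum_{j \in \mathcal{N}(i)} \frac{e_{ji}}{\sqrt{d_i\,d_j}}\, h_j^{(l)}\right)$$

Below is a minimal NumPy sketch of one such cycle, assuming a dense adjacency matrix and ReLU as the activation; the name `gcn_cycle` is hypothetical. Note that the widely used formulation of Kipf and Welling (for example `GCNConv` in PyTorch Geometric) instead adds a self-loop to every node and uses a single shared weight matrix, so this sketch follows the two-matrix description above rather than that layer.

```python
import numpy as np


def gcn_cycle(h, adj, A, B):
    """One message passing cycle as described in the steps above.

    h    : (n, d_in) node embeddings from the previous cycle, one row per node
    adj  : (n, n) adjacency matrix; entry (i, j) is the edge weight
           (1 for an unweighted edge, 0 for no edge)
    A, B : (d_in, d_out) weight matrices for the node itself and its neighbors
    """
    # Degree = number of neighbors of each node
    deg = (adj != 0).sum(axis=1).astype(float)
    # Symmetric normalization e_ij / sqrt(d_i * d_j); isolated nodes get 0
    scale = np.sqrt(np.outer(deg, deg))
    norm_adj = np.divide(adj, scale, out=np.zeros_like(adj, dtype=float), where=scale > 0)
    # Component from A: the node's own previous embedding
    self_part = h @ A
    # Component from B: normalized sum of the neighbors' previous embeddings
    neighbor_part = (norm_adj @ h) @ B
    # Add both components and apply the ReLU activation
    return np.maximum(self_part + neighbor_part, 0.0)


# Path graph 0 - 1 - 2 with one-hot input embeddings
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
h0 = np.eye(3)
rng = np.random.default_rng(0)
A, B = rng.normal(size=(3, 16)), rng.normal(size=(3, 16))
h1 = gcn_cycle(h0, adj, A, B)
print(h1.shape)  # (3, 16)
```

Calling `gcn_cycle` again on `h1` with new weight matrices gives the second cycle; in a trained model, A and B are learned rather than random.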

Email Data

The goal for an organization is to discover experts with deep knowledge about the business. Some of them are publicly known as experts. The organization wants to discover people who have expert knowledge about the business but are not widely known as experts.

The email users in an organization are modeled as the nodes of the graph. If there was ever any email communication between two users, there is a corresponding edge between them. The node features are as follows:

- Node ID (not a feature)

- Total number of messages (sent and received)

- Average sent message size

- Average received message size

- Bag-of-words binary vector of size 50

- Node label: expert (0), junior (1), others (2)
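To show how the graph and the first three node features could be derived from raw email logs, here is a sketch that assumes each email is a hypothetical `(sender, recipients, size)` tuple. The bag-of-words vector and the label are left out, and counting a message to several recipients as one received message per recipient is an assumption; `email_graph` is an illustrative name.

```python
from collections import defaultdict


def email_graph(emails):
    """Build node features and edges from hypothetical email records.

    emails: list of (sender, [recipients], size) tuples.
    Returns (users, features, edges) where features[i] is
    [total messages, avg sent size, avg received size] for users[i]
    and edges lists every connected pair in both directions.
    """
    sent = defaultdict(list)      # sizes of the messages each user sent
    received = defaultdict(list)  # sizes of the messages each user received
    pairs = set()                 # undirected pairs of users who communicated
    for sender, recipients, size in emails:
        sent[sender].append(size)
        for r in recipients:
            received[r].append(size)
            if r != sender:
                pairs.add(frozenset((sender, r)))

    users = sorted(set(sent) | set(received))
    index = {u: i for i, u in enumerate(users)}

    def avg(sizes):
        return sum(sizes) / len(sizes) if sizes else 0.0

    features = [[len(sent[u]) + len(received[u]), avg(sent[u]), avg(received[u])]
                for u in users]

    # Each edge is listed twice, once per direction, as in the CSV file
    edges = []
    for pair in pairs:
        a, b = sorted(index[u] for u in pair)
        edges += [(a, b), (b, a)]
    edges.sort()
    return users, features, edges


emails = [("alice", ["bob"], 100), ("bob", ["alice", "carol"], 300), ("carol", ["alice"], 200)]
users, features, edges = email_graph(emails)
print(users)     # ['alice', 'bob', 'carol']
print(features)  # [[3, 100.0, 250.0], [2, 300.0, 100.0], [2, 200.0, 300.0]]
print(edges)     # [(0, 1), (0, 2), (1, 0), (1, 2), (2, 0), (2, 1)]
```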

The bag-of-words vector needs some clarification. It characterizes the nature of the message content; the intuition is that it reflects the kind of role someone plays within the organization. A vocabulary of 50 words related to the nature of the business is selected. From all the text in a user's sent and received messages, a frequency distribution over those 50 words is created. The frequency distribution is then converted to a binary vector using a threshold: if the frequency of a word is above the threshold the value is 1, otherwise it is 0. Instead of a bag-of-words vector, you could also use an embedding from a neural language model like BERT.
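A minimal sketch of the bag-of-words step, assuming a simple regex tokenizer and a threshold on the relative frequency of each vocabulary word among all vocabulary hits (the post does not say whether the threshold applies to raw counts or normalized frequencies). `binary_bow` and the toy three-word vocabulary are hypothetical; vocabulary words are expected in lowercase.

```python
import re
from collections import Counter


def binary_bow(texts, vocabulary, threshold):
    """Binary bag-of-words vector for one user.

    texts      : all sent and received message texts of the user
    vocabulary : the selected business-related words (50 in the post)
    threshold  : a word gets 1 if its relative frequency is above this value
    """
    counts = Counter()
    for text in texts:
        # Simple lowercase word tokenization
        counts.update(re.findall(r"[a-z]+", text.lower()))
    total = sum(counts[w] for w in vocabulary)
    # Frequency distribution over the vocabulary words only
    freqs = [counts[w] / total if total else 0.0 for w in vocabulary]
    return [1 if f > threshold else 0 for f in freqs]


vocab = ["invoice", "contract", "lunch"]
texts = ["Invoice and contract attached", "Please sign the contract", "contract invoice"]
print(binary_bow(texts, vocab, threshold=0.3))  # [1, 1, 0]
```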

The nodes corresponding to people known to be experts, juniors or in the other category are used as the labeled nodes for training. After training, the model predicts the labels of the remaining nodes.

The data is generated synthetically in CSV format. It has three parts: the nodes, the mask that selects the node records used for training, validation and testing, and the edges. The first set of records holds the node features, one row per node. Then there is a row that specifies the nodes to be used for training; it contains a list of index ranges of the node records. The last rows are for the edges. Each row has two node indexes, corresponding to the two nodes of an edge. Edges are bidirectional, so the same edge is defined twice with the node order flipped. Here are samples of the three types of data:

EXI280AMC7,519,207,762,1,1,1,0,1,1,0,0,0,0,0,0,0,0,0,1,1,0,0,1,0,1,0,1,1,1,0,1,1,1,1,0,0,1,0,1,0,1,1,1,0,0,0,1,1,1,0,0,1,0,0
EV3FY27ZKM,492,213,734,1,1,1,0,1,1,0,0,0,0,1,0,1,1,0,1,1,0,0,1,0,1,0,1,1,1,0,1,1,1,0,0,0,1,0,1,0,1,1,1,0,0,0,1,1,1,0,0,1,0,0
E971JN3CPK,511,212,855,1,1,1,0,1,1,0,0,0,0,1,0,1,1,0,1,1,0,0,1,0,1,0,1,1,1,1,1,1,1,0,1,1,1,0,1,0,0,1,1,0,0,0,1,1,1,0,0,1,0,0
E3DMP49N8U,487,194,691,1,1,0,0,1,1,1,0,0,0,1,0,0,1,0,1,1,0,0,1,0,1,0,1,1,1,1,1,1,1,0,1,1,1,1,1,0,0,1,1,0,0,0,1,1,1,0,0,1,0,0
EM46QZ5893,476,162,753,1,1,0,0,1,1,1,0,0,0,1,0,0,1,0,1,1,0,0,1,0,1,0,1,1,1,1,1,1,0,0,1,1,1,1,1,0,0,0,1,0,0,0,0,1,1,0,0,0,0,0
J9U9IQEXGK,382,599,299,1,1,0,0,1,1,0,0,0,0,1,0,0,1,0,1,1,0,0,1,1,1,0,1,1,1,1,1,1,0,0,0,1,1,1,1,0,0,1,1,0,0,0,0,1,1,0,0,0,0,1
J5HE3V60AI,431,679,329,0,1,0,0,1,1,0,0,0,1,1,0,0,1,0,1,1,0,0,1,1,1,0,1,1,1,1,1,0,0,1,0,1,1,1,1,0,0,1,1,0,0,0,0,1,1,0,0,0,0,1
J9553BHUMS,359,654,337,0,1,0,0,1,1,0,0,0,1,1,0,0,1,0,1,1,0,0,1,1,1,0,1,0,1,1,1,0,0,1,0,1,1,1,1,0,1,1,1,0,1,0,0,1,1,0,0,0,1,1
J4AHHO4V5R,353,737,313,0,0,0,0,1,1,0,0,0,1,0,0,0,1,0,1,1,0,1,1,1,1,0,1,0,1,1,1,0,0,0,0,1,1,1,1,0,1,1,1,0,1,0,0,1,1,0,0,0,1,1
JQ540WEB6V,368,677,323,0,0,0,0,1,1,0,0,0,1,0,0,0,1,1,1,1,0,1,1,1,1,0,1,0,1,1,1,0,1,0,0,1,1,1,1,1,1,1,1,0,1,0,1,1,1,0,0,0,1,1
...................
mask 985 0:63 71:117 123:167 172:345 440:613
..................
172,22
22,172
172,4
4,172
172,34
34,172
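A minimal plain-Python sketch of reading such a file, assuming comma-separated node and edge rows, a mask row that starts with `mask`, and half-open `start:end` ranges. Because the sample does not show whether the mask row uses commas or spaces, the sketch splits on either; tokens in the mask row that are not ranges (such as the 985 in the sample) are skipped, since their meaning is not described here. `parse_gcn_csv` is a hypothetical name, not part of the framework.

```python
import re


def parse_gcn_csv(lines):
    """Split the CSV lines into node IDs, features, labels, mask indexes and edges."""
    ids, features, labels, mask, edges = [], [], [], [], []
    for line in lines:
        # Split on commas or whitespace and drop empty tokens
        fields = [f for f in re.split(r"[,\s]+", line.strip()) if f]
        if not fields:
            continue
        if fields[0] == "mask":
            # Expand each start:end range into node indexes (end excluded)
            for token in fields[1:]:
                if ":" in token:
                    start, end = (int(x) for x in token.split(":"))
                    mask.extend(range(start, end))
        elif len(fields) == 2:
            # Edge row: two node indexes (each edge appears in both directions)
            edges.append((int(fields[0]), int(fields[1])))
        else:
            # Node row: ID, numeric features, then the label in the last column
            ids.append(fields[0])
            features.append([float(x) for x in fields[1:-1]])
            labels.append(int(fields[-1]))
    return ids, features, labels, mask, edges


# Node rows shortened to 3 bag-of-words bits for brevity
sample = [
    "EXI280AMC7,519,207,762,1,0,1,0",
    "J9U9IQEXGK,382,599,299,0,1,1,1",
    "mask 985 0:1",
    "0,1",
    "1,0",
]
ids, features, labels, mask, edges = parse_gcn_csv(sample)
print(labels, mask, edges)  # [0, 1] [0] [(0, 1), (1, 0)]
```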

GCN Training and Prediction with No Code Framework

The GCN model is characterized as follows.

- Two convolution (message passing) cycles, each with an output size of 16, so the nodes influencing a given node are at most 2 hops away

- The activation for the convolutions is ReLU, and dropout is applied to them

- A feed forward network as the classifier. Its input size is 16 and its output size is 3, corresponding to the 3 categories of email users. The activation is softmax

- The optimizer is Adam
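As a rough plain-PyTorch sketch of the architecture described above (not the framework's actual code), the block below stacks two message passing cycles of size 16 with ReLU and dropout, adds a linear classification head with 3 outputs, and trains it with Adam. The dropout rate of 0.5, the learning rate of 0.01, the dense adjacency matrix, the toy path graph and all class and function names are assumptions for illustration. The input size of 53 assumes the three numeric features plus the 50 bag-of-words bits, with the node ID and the label left out.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def normalize_adjacency(adj):
    """Scale each edge (i, j) by 1 / sqrt(d_i * d_j); isolated nodes get 0."""
    deg = (adj != 0).sum(dim=1).float()
    inv_sqrt = torch.where(deg > 0, deg.rsqrt(), torch.zeros_like(deg))
    return inv_sqrt[:, None] * adj * inv_sqrt[None, :]


class ConvCycle(nn.Module):
    """One cycle: A applied to the node's own embedding plus B applied to the normalized neighbor sum."""

    def __init__(self, d_in, d_out):
        super().__init__()
        self.A = nn.Linear(d_in, d_out)
        self.B = nn.Linear(d_in, d_out, bias=False)

    def forward(self, h, norm_adj):
        return self.A(h) + self.B(norm_adj @ h)


class EmailGCN(nn.Module):
    def __init__(self, d_in, hidden=16, n_classes=3, dropout=0.5):
        super().__init__()
        self.conv1 = ConvCycle(d_in, hidden)
        self.conv2 = ConvCycle(hidden, hidden)
        self.head = nn.Linear(hidden, n_classes)
        self.dropout = dropout

    def forward(self, x, norm_adj):
        h = F.dropout(F.relu(self.conv1(x, norm_adj)), self.dropout, self.training)
        h = F.dropout(F.relu(self.conv2(h, norm_adj)), self.dropout, self.training)
        # Raw class scores; softmax is applied in the loss or at prediction time
        return self.head(h)


# Toy graph: a path of 6 nodes with random features
torch.manual_seed(0)
n = 6
x = torch.rand(n, 53)
labels = torch.tensor([0, 0, 1, 1, 2, 2])
adj = torch.zeros(n, n)
for i, j in [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]:
    adj[i, j] = adj[j, i] = 1.0
norm_adj = normalize_adjacency(adj)
train_mask = torch.tensor([True, False, True, False, True, False])

model = EmailGCN(d_in=53)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
for epoch in range(100):
    model.train()
    optimizer.zero_grad()
    # The loss only uses the labeled training nodes
    loss = F.cross_entropy(model(x, norm_adj)[train_mask], labels[train_mask])
    loss.backward()
    optimizer.step()

model.eval()
with torch.no_grad():
    probs = F.softmax(model(x, norm_adj), dim=1)
pred = probs.argmax(dim=1)
```

Softmax appears only when computing the final probabilities: during training, `F.cross_entropy` takes the raw scores and applies log-softmax internally, which is numerically more stable than putting a softmax inside the model.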

I’m using a no-code framework for GCN from my GitHub repo. It’s also available as a Python package called Torvik in TestPyPI. A Python class wraps native PyTorch, and it’s driven by an elaborate configuration file in which you provide all the details of your model. To use it, all you have to do is call the various methods for training, testing and predicting. Here is the driver code for our use case, which can also be used to learn how to use the no-code framework.

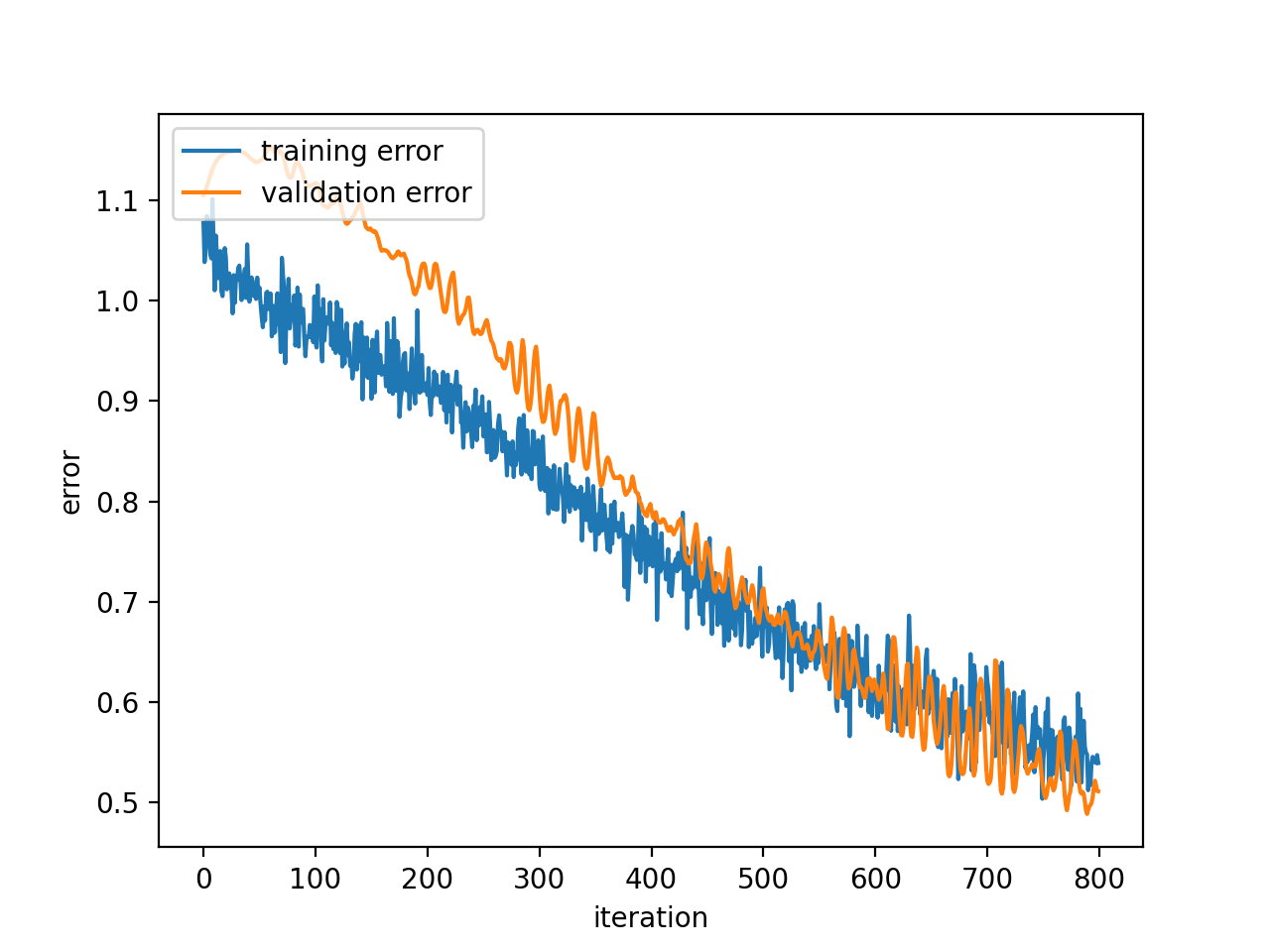

Here is the trailing end of the training output, followed by the training and validation error plots. The number of epochs used is 1000. All the details can be found in the config file.

epoch 950 loss 0.946304 val error 0.592342
epoch 960 loss 0.932747 val error 0.648826
epoch 970 loss 0.937377 val error 0.570499
epoch 980 loss 0.946073 val error 0.570633
epoch 990 loss 0.937508 val error 0.603429
..saving model checkpoint
model saved
test perf score 0.840

Training and validation error

Unlike other neural networks, where the test data and the data used for prediction are separate from the training data, a GNN works on a single data set: the graph. In that data set you have to mark which subset of nodes is used for training, validation, testing, prediction etc. Here is some output for node prediction.

['J1LN04XGED', '426', '714', '308', '0', '1', '1', '1', '1', '1'] prediction 1
['J7VE0I1TCZ', '369', '707', '280', '0', '1', '1', '0', '1', '1'] prediction 1
['JX40Z4OVZ5', '384', '671', '334', '0', '1', '0', '0', '1', '1'] prediction 1
['JKAUK937M7', '376', '698', '291', '0', '0', '0', '0', '1', '1'] prediction 0
['J12L87P1C7', '384', '681', '305', '0', '0', '0', '0', '1', '1'] prediction 0
['JQR8DKD2P8', '387', '648', '320', '0', '0', '0', '0', '0', '1'] prediction 0

It shows partial node data followed by the prediction, which is 0, 1 or 2 for the different categories of people in the organization. Please refer to the tutorial document for detailed steps to execute this use case.
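Because the whole graph is one data set, the split into training, test and prediction nodes can be expressed as boolean masks over the node indexes, with loss or accuracy computed only over the masked nodes. A small NumPy sketch, assuming half-open `start:end` ranges like those in the mask row (whether the framework treats the end index as inclusive is not stated); the helper names and the toy labels are hypothetical.

```python
import numpy as np


def ranges_to_mask(n_nodes, ranges):
    """Boolean mask over all nodes from half-open (start, end) index ranges."""
    mask = np.zeros(n_nodes, dtype=bool)
    for start, end in ranges:
        mask[start:end] = True
    return mask


def masked_accuracy(pred, labels, mask):
    """Fraction of correctly predicted labels among the masked nodes only."""
    return float((pred[mask] == labels[mask]).mean())


# Predictions come from one pass over the whole graph; masks pick the subsets
labels = np.array([0, 0, 1, 1, 2, 2, 0, 1])  # labels of nodes 6 and 7 would be unknown in practice
pred = np.array([0, 1, 1, 1, 2, 0, 0, 1])
train_mask = ranges_to_mask(8, [(0, 4)])
test_mask = ranges_to_mask(8, [(4, 6)])
predict_mask = ~(train_mask | test_mask)  # the unlabeled nodes to predict

print(masked_accuracy(pred, labels, train_mask))  # 0.75
print(masked_accuracy(pred, labels, test_mask))   # 0.5
print(pred[predict_mask])                         # [0 1]
```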

Summing Up

A GNN is a powerful neural model that operates on data with arbitrary graph structure. In this post we have gone through a solution based on a Graph Convolution Network (GCN). Since the nodes in graph data have relationships with other nodes, the graph is treated as static in this setup: you cannot train the GNN and then make a prediction for some new node. This takes some reorientation of our mind. You have to retrain the whole GNN model if there is any change to the graph structure, e.g. an employee leaving or joining the organization in our use case.