RAG Applications Development

Improving the Reliability of LLM Outputs

We build RAG applications to make LLM outputs reliable, verifiable, and usable inside enterprise environments. These applications connect language models with trusted enterprise knowledge and ensure responses are grounded in real data. Our focus is on accuracy, trust, and adoption at scale.

Business Problems We Solve with RAG Applications

At ThirdEye Data, we do not treat RAG as a technical add-on to LLMs. We use it to solve practical business problems that arise when enterprises try to use LLMs on real data.

Below are the key challenges we address through RAG-based applications.

Enterprises quickly lose trust in LLM tools when answers sound confident but are incorrect. This creates decision risk and limits adoption beyond experimentation.

We design RAG applications that ground every response in approved enterprise sources. Answers are traceable, constrained, and aligned with business context.

Critical information is spread across PDFs, SharePoint, ticketing systems, data platforms, and internal tools. Employees struggle to find the right information when they need it.

Our RAG applications connect these sources and allow users to access trusted knowledge through a single interface without manual searching.

Risk, legal, and compliance teams often block LLM rollouts due to lack of transparency and control. Enterprises cannot justify decisions based on unverified AI outputs.

We build RAG systems with controlled data access, clear source attribution, and audit-ready outputs that align with enterprise governance requirements.

Many internal LLM tools fail because employees do not trust the answers or find them useful in daily work. Tools remain disconnected from workflows.

Our RAG applications are designed around real user needs and embedded into existing systems, improving relevance and adoption.

Enterprises rely heavily on a small number of experts to answer recurring questions and validate information. This creates bottlenecks and slows execution.

RAG applications help scale expert knowledge across teams while keeping humans involved where judgment is required.

Enterprises Trust Our Generative AI Development Expertise

Core Functions Where Our RAG Applications Add Business Value

Our RAG applications are built to support business functions where accurate information, context, and speed directly impact decisions and execution. We focus on functions that rely heavily on institutional knowledge and structured reasoning.

Operations and Process Execution

Our RAG applications provide grounded, role-specific answers within operational workflows, helping teams act faster while staying aligned with approved processes.

Customer Support and Service Enablement

We build RAG systems that deliver verified responses sourced from approved documentation, enabling faster resolution and consistent service quality.

Risk, Compliance, and Internal Controls

Our RAG applications ensure that responses are backed by controlled sources and can be reviewed, traced, and audited when required.

Sales, Pre-Sales, and Account Teams

We enable sales teams with RAG-based assistants that provide reliable, up-to-date information without exposing sensitive or unapproved content.

Knowledge Management and Internal Enablement

Our RAG applications turn static repositories into active knowledge systems that evolve with the business and remain usable at scale.

Leadership and Decision Support

We design RAG applications that surface verified information to support informed decision-making while maintaining transparency.

Our RAG Applications Development Services

Our RAG services focus on building production-ready systems that deliver accurate, grounded responses from enterprise knowledge. Each service is designed around a recurring business need we have addressed across industries.

Enterprise Knowledge Assistants

We build knowledge assistants that provide reliable answers from approved enterprise sources such as policies, manuals, and internal documentation. These assistants reduce manual searching and ensure information is consistent across teams.

Document-Grounded AI Applications

Enterprises manage large volumes of unstructured documents that are difficult to use in daily operations. We develop RAG applications that extract, retrieve, and reference relevant content from documents in a controlled manner.

Compliance and Policy Q&A Systems

We build RAG systems designed specifically for compliance-sensitive environments. These applications ensure that answers are grounded in approved policies and regulations, reducing risk and supporting audit requirements.

Support Enablement and Agent Assist Systems

Support and operations teams require fast access to accurate information during live interactions. Our RAG solutions provide real-time, context-aware answers without relying on memory or guesswork.

Research and Analysis Assistants

We develop RAG applications that support analysts and domain experts by retrieving verified information from trusted sources and summarizing it for faster analysis and reporting.

Internal Enablement and Onboarding Systems

New hires and cross-functional teams often struggle to navigate complex internal knowledge. Our RAG applications help scale institutional knowledge and reduce onboarding time.

Our Generative AI Project References

For a leading marketing company, developed a multi-agent system that transforms how loyalty programs are managed and experienced.

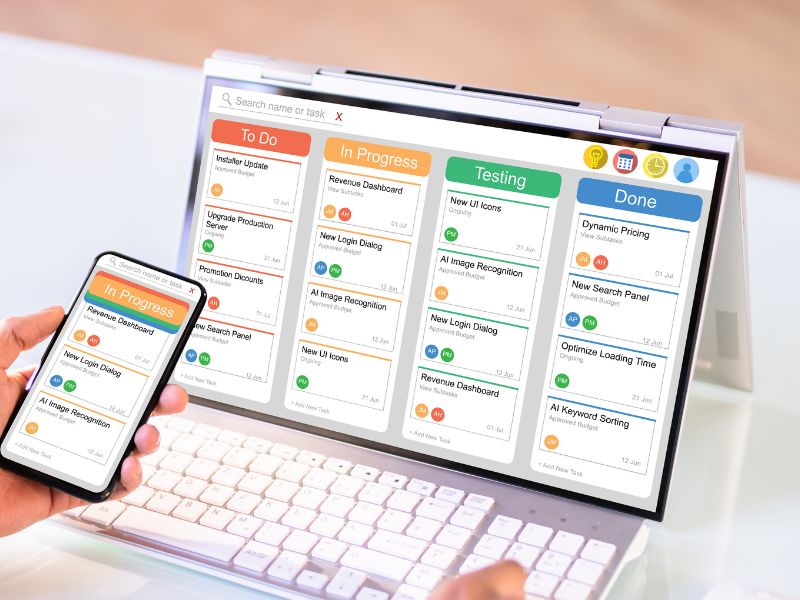

Developed an LLM-based Help Center Assistant for a leading project management solutions provider. The assistant offers an intuitive, conversational interface that allows users to easily search and retrieve information from product documentation, PDFs, and the company’s website.

Consult with Our Experts for RAG Applications Development

Consult with our developers to start building your RAG-based applications.

Answering FAQs on RAG Applications

Standard LLMs generate responses based only on training data and prompts. RAG retrieves relevant content from enterprise-approved sources at query time and grounds responses in it, making outputs more reliable and defensible.

RAG significantly reduces hallucinations but does not eliminate risk by default. Proper source selection, retrieval design, and response constraints are required for dependable results.
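A minimal sketch of what source selection, retrieval, and response constraints can look like together. Everything here is an illustrative assumption rather than a description of a production system: the source IDs and texts are hypothetical, the bag-of-words cosine similarity stands in for a real embedding model, and the `min_score` threshold of 0.2 is arbitrary.

```python
import math
import re
from collections import Counter

# Hypothetical approved sources; IDs and text are illustrative only.
APPROVED_SOURCES = {
    "hr-policy-004": "Employees accrue 1.5 days of paid leave per month of service.",
    "it-policy-011": "Laptops must be encrypted and locked when left unattended.",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, min_score=0.2):
    """Return (source_id, text, score) for sources at or above min_score, best first."""
    q = Counter(tokenize(question))
    scored = [
        (sid, text, cosine(q, Counter(tokenize(text))))
        for sid, text in APPROVED_SOURCES.items()
    ]
    hits = [h for h in scored if h[2] >= min_score]
    return sorted(hits, key=lambda h: h[2], reverse=True)

def build_prompt(question):
    """Build a grounded prompt, or return None so the caller can decline to answer."""
    hits = retrieve(question)
    if not hits:
        return None  # Response constraint: no approved source, no answer.
    context = "\n".join(f"[{sid}] {text}" for sid, text, _ in hits)
    return (
        "Answer using only the sources below and cite the source ID.\n"
        f"{context}\n\nQuestion: {question}"
    )
```

Returning `None` when nothing clears the threshold lets the application say it has no approved answer instead of passing an ungrounded question to the model.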

RAG can work with documents, knowledge bases, structured data, and internal systems. The key is identifying authoritative sources rather than indexing everything.

We work with business and risk teams to define approved sources. Content relevance, ownership, update frequency, and sensitivity are considered before inclusion.

Yes, RAG applications can stay current as source content changes. We design ingestion and update pipelines that keep content current without disrupting users or requiring manual rework.
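One common way to keep an index current without full rebuilds is to compare content hashes and re-process only what changed. The sketch below assumes a hypothetical setup in which a hash is stored per document at ingestion time; `plan_sync` and its argument names are illustrative, not part of any specific product.

```python
import hashlib

def content_hash(text):
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def plan_sync(indexed_hashes, current_docs):
    """Decide which documents need re-indexing or removal.

    indexed_hashes: dict of doc_id -> hash stored at the last ingestion
    current_docs:   dict of doc_id -> current document text
    Returns (to_upsert, to_delete) as sorted lists of doc IDs.
    """
    # New documents have no stored hash; changed ones have a different hash.
    to_upsert = sorted(
        doc_id
        for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    )
    # Documents that disappeared from the source should leave the index too.
    to_delete = sorted(
        doc_id for doc_id in indexed_hashes if doc_id not in current_docs
    )
    return to_upsert, to_delete
```

Unchanged documents are skipped entirely, so a scheduled sync touches only new, edited, or removed content.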

RAG applications can be designed with strict access controls, role-based retrieval, and private deployments to meet enterprise security standards.
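Role-based retrieval can be sketched as a filter applied before any search runs, so restricted content never reaches the model. The `Document` structure, role names, and `visible_documents` helper below are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # roles permitted to see this document

def visible_documents(documents, user_roles):
    """Keep only documents that share at least one role with the user."""
    roles = frozenset(user_roles)
    return [d for d in documents if d.allowed_roles & roles]

# Hypothetical corpus for illustration.
CORPUS = [
    Document("pricing-internal", "Discount floors by region.", frozenset({"sales", "finance"})),
    Document("support-kb-12", "How to reset a password.", frozenset({"support", "sales"})),
    Document("legal-memo-7", "Pending litigation summary.", frozenset({"legal"})),
]

support_view = visible_documents(CORPUS, ["support"])
```

Filtering ahead of retrieval, rather than redacting the answer afterwards, keeps unapproved text out of the prompt altogether.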

No, RAG does not require clean, structured data. It is particularly effective with unstructured and semi-structured data. We design retrieval pipelines to handle real-world data conditions.

We implement testing against real scenarios, source attribution, and feedback mechanisms. Validation focuses on business accuracy, not just model scores.
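As one illustration of scenario-based testing, the sketch below measures how often the expected source appears among the top results for a set of real questions. The test cases, source IDs, and the `keyword_retrieve` stand-in are all hypothetical; a real evaluation would call the deployed retriever and would also review answer quality with business owners.

```python
def retrieval_hit_rate(test_cases, retrieve, k=3):
    """Fraction of test cases whose expected source is in the top-k results.

    test_cases: list of (question, expected_source_id) pairs
    retrieve:   callable returning a ranked list of source IDs for a question
    """
    if not test_cases:
        return 0.0
    hits = sum(
        1 for question, expected in test_cases if expected in retrieve(question)[:k]
    )
    return hits / len(test_cases)

def keyword_retrieve(question):
    """Hypothetical stand-in retriever based on simple keyword lookup."""
    index = {
        "leave": ["hr-policy-004"],
        "laptop": ["it-policy-011", "hr-policy-004"],
    }
    results = []
    for keyword, source_ids in index.items():
        if keyword in question.lower():
            results.extend(source_ids)
    return results

# Hypothetical scenarios; the third one has no matching source in the index.
SCENARIOS = [
    ("How much leave do I get?", "hr-policy-004"),
    ("Is my laptop encrypted?", "it-policy-011"),
    ("What is the travel policy?", "travel-policy-002"),
]

rate = retrieval_hit_rate(SCENARIOS, keyword_retrieve)
```

A miss such as the travel policy question is useful feedback: it points to a gap in the approved sources rather than a model problem.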

Fine-tuning changes model behavior. RAG controls what information the model uses at runtime. RAG is often safer and more flexible for enterprises.