LLM Applications Development

Leveraging LLMs to Automate Knowledge-heavy Workflows

We build enterprise-grade LLM applications that support stakeholders in making faster decisions and automating knowledge-heavy work. These applications are designed to operate inside business systems, use trusted data, and deliver consistent outcomes. Our focus is not on experimentation. It is on production-ready applications that enterprises can rely on.

Business Challenges or Problems We Address with LLM Application Development

At ThirdEye Data, we do not build LLM applications as standalone chat interfaces or experimental tools. We build them to address specific business problems where knowledge, decisions, and communication break down at scale. Our focus is on situations where information exists but cannot be used effectively.

Below are the key business challenges we address through custom LLM applications.

Enterprises store critical knowledge across documents, emails, systems, and platforms. Employees spend significant time searching, validating, and reconciling information before taking action. This slows execution and increases dependency on individuals.

We build LLM applications that connect to enterprise knowledge sources and present context-aware answers within existing workflows. This allows teams to access reliable information when and where decisions are made.

Many early LLM deployments fail because outputs vary across users and situations. When answers cannot be trusted, teams fall back to manual verification. Adoption drops quickly.

We design LLM applications with grounding, validation rules, and clear boundaries. This ensures consistency and builds confidence in day-to-day usage.
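As a rough illustration of what a validation rule can look like, the minimal sketch below accepts a model answer only when it cites documents that were actually retrieved and stays within a length limit. The `[doc:<id>]` citation format, the `validate_answer` function, and the `max_chars` limit are assumptions made for this example, not a description of a specific production implementation.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class ValidationResult:
    accepted: bool
    reason: str


# Assumed citation format: the model is instructed to tag claims with [doc:<id>].
CITATION_PATTERN = re.compile(r"\[doc:([A-Za-z0-9_-]+)\]")


def validate_answer(answer: str, retrieved_ids: set[str], max_chars: int = 1200) -> ValidationResult:
    """Accept an answer only if it cites retrieved sources and stays within bounds."""
    cited = set(CITATION_PATTERN.findall(answer))
    if not cited:
        # Grounding rule: an answer without any citation is rejected.
        return ValidationResult(False, "no citations")
    unknown = cited - retrieved_ids
    if unknown:
        # Boundary rule: the answer may only reference documents that were retrieved.
        return ValidationResult(False, f"cites unknown sources: {sorted(unknown)}")
    if len(answer) > max_chars:
        return ValidationResult(False, "answer too long")
    return ValidationResult(True, "ok")


if __name__ == "__main__":
    result = validate_answer("Refunds take 5 days [doc:policy-12].", {"policy-12", "faq-3"})
    print(result)
```

Rejected answers would typically be regenerated or routed to a human reviewer rather than shown to the user.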

Teams across operations, finance, sales, and support repeatedly perform similar analysis, summarization, and documentation tasks. This work consumes skilled time without adding proportional value.

Our LLM applications alleviate this burden by automating repetitive cognitive tasks, allowing humans to focus on judgment and accountability.

Many LLM tools operate outside enterprise systems. Employees must switch tools, copy information, and manually trigger actions. This breaks flow and limits impact.

We build LLM applications that operate inside existing systems and workflows, enabling insights to directly support execution.

Enterprises hesitate to scale LLM usage due to unclear data access, audit gaps, and regulatory risk. Without governance, initiatives stall.

We design LLM applications with controlled data access, logging, and review mechanisms aligned with enterprise security and compliance requirements.

Core Business Functions Where We Add Value with LLM Applications

Our LLM applications are designed to support business functions where decisions depend on timely access to accurate information. We focus on functions where manual effort, delays, and inconsistency create operational and financial impact.

Operations and Process Management

Our LLM applications provide operational teams with instant, context-aware insights drawn from enterprise data. This reduces response time, improves consistency, and supports faster decision-making.

Finance, Risk, and Compliance

We develop LLM-based applications that assist with document analysis, policy interpretation, and internal reporting. These applications help teams work faster while maintaining accuracy and control over sensitive data.

Sales and Revenue Enablement

Our LLM applications help sales teams prepare, respond, and follow up with confidence. They surface relevant information, summarize account context, and support consistent communication.

Customer Support and Service Operations

We build LLM applications that assist support teams with accurate, grounded responses. These systems reduce handling time while preserving quality and escalation control.

Human Resources and Internal Services

Our LLM applications enable employees to access accurate information quickly, allowing HR teams to focus on higher-value tasks. These applications automate the entire workflow.

Engineering, Product, and Research Teams

We develop LLM applications that help teams retrieve, summarize, and reason over technical information, supporting faster execution without replacing expert judgment.

Our LLM Application Development Services

We deliver LLM applications as focused solutions aligned to how enterprises actually work. Each service offering addresses a distinct type of business need and operating model.

Enterprise Knowledge & Information Access Applications

We develop LLM applications that allow teams to access enterprise knowledge through natural language queries. These applications connect to internal documents, data platforms, and systems while respecting access controls and data boundaries.
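One way to picture "respecting access controls" is to filter documents by the user's permissions before anything is ranked or passed to the model. The sketch below is a simplified illustration under stated assumptions: `Document`, `allowed_groups`, and `retrieve` are hypothetical names, and plain keyword overlap stands in for the vector or hybrid search a real system would use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # groups permitted to read this document


def retrieve(query: str, documents: list[Document], user_groups: set[str], top_k: int = 3) -> list[Document]:
    """Return up to top_k documents the user may read, ranked by keyword overlap."""
    terms = set(query.lower().split())
    scored = []
    for doc in documents:
        # Enforce access control before ranking so restricted text never reaches the prompt.
        if not (doc.allowed_groups & user_groups):
            continue
        overlap = len(terms & set(doc.text.lower().split()))
        if overlap > 0:
            scored.append((overlap, doc))
    # Highest overlap first; the sort is stable, so ties keep their input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


if __name__ == "__main__":
    docs = [
        Document("hr-1", "parental leave policy details", frozenset({"hr"})),
        Document("fin-1", "quarterly leave accrual policy", frozenset({"finance"})),
    ]
    print([d.doc_id for d in retrieve("leave policy", docs, {"hr"})])
```

Filtering before ranking, rather than after generation, keeps restricted content out of the model's context entirely.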

Decision Support and Internal Copilot Applications

We develop LLM-powered copilots that assist managers and teams with analysis, summaries, and recommendations. These applications are designed to support decisions, not automate authority.

Workflow-Integrated LLM Applications

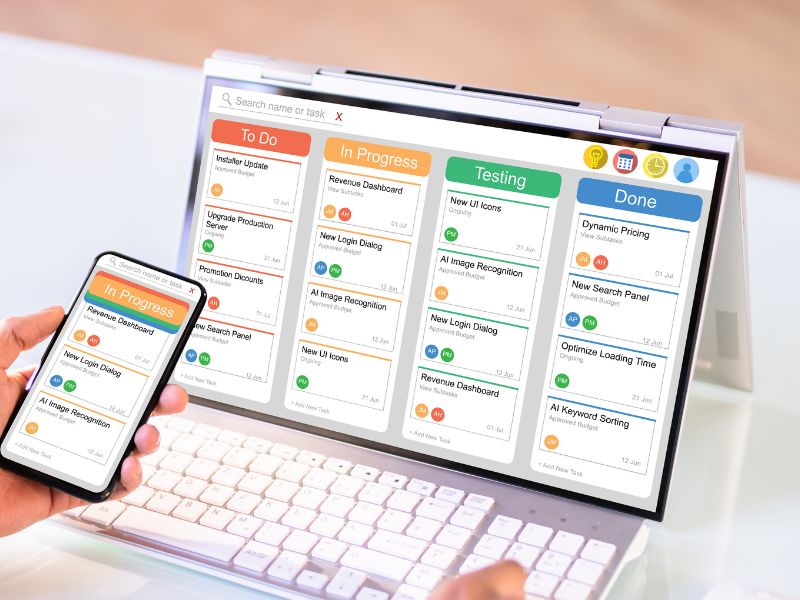

Our LLM applications are embedded directly into business processes. They trigger actions, generate structured outputs, and support execution across systems such as CRM, ERP, and service platforms.

Customer-Facing LLM Applications

We design controlled customer-facing LLM applications for support, onboarding, and self-service. These systems use approved knowledge sources and escalate to humans when needed.
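The escalation behavior described above can be sketched as a simple rule: answer only from an approved knowledge base, and hand off to a human when the topic is sensitive or no approved answer matches well enough. Everything named here (`ESCALATION_TOPICS`, `respond`, the `min_overlap` threshold, and keyword matching in place of an LLM call) is an assumption for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed list of topics that always go to a human agent.
ESCALATION_TOPICS = {"refund", "legal", "complaint", "cancel"}
HANDOFF_MESSAGE = "Connecting you with a support agent."


@dataclass
class Reply:
    text: str
    escalated: bool


def respond(question: str, approved_answers: dict[str, str], min_overlap: int = 2) -> Reply:
    """Answer from approved content only; escalate when unsure or when the topic is sensitive."""
    words = set(question.lower().replace("?", "").split())
    if words & ESCALATION_TOPICS:
        return Reply(HANDOFF_MESSAGE, True)
    best_key, best_score = None, 0
    for key in approved_answers:
        score = len(words & set(key.lower().split()))
        if score > best_score:
            best_key, best_score = key, score
    # No sufficiently close approved answer: hand off instead of guessing.
    if best_key is None or best_score < min_overlap:
        return Reply(HANDOFF_MESSAGE, True)
    return Reply(approved_answers[best_key], False)


if __name__ == "__main__":
    kb = {"reset password": "Use the 'Forgot password' link on the sign-in page."}
    print(respond("How do I reset my password?", kb))
```

In a real deployment the matching step would be replaced by retrieval plus a grounded model response, but the escalation rule itself can stay this explicit.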

Document Intelligence and Content Processing Applications

We build LLM applications that read, classify, summarize, and extract insights from large volumes of documents. These systems support finance, legal, compliance, and operations teams.

Domain-Specific LLM Applications

For complex industries and functions, we develop domain-aware LLM applications trained on specialized data and terminology. These applications align closely with business language, rules, and constraints.

Our LLM-based Project References

Implemented a Natural Language Processing (NLP) solution for a leading project management software provider. The solution addressed the customer’s need for an advanced software help system.

Implemented a cognitive computing application leveraging IBM Watson services on the IBM Bluemix cloud infrastructure to identify subject matter experts for a BFSI customer.

Developed and deployed an intelligent billing assistant chatbot powered by LLMs and a robust data intelligence platform to streamline billing query resolutions.

Developed an LLM-based Help Center Assistant for a leading project management solutions provider. The assistant offers an intuitive, conversational interface that allows users to easily search and retrieve information from product documentation, PDFs, and the company’s website.

Our Solution Approach to LLM Applications Development

Our approach to building LLM applications is not model-driven or tool-led. It is use-case driven. We design each application based on how information is consumed, how decisions are made, and how work actually flows inside the enterprise.

Before selecting any technology, we focus on outcomes, risk, and long-term usability.

Open Source LLM Application Approach

We recommend an open source approach when enterprises require maximum control and flexibility. This is common for internal applications, regulated environments, and domain-specific use cases.

Open source models allow customization of reasoning logic, tighter control over data flow, and deployment in private environments. This approach is suitable when accuracy, ownership, and adaptability matter more than rapid rollout.

Commercial Platform-Based LLM Applications Approach

We use commercial platforms when enterprises prioritize speed, standardization, and managed operations. These platforms provide strong governance, security controls, and monitoring capabilities.

Our deepest experience is with Microsoft’s LLM ecosystem, including Azure OpenAI, Azure AI Studio, and Microsoft Fabric. These platforms work well for enterprises already invested in Microsoft technologies.

Hybrid LLM Applications Approach

Most enterprise LLM applications benefit from a hybrid approach. Core reasoning or domain logic can be built using open source models, while commercial platforms handle deployment, security, and lifecycle management.

This approach balances flexibility with enterprise-grade stability and allows systems to evolve without locking into a single path.

Consult with Our LLM Developers

Consult with our LLM developers to select the optimal approach and maximize ROI for your enterprise.

Our Technology Stack for LLM Applications Development

Open Source Technologies

LLM Application Frameworks

- LangChain

- LangGraph

- LlamaIndex

Open Source Language Models

- LLaMA

- Mistral / Mixtral

Retrieval and Knowledge Systems

- FAISS

- Chroma / Weaviate

Evaluation and Reliability

- MLflow

- Custom Evaluation Pipelines

Commercial Tools & Platforms

Microsoft Ecosystem (Primary Partner Stack)

- Azure OpenAI

- Azure AI Studio

- Azure Machine Learning

- Microsoft Fabric

- Power Platform (Power Automate, Copilot Studio)

Cloud-Native LLM Platforms (When Required)

- Amazon Bedrock

- Google Vertex AI

Enterprise Data Platforms

- Snowflake

- Databricks

API-Based Commercial LLM Providers

- OpenAI (API-based usage)

- Anthropic Claude

- Cohere

Enterprise Search & Knowledge Platforms

- Azure Cognitive Search

- Elasticsearch

Answering Frequently Asked Questions

LLM applications are systems that use large language models to support business decisions and automate knowledge-heavy tasks. They are integrated into enterprise data, systems, and workflows. They are not standalone chat tools.

Copilots and chat tools often operate in isolation. LLM applications are designed to work within business processes, follow defined rules, and produce outputs that lead to actions. The difference is operational impact.

Problems involving large volumes of documents, complex information access, repetitive analysis, and decision support are ideal. LLMs work best where human expertise needs to scale without losing consistency.

Yes. We design LLM applications to connect with existing data platforms, document repositories, and systems. Data access is governed and aligned with enterprise policies.

We use grounding techniques, validation rules, restricted scopes, and review mechanisms. LLMs are not given unlimited freedom. Trust is built through design, not prompts.

Yes, when built correctly. We design applications with access controls, audit logs, and private deployments where required. Data security is addressed from day one.

A focused pilot can be delivered in a few weeks. Production-grade deployments depend on integration depth, governance requirements, and scale. We plan delivery in phases.

Yes. Integration with ERP, CRM, ticketing systems, and internal tools is a core part of our work. LLMs deliver value only when embedded into workflows.

We design applications with traceability, logging, and controlled data access. Outputs can be reviewed and audited as required by compliance teams.

Perfect data is not required. We work with real enterprise data conditions and design systems that improve over time through feedback and refinement.

Success is measured through reduced manual effort, faster decisions, improved consistency, and better use of expert time. ROI is defined upfront.

ROI is measured through reduced manual effort, fewer errors, faster processing time, and improved compliance. We define success metrics before development begins.

We focus on systems that work in production. Our experience across enterprise environments helps us avoid common pitfalls. Clients trust us because we are practical, transparent, and accountable.

We begin with a discovery discussion to understand the problem, data, and constraints. From there, we define the right scope and approach.