Enterprise AI Strategy: Azure OpenAI as Your Model Foundry

The Enterprise AI Arms Race is Over. You Won.

In today’s C-suite, the word “AI” has moved from a shiny future concept to a mission-critical mandate. We’re not just talking about simple chatbots anymore; we’re talking about massive, intelligent systems that can reason, generate code, and summarize global knowledge in a flash.

For years, accessing this top-tier intelligence (the kind packed into models like GPT-4 and DALL·E) felt like a privilege reserved for a handful of Big Tech giants. The cost? Astronomical. The complexity? Enough to make your IT team weep.

Microsoft Azure has just torn down that wall.

By integrating OpenAI’s models directly into its secure cloud ecosystem as the Azure OpenAI Service, Azure isn’t just offering a service; it’s providing an AI Model Foundry. It’s the ultimate assembly line where enterprises can safely access, customize, and operationalize the world’s most powerful AI, turning months of R&D into weeks of deployment.

The promise is simple: you get the intelligence of the giants, without sacrificing the control you need to sleep at night.

[Image: Azure OpenAI (image source: nanfor.com)]

Azure OpenAI: The Iron-Clad Security Blanket

What separates Azure OpenAI from simply calling an open API? Trust, governance, and the rulebook.

Think of OpenAI’s direct API as a public highway: fast, but exposed. Azure OpenAI is a private, armored convoy moving on that same highway. It delivers the same cutting-edge intelligence (GPT-4, Codex, DALL·E) wrapped in an iron-clad layer of enterprise protection:

- Data Residency: Your sensitive data (prompts, completions, proprietary insights) stays within Microsoft’s Azure environment and is not shared with OpenAI. Private endpoints can keep that traffic off the public internet, and Microsoft and OpenAI do not use it to retrain the underlying models. Your data remains yours.

- Built-in Compliance: For highly regulated industries, this is non-negotiable. Azure OpenAI falls under Azure’s compliance offerings for standards like HIPAA, GDPR, ISO, and FedRAMP, so you build on an already-audited platform; your own application still has to meet those requirements.

- Fine-Grained Access: Control exactly who in your organization can interact with these models, managed directly through Microsoft Entra ID (formerly Azure Active Directory) and Azure role-based access control.

This isn’t just about speed; it’s about responsible scaling. You can build mission-critical AI knowing your compliance officer won’t stage a revolt.

The Foundry Concept: Why We Don’t Build from Scratch Anymore

The term Foundation Model (or “Foundry Model”) is crucial. These are the AI Goliaths: massive models pre-trained on staggering amounts of internet data and designed to be the base layer for everything.

Azure’s genius is turning these giants into modular building blocks. You’re not pouring a concrete slab (training from scratch); you’re installing a pre-fabricated, high-tech engine and customizing the chassis:

| | Traditional AI Approach | Azure Foundry Approach |
|---|---|---|
| Start time | Years | Weeks |
| Cost | Millions in R&D | Optimized usage of pre-trained intelligence |
| Core task | Challenge: building domain expertise | Advantage: customizing global expertise with local data |

This is the power of the Foundry: rapidly adapting general intelligence for specific enterprise use cases, whether it’s powering predictive insights, running sophisticated conversational bots, or tackling intelligent document processing.

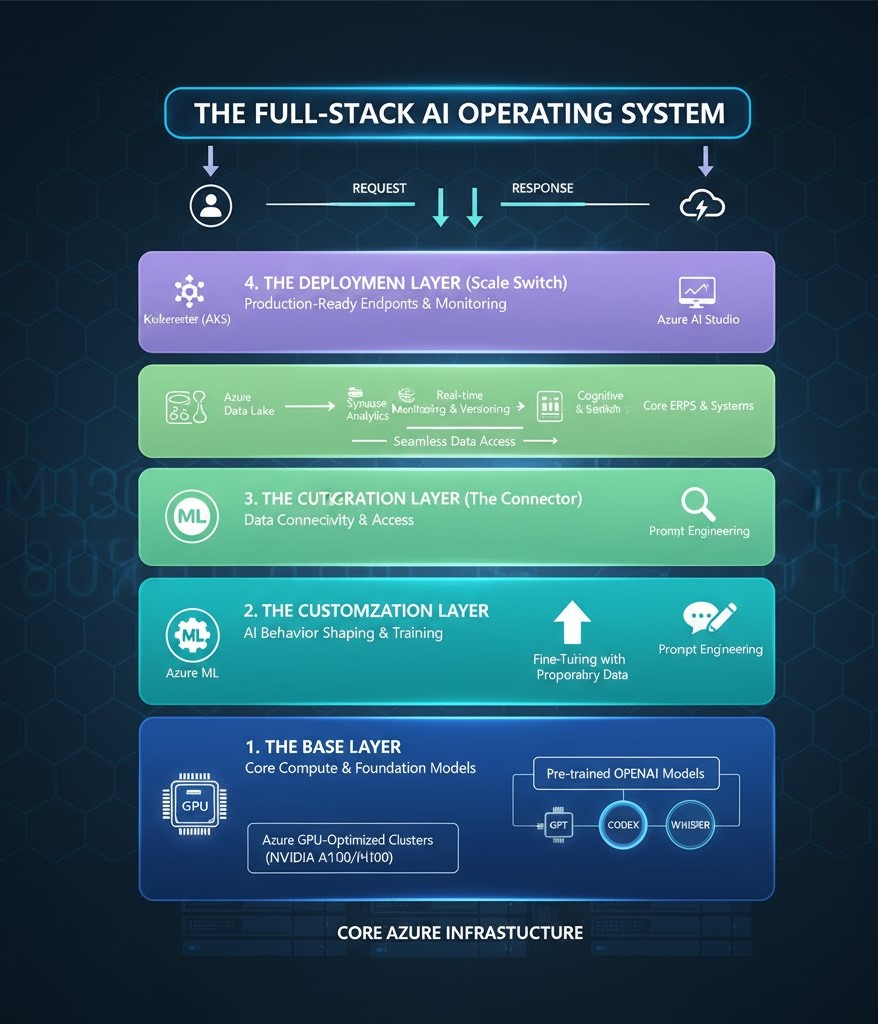

The Architecture: A Full-Stack AI Operating System

Azure doesn’t just host the models; it provides the entire stack necessary for enterprise deployment. This layered architecture turns Azure into an AI operating system:

- The Base Layer (The Engine): Pre-trained OpenAI models (GPT, Codex, Whisper) run on Azure’s bleeding-edge GPU-optimized clusters (think NVIDIA A100s and H100s), the compute that keeps inference fast at scale.

- The Customization Layer (The Fine-Tuner): This is where you inject your corporate DNA. Use Azure ML to fine-tune the base models with your proprietary data, and prompt engineering to guide the AI’s behavior.

- The Integration Layer (The Connector): The AI can’t live in a silo. This layer connects the model to your data sources: Azure Data Lake, Synapse Analytics, Azure AI Search (formerly Cognitive Search), and your core ERPs.

- The Deployment Layer (The Scale Switch): Production-ready, globally scalable inference endpoints managed through Azure AI Studio (now Azure AI Foundry) and Kubernetes (AKS), complete with real-time monitoring and versioning.

Key Capabilities That Deliver Real ROI

Beyond security, the Foundry model delivers three key capabilities that dramatically accelerate ROI:

- Fine-Tuning and Proprietary Data

Your company’s proprietary data is your competitive edge. Azure ML allows you to fine-tune the base models, making them “smarter” about your industry lexicon, product names, and internal processes, while the training data stays within your Azure environment.

- Retrieval-Augmented Generation (RAG)

This is your strongest weapon against hallucinations. RAG combines the generative power of a model like GPT-4 with the precise retrieval capabilities of Azure AI Search (formerly Cognitive Search). Instead of answering from its general training data (and potentially making things up), the model receives relevant passages from your internal documents and databases and is instructed to ground its response in those verifiable facts. Accuracy goes up; risk goes down.
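To make the grounding step concrete, here is a minimal, self-contained sketch of the retrieve-then-prompt pattern. It assumes a tiny in-memory corpus whose hand-made 3-dimensional vectors stand in for real embeddings and a search index; `DOCS`, `retrieve`, and `build_grounded_prompt` are hypothetical names, and the resulting `messages` list is the kind of payload you would send to a chat-completion deployment.

```python
import math

# Hypothetical toy corpus: real vectors would come from an embedding model and
# live in a search index; these 3-d vectors are hand-made for illustration.
DOCS = {
    "refund-policy": ("Refunds are issued within 30 days of purchase.", [0.9, 0.1, 0.0]),
    "shipping": ("Orders ship within 2 business days.", [0.1, 0.9, 0.0]),
    "warranty": ("Hardware carries a one-year limited warranty.", [0.2, 0.1, 0.9]),
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the query vector."""
    ranked = sorted(DOCS.items(), key=lambda item: cosine(query_vec, item[1][1]), reverse=True)
    return [(doc_id, text) for doc_id, (text, _vec) in ranked[:k]]


def build_grounded_prompt(question: str, query_vec: list[float]) -> list[dict]:
    """Build chat messages that instruct the model to answer only from retrieved sources."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query_vec))
    system = (
        "Answer using only the sources below and cite the source id. "
        "If the sources do not contain the answer, say you don't know.\n\n" + context
    )
    return [{"role": "system", "content": system}, {"role": "user", "content": question}]


# The query vector would normally be the embedding of the question itself.
messages = build_grounded_prompt("How long do refunds take?", [0.85, 0.15, 0.05])
print(messages[0]["content"])
```

In production the similarity search runs inside the search service, but the shape of the final prompt (retrieved passages plus an instruction to answer only from them) is the same idea.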

- Seamless MLOps

Scaling AI isn’t just about flipping a switch; it’s about disciplined operations. Azure Machine Learning’s MLOps pipelines automate everything from deployment and version control to continuous monitoring. If your model starts to drift (performing less accurately over time), configured monitoring alerts notify your team as soon as it is detected.
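Drift alerting boils down to comparing recent quality against a baseline. The sketch below assumes you can grade a sample of predictions as correct or incorrect; `DriftMonitor`, the window size, and the tolerance are illustrative choices, not Azure Machine Learning APIs or defaults.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Flag drift when accuracy over the most recent window of graded predictions
    falls more than `tolerance` below the baseline measured at deployment time.

    Window size and tolerance are illustrative, not Azure ML defaults.
    """

    def __init__(self, baseline_accuracy: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically

    def record(self, correct: bool) -> None:
        """Store whether one graded prediction was correct."""
        self.outcomes.append(correct)

    def recent_accuracy(self) -> Optional[float]:
        """Accuracy over the current window, or None until the window is full."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self) -> bool:
        """True once a full window's accuracy drops below baseline - tolerance."""
        accuracy = self.recent_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.90, window=10, tolerance=0.05)
for correct in [True] * 8 + [False] * 2:  # 80% accuracy in the latest window
    monitor.record(correct)
if monitor.drifted():
    print(f"ALERT: recent accuracy {monitor.recent_accuracy():.0%} is below baseline")
```

Waiting for a full window before judging avoids false alarms from the first handful of graded predictions.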

The Challenges You Need to Manage

Let’s be clear: world-class AI is powerful, but it’s not a magic wand. There are real-world complexities you need to anticipate and manage to ensure success at scale.

We call these the “Gotchas” of Enterprise AI:

- The Expertise Gap: While Azure makes the models accessible, turning them into a high-performing tool often requires specialized skills. Fine-tuning a model (teaching it the nuances of your business) isn’t a simple drag-and-drop task; it requires dedicated AI engineering and data science expertise.

- The Cost Cliff: Usage is billed by the token (or by reserved provisioned throughput), so costs climb quickly as traffic scales. Cost management is critical. Without proper governance and optimization, your proof-of-concept can balloon into an unexpected line item on your monthly bill.

- The Context Conundrum (Hallucinations): Even GPT-4 can be confidently wrong! Keeping AI responses factually accurate and grounded in your specific company data demands robust Retrieval-Augmented Generation (RAG) pipelines. RAG is the critical bridge between general intelligence and enterprise truth.

- The Ethical Watchdog: AI systems trained on vast amounts of human-generated data inevitably carry biases. Monitoring for ethical and bias issues is not a one-time setup; it’s an ongoing responsibility that requires continuous auditing and governance frameworks.
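The Cost Cliff is mostly arithmetic: token-based pricing scales linearly with traffic. This sketch estimates monthly spend from average tokens per request; the prices in `HYPOTHETICAL` are placeholders rather than real Azure OpenAI rates, so substitute the current figures for your model and region. `Pricing` and `monthly_cost` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Per-1,000-token prices in USD. Placeholder values only; check the current
    Azure OpenAI price list for your model, region, and deployment type."""
    input_per_1k: float
    output_per_1k: float


def monthly_cost(requests_per_day: int, avg_input_tokens: int, avg_output_tokens: int,
                 pricing: Pricing, days: int = 30) -> float:
    """Estimate monthly spend from average prompt and completion tokens per request."""
    per_request = ((avg_input_tokens / 1000) * pricing.input_per_1k
                   + (avg_output_tokens / 1000) * pricing.output_per_1k)
    return requests_per_day * days * per_request


HYPOTHETICAL = Pricing(input_per_1k=0.01, output_per_1k=0.03)  # illustrative rates

poc = monthly_cost(200, 1_500, 500, HYPOTHETICAL)       # proof of concept
prod = monthly_cost(20_000, 1_500, 500, HYPOTHETICAL)   # same app, production traffic
print(f"PoC: ${poc:,.2f}/month; production: ${prod:,.2f}/month")
```

With these placeholder rates the same workload costs about $180 a month at 200 requests a day and about $18,000 at 20,000 a day: a 100x jump in traffic is a 100x jump in spend, which is why budgets and alerts belong in the plan before launch.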

The Showdown: Azure OpenAI vs. The Competition

When it comes to high-stakes, enterprise-grade AI, you have choices, but they aren’t all created equal. Choosing the right platform means weighing raw access against security, flexibility, and compliance.

Here’s a simplified breakdown of the major players:

| The Platform | Key Strength (The Vibe) | Security Level (The Armor) | Model Access (The Engine) | Customization (The Toolkit) |
|---|---|---|---|---|
| Azure OpenAI | The Enterprise Fortress: built for scale and audit-readiness. | Very High: data stays within Azure and is not shared with OpenAI. | The full OpenAI suite (GPT, Codex, DALL·E). | Strong (deep integration with Azure ML and RAG tooling). |
| OpenAI Direct | The Speed Demon: fast, raw API access. | Moderate: best for public data and non-sensitive tasks. | GPT, DALL·E. | Moderate (prompt engineering plus fine-tuning via the API). |
| AWS Bedrock | The AI Marketplace: a buffet of model choices. | High: a secure, multi-vendor approach. | Anthropic, Stability AI, AI21, etc. | High (via AWS SageMaker). |
| Anthropic | The Responsible AI Pioneer: focus on ethical design. | Moderate: focused on Claude’s safety standards. | Claude models. | Moderate. |

Frequently Asked Questions on Azure OpenAI Foundry Models

- What model architectures are supported in Azure Foundry?

Foundry supports transformer-based architectures, including GPT-family models (GPT-3.5, GPT-4 variants) and encoder-decoder models optimized for tasks like summarization, classification, and embedding generation. Custom model deployment supports fine-tuned transformer checkpoints.

- How is fine-tuning implemented in Foundry?

Foundry allows fine-tuning via supervised fine-tuning and reinforcement learning from human feedback (RLHF). Fine-tuning leverages Azure Blob storage for datasets and supports JSONL, CSV, or Parquet formats with schema validation.
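Catching malformed records before upload saves a failed training job. This sketch validates chat-style JSONL, assuming each line is an object of the form `{"messages": [{"role": ..., "content": ...}, ...]}` (the shape used for fine-tuning chat models); it does not cover CSV or Parquet, and `validate_chat_jsonl` is a hypothetical helper, not part of an Azure SDK.

```python
import json

VALID_ROLES = ("system", "user", "assistant")  # tuple: membership test needs no hashing


def validate_chat_jsonl(lines: list[str]) -> list[str]:
    """Return readable problems found in chat-format fine-tuning records.

    Assumes each line is {"messages": [{"role": ..., "content": ...}, ...]};
    confirm the exact schema for your model in the fine-tuning docs.
    """
    problems = []
    for lineno, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {lineno}: missing non-empty 'messages' list")
            continue
        for i, msg in enumerate(messages):
            if not isinstance(msg, dict) or msg.get("role") not in VALID_ROLES:
                problems.append(f"line {lineno}, message {i}: bad or missing role")
            elif not isinstance(msg.get("content"), str):
                problems.append(f"line {lineno}, message {i}: content must be a string")
        # The model learns from assistant turns, so a record without one teaches nothing.
        if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
            problems.append(f"line {lineno}: no assistant message to learn from")
    return problems


sample = [
    '{"messages": [{"role": "system", "content": "You are a claims assistant."}, '
    '{"role": "user", "content": "Status of claim 123?"}, '
    '{"role": "assistant", "content": "Claim 123 is approved."}]}',
    '{"messages": [{"role": "user", "content": "Hi"}]}',
    "not json",
]
for problem in validate_chat_jsonl(sample):
    print(problem)
```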

- What are the input/output token limits for Foundry models?

Token limits vary by model:

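- GPT-3.5 variants: up to ~16k tokens per request (for example, gpt-35-turbo-16k).

- GPT-4 variants: ~8k or ~32k tokens for the original GPT-4 models; GPT-4 Turbo and GPT-4o support ~128k.

The limit is a context window shared by the prompt and the completion. Requests that exceed it must be split (for example, with sliding-window chunking) or condensed, such as by summarizing earlier content; streaming returns output incrementally but does not raise the limit.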
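A sliding window splits long input into overlapping chunks so that no chunk exceeds the model's limit and text near a boundary appears in two chunks. This sketch assumes whitespace-separated words as a stand-in for real tokens (a tokenizer such as `tiktoken` would give true counts), and `sliding_window_chunks` is a hypothetical helper. In practice, size the window below the model limit to leave room for the system prompt and the completion.

```python
def sliding_window_chunks(tokens: list[str], window: int, overlap: int) -> list[list[str]]:
    """Split tokens into chunks of at most `window` tokens, each sharing `overlap`
    tokens with the previous chunk so text near a boundary keeps its context."""
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # this chunk already reaches the end of the input
    return chunks


# Whitespace-split words stand in for real tokens in this sketch.
tokens = "one two three four five six seven eight nine ten".split()
for chunk in sliding_window_chunks(tokens, window=4, overlap=1):
    print(" ".join(chunk))
```

Each chunk is then sent as its own request, and the per-chunk answers are combined (or summarized) afterwards.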

- How does Foundry handle batching and throughput optimization?

The SDK supports async calls, request batching, and streaming outputs. You can cap concurrency per endpoint to stay within rate limits, set max_tokens to bound response length (and therefore latency), and adjust temperature and top_p to control how varied the output is.
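Capping concurrency on the client side is one simple way to keep a burst of requests from tripping rate limits. This sketch uses `asyncio.Semaphore`; `call_model` is a hypothetical placeholder that sleeps instead of calling a deployment (in a real application it might wrap the `openai` package's `AsyncAzureOpenAI` client), and the limit of 4 is arbitrary.

```python
import asyncio
import random


async def call_model(prompt: str) -> str:
    """Hypothetical stand-in for an async chat-completion call: it sleeps briefly
    and echoes the prompt so the sketch runs without credentials."""
    await asyncio.sleep(random.uniform(0.01, 0.03))
    return f"answer to: {prompt}"


async def run_batch(prompts: list[str], max_concurrency: int = 4) -> list[str]:
    """Run prompts concurrently, never more than `max_concurrency` at a time."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def limited(prompt: str) -> str:
        async with semaphore:  # waits here while max_concurrency calls are in flight
            return await call_model(prompt)

    # gather returns results in submission order, regardless of completion order
    return await asyncio.gather(*(limited(p) for p in prompts))


results = asyncio.run(run_batch([f"question {i}" for i in range(10)]))
print(results[:2])
```

Because `asyncio.gather` preserves submission order, the results line up with their prompts even though the calls finish out of order.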

- How are embeddings generated and stored?

Foundry generates vector embeddings through dedicated embedding models, such as text-embedding-ada-002 or the text-embedding-3 family. These embeddings can be normalized, truncated, or quantized, and they are compatible with Azure AI Search (formerly Cognitive Search) or vector databases like Pinecone or Milvus.
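Normalization, truncation, and quantization are simple vector operations. The sketch below shows one version of each on a made-up 6-dimensional vector: L2 normalization, truncation followed by re-normalization, and symmetric int8 quantization with a single scale factor. Truncation only preserves quality for models trained for it (OpenAI's text-embedding-3 models expose a `dimensions` parameter for this); the helper names are hypothetical.

```python
import math


def l2_normalize(vec: list[float]) -> list[float]:
    """Scale to unit length so a plain dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def truncate(vec: list[float], dims: int) -> list[float]:
    """Keep the first `dims` components, then re-normalize to unit length."""
    return l2_normalize(vec[:dims])


def quantize_int8(vec: list[float]) -> tuple[list[int], float]:
    """Symmetric int8 quantization: integers in [-127, 127] plus one float scale."""
    scale = max((abs(x) for x in vec), default=0.0) / 127 or 1.0  # avoid a zero scale
    return [round(x / scale) for x in vec], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate the original floats from int8 codes."""
    return [v * scale for v in q]


embedding = [0.12, -0.48, 0.33, 0.80, -0.05, 0.02]  # made-up values
unit = l2_normalize(embedding)
short = truncate(embedding, 4)
codes, scale = quantize_int8(unit)
approx = dequantize(codes, scale)
print(codes)
```

Int8 storage takes a quarter of the memory of float32, at the cost of a bounded rounding error of at most half the scale per component.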

- How are latency and inference times optimized?

- Choose appropriate instance types (GPU-accelerated like NDv4 or NVv5).

- Use streaming API for partial outputs.

- Enable batching and caching for repeated prompts.

- Preload fine-tuned weights for hot endpoints to reduce cold-start latency.
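Caching repeated prompts can be as simple as hashing the full request. This is a minimal in-process LRU sketch; `PromptCache` and `fake_model` are hypothetical, and a shared deployment would more likely use an external cache such as Redis. Caching only makes sense for requests where a repeated answer is acceptable, for example with temperature 0.

```python
import hashlib
import json
from collections import OrderedDict


class PromptCache:
    """Small in-process LRU cache keyed on the messages plus generation settings."""

    def __init__(self, max_entries: int = 1024):
        self.max_entries = max_entries
        self._store = OrderedDict()  # request hash -> cached completion text

    @staticmethod
    def key(messages: list[dict], **params) -> str:
        """Stable hash of the request; sort_keys makes dict key order irrelevant."""
        payload = json.dumps({"messages": messages, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, messages: list[dict], call, **params) -> str:
        """Return a cached answer, or invoke `call` and remember its result."""
        k = self.key(messages, **params)
        if k in self._store:
            self._store.move_to_end(k)  # mark as most recently used
            return self._store[k]
        result = call(messages, **params)
        self._store[k] = result
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result


call_log = []


def fake_model(messages, **params):
    """Hypothetical stand-in for a chat-completion call; records each real call."""
    call_log.append(messages)
    return "Refunds are issued within 30 days."


cache = PromptCache(max_entries=2)
msgs = [{"role": "user", "content": "What is our refund window?"}]
cache.get_or_call(msgs, fake_model, temperature=0)
cache.get_or_call(msgs, fake_model, temperature=0)  # identical request: served from cache
print(len(call_log))  # 1
```

Including the generation settings in the key means the same prompt at a different temperature is treated as a different request.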

- How is model versioning and rollback handled?

Each fine-tuned model in Foundry gets a unique version ID. Rollback is supported at the endpoint level; you can switch versions without redeploying the application. Azure CLI and SDK support programmatic version management.
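The rollback answer rests on indirection: the application calls a stable endpoint name, and the platform decides which version sits behind it. The sketch below models that indirection in plain Python under that assumption; `EndpointAlias` and the version IDs are hypothetical and are not Azure SDK or CLI types.

```python
from dataclasses import dataclass, field


@dataclass
class EndpointAlias:
    """Hypothetical stable endpoint name whose backing model version can change.
    Clients only ever reference `name`; promotion and rollback edit `history`."""
    name: str
    history: list[str] = field(default_factory=list)

    @property
    def active_version(self) -> str:
        """The version currently serving traffic (the most recently promoted one)."""
        if not self.history:
            raise LookupError(f"{self.name} has no deployed version")
        return self.history[-1]

    def promote(self, version_id: str) -> None:
        """Point the alias at a new version, keeping earlier versions for rollback."""
        self.history.append(version_id)

    def rollback(self) -> str:
        """Retire the active version and return the one now serving traffic."""
        if len(self.history) < 2:
            raise LookupError("no earlier version to roll back to")
        self.history.pop()
        return self.active_version


support_bot = EndpointAlias("support-bot")
support_bot.promote("ft-model-v1")  # made-up version IDs
support_bot.promote("ft-model-v2")
print(support_bot.active_version)   # ft-model-v2
support_bot.rollback()
print(support_bot.active_version)   # ft-model-v1
```

Application code never changes during a rollback because it only knows the alias name.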

- How is security enforced at the model and data level?

- Network isolation via VNet integration.

- Data encryption at rest (AES-256) and in transit (TLS 1.2+).

- Role-based access control (RBAC) for endpoint management.

- Optional prompt redaction and logging filters to prevent sensitive data leakage.
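A logging filter is one place to apply prompt redaction before payloads reach your log store. This sketch uses the standard library's `logging.Filter`; the regular expressions are illustrative assumptions that catch only simple email, US SSN, and card-number formats, so a production system would use a dedicated PII detection service instead. `RedactPIIFilter` and the logger name are hypothetical.

```python
import logging
import re

# Illustrative patterns only: simple emails, US SSNs, and 13-16 digit card numbers.
PII_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD]"),
]


class RedactPIIFilter(logging.Filter):
    """Redact PII from each record's final message before this handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # apply %-style args first so they get redacted too
        for pattern, placeholder in PII_PATTERNS:
            message = pattern.sub(placeholder, message)
        record.msg, record.args = message, ()
        return True  # keep the record, now redacted


logger = logging.getLogger("llm.requests")
handler = logging.StreamHandler()
handler.addFilter(RedactPIIFilter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.info("prompt=%s", "Email jane.doe@contoso.com about SSN 123-45-6789")
# emitted as: prompt=Email [EMAIL] about SSN [SSN]
```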

- How are multi-turn conversations handled?

Foundry supports session-based memory with conversation IDs. Developers can store prior messages and resend them with each request to maintain state, while controlling context length to stay within token limits. Advanced strategies include rolling summaries for long conversations.
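Rolling summaries keep the newest turns verbatim and compress everything older. This sketch assumes a word count as a crude token estimate, reserves a fixed number of tokens for the summary, and takes the summarizer as a parameter; `fit_history` and `naive_summarize` are hypothetical, and in practice the summarizer might be another model call.

```python
from typing import Callable


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_history(messages: list[dict], budget: int,
                summarize: Callable[[list[dict]], str],
                summary_reserve: int = 20) -> list[dict]:
    """Keep the system prompt plus the newest turns that fit in `budget` tokens and
    fold any older turns into one summary message (assumed <= summary_reserve tokens)."""
    system, turns = messages[0], messages[1:]
    used = count_tokens(system["content"]) + summary_reserve
    kept = []
    for msg in reversed(turns):  # newest first, so recent context survives
        cost = count_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.insert(0, msg)
        used += cost
    dropped = turns[: len(turns) - len(kept)]
    if not dropped:
        return [system] + kept
    summary = {"role": "system",
               "content": "Summary of earlier conversation: " + summarize(dropped)}
    return [system, summary] + kept


def naive_summarize(turns: list[dict]) -> str:
    """Hypothetical summarizer; in practice this could be another model call."""
    return f"{len(turns)} earlier messages about a delayed order."


history = [
    {"role": "system", "content": "You are a helpful support agent."},
    {"role": "user", "content": "My order 123 has not arrived yet."},
    {"role": "assistant", "content": "Sorry to hear that, let me check."},
    {"role": "user", "content": "It was due last Monday."},
    {"role": "assistant", "content": "It is delayed at the depot and ships tomorrow."},
    {"role": "user", "content": "Can I get a refund for shipping?"},
]
trimmed = fit_history(history, budget=45, summarize=naive_summarize)
for m in trimmed:
    print(m["role"], "|", m["content"])
```

Stopping at the first turn that does not fit keeps the retained history contiguous, so the model never sees a conversation with a hole in the middle.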

- What monitoring and debugging tools are available for developers?

- Azure Monitor and Application Insights for latency, throughput, and error tracking.

- SDK supports logging request/response payloads (with PII redaction).

- Usage and performance metrics let developers inspect token utilization and request-level latency.
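The numbers these dashboards chart can also be computed from your own request logs. This sketch aggregates hypothetical per-request records into error rate, p50/p95 latency, and average token usage; the token field names mirror the `usage` block returned with chat completions (`prompt_tokens`, `completion_tokens`), while `RequestLog` and `summarize_requests` are illustrative names.

```python
import statistics
from dataclasses import dataclass


@dataclass
class RequestLog:
    """Telemetry for one request. Token fields mirror a chat completion's `usage`
    block; latency is assumed to be measured by the client."""
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int
    ok: bool = True


def summarize_requests(logs: list[RequestLog]) -> dict:
    """Aggregate request logs into the headline numbers a dashboard would chart.
    Assumes at least two records."""
    cuts = statistics.quantiles([r.latency_ms for r in logs], n=100, method="inclusive")
    return {
        "requests": len(logs),
        "error_rate": sum(not r.ok for r in logs) / len(logs),
        "p50_ms": cuts[49],  # 50th percentile
        "p95_ms": cuts[94],  # 95th percentile
        "avg_prompt_tokens": statistics.mean(r.prompt_tokens for r in logs),
        "avg_completion_tokens": statistics.mean(r.completion_tokens for r in logs),
    }


# Made-up sample: ten requests at 100 ms, 200 ms, ..., 1000 ms, one of which failed.
logs = [RequestLog(latency_ms=100 * i, prompt_tokens=500, completion_tokens=120, ok=(i != 7))
        for i in range(1, 11)]
print(summarize_requests(logs))
```

Tail percentiles such as p95 usually reveal latency spikes that an average would hide.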

Conclusion: Azure as the AI OS for the Next Decade

The convergence of Azure’s secure cloud, its sophisticated tooling, and the sheer power of OpenAI’s models is redefining enterprise technology. Azure isn’t just hosting the future of AI; it’s providing the operating system that allows every business to build, own, and operate its own specialized intelligence.

The future of AI in your business isn’t about building one massive model; it’s about strategically assembling a custom Model Foundry.

Are you ready to move your AI strategy from a complex cost center to a scalable engine for innovation?