Azure AI Services: The Engine of Intelligent Enterprise Innovation

In the fast-evolving landscape of artificial intelligence, every organization faces the same challenge — how to move from AI experimentation to real-world impact.

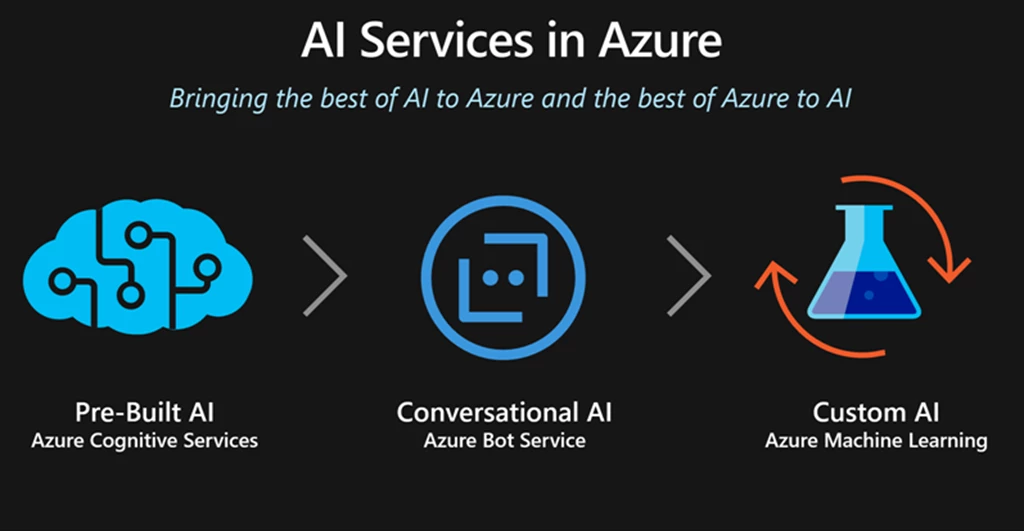

Microsoft’s Azure AI Services (formerly Azure Cognitive Services) is designed exactly for that purpose. It brings Microsoft’s prebuilt and customizable AI offerings under one umbrella, giving teams the tools to build intelligent, responsible, and scalable AI solutions without starting from scratch.

From recognizing speech and translating languages to deploying enterprise-scale generative AI applications, Azure AI Services brings together the best of Microsoft’s AI innovations in one integrated ecosystem.

Azure AI Services

Image Courtesy: azure.microsoft.com

What Is Azure AI Services?

Think of Azure AI as Microsoft’s all-in-one AI toolbox — a suite of pre-trained APIs, foundation models, and development platforms that allow you to infuse intelligence into any application, no matter the use case or scale.

Whether you’re a developer building a chatbot, a data scientist training a domain-specific model, or an enterprise deploying custom AI agents, Azure AI provides everything you need — from infrastructure to governance — within a single, secure environment.

Why Azure AI Matters

Modern businesses don’t just need AI; they need AI that’s ready to deploy, adaptable, and trustworthy.

Azure AI Services bridges that gap by combining Microsoft’s research-backed models with enterprise-grade scalability and security. This means you can go from concept to production faster, with enterprise controls that help keep your data protected.

The Core Pillars of Azure AI Services

Azure AI is structured around four main pillars that together make AI development both powerful and practical:

- Prebuilt AI Capabilities

Instant intelligence for everyday applications.

Azure offers a library of ready-to-use AI APIs that let you add intelligence to apps with just a few lines of code — no deep ML expertise required.

These APIs handle common AI tasks like:

- Vision – Image recognition, object detection, and content moderation.

- Speech – Speech-to-text, text-to-speech, and real-time transcription.

- Language – Sentiment analysis, summarization, and natural language understanding.

- Translator – Multilingual translation at enterprise scale.

- Search – Semantic and vector-based search experiences.

- Content Safety – Detecting harmful or inappropriate content for responsible AI use.

These prebuilt APIs are designed for instant integration — perfect for teams that want AI functionality now, not weeks from now.
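To make "a few lines of code" concrete, here is a minimal sketch that builds a sentiment-analysis request for the Azure AI Language REST `analyze-text` operation using only the Python standard library. The endpoint, key, and `api-version` value are assumptions: replace them with your own resource's values and the version the current documentation recommends. The request is built but not sent.

```python
import json
import urllib.request

# Assumed placeholders: use your own Language resource endpoint and key.
ENDPOINT = "https://my-language-resource.cognitiveservices.azure.com"
API_KEY = "<your-key>"
API_VERSION = "2023-04-01"  # assumed GA version of analyze-text; check current docs


def build_sentiment_request(texts, endpoint=ENDPOINT, key=API_KEY):
    """Build (but do not send) a SentimentAnalysis request for Azure AI Language."""
    body = {
        "kind": "SentimentAnalysis",
        "analysisInput": {
            "documents": [
                {"id": str(i), "language": "en", "text": t}
                for i, t in enumerate(texts, start=1)
            ]
        },
    }
    url = f"{endpoint}/language/:analyze-text?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


req = build_sentiment_request(["The onboarding was quick and painless."])
# With real values, urllib.request.urlopen(req) would send it and return JSON results.
```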

- Custom and Foundation Models

Tailor-made intelligence, built on world-class AI.

For organizations ready to go beyond off-the-shelf AI, Azure AI provides the flexibility to customize and fine-tune models to match your business data and domain expertise.

Using services like Azure OpenAI, Azure AI Foundry, and Azure Machine Learning, developers can work directly with state-of-the-art foundation models such as GPT-4, CLIP, or Phi — and adapt them with their own datasets.

This enables scenarios such as:

- Fine-tune GPT models for industry-specific conversations.

- Train custom vision models for manufacturing defect detection.

- Create intelligent recommendation engines tailored to your business.

Azure handles the infrastructure, so you can focus on innovation — not GPUs and deployment pipelines.
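As a sketch of the data-preparation side of fine-tuning: Azure OpenAI fine-tuning for chat models takes JSONL training files in which each line holds a `messages` array of system, user, and assistant turns. The question/answer pairs and system prompt below are hypothetical placeholders, and a real training set needs far more examples.

```python
import json

# Hypothetical domain examples; a real training set needs many more.
EXAMPLES = [
    ("What is our standard payment term?", "Net 30 from the invoice date."),
    ("Do we accept purchase orders by email?", "Yes, send them to the procurement mailbox."),
]
SYSTEM_PROMPT = "You are a support assistant for a B2B distributor."  # assumed


def to_chat_jsonl(pairs, system_prompt=SYSTEM_PROMPT):
    """Serialize (question, answer) pairs as chat-format JSONL, one example per line."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


jsonl = to_chat_jsonl(EXAMPLES)
# Write `jsonl` to a .jsonl file and upload it as the training file for a fine-tuning job.
```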

- Agentic Workflows, Prompts, and RAG

From models to intelligent systems.

The newest wave of Azure AI capabilities revolves around agent-based architectures and retrieval-augmented generation (RAG), enabling you to build systems that plan multi-step tasks, call tools, and ground their answers in retrieved information.

Through Azure AI Foundry (formerly Azure AI Studio) and prompt flow, you can design, orchestrate, and test these workflows with no-code or low-code tools:

- Build AI agents that combine multiple skills (like summarizing, searching, and reasoning).

- Use RAG pipelines to enhance generative models with your company’s private knowledge base.

- Experiment with prompt engineering in a safe, auditable environment.

This turns static AI models into dynamic systems capable of answering nuanced questions, searching documents, and automating decision-making.
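Before a private knowledge base can feed a RAG pipeline, documents are usually split into overlapping chunks that are embedded and indexed separately. A minimal sketch, assuming whitespace-separated words as a rough proxy for model tokens; `chunk_text` and its default sizes are hypothetical and worth tuning for your embedding model.

```python
def chunk_text(text, max_words=200, overlap=40):
    """Split text into overlapping windows of words for embedding and indexing.

    Consecutive chunks share `overlap` words so that sentences cut at a boundary
    still appear whole in at least one chunk.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks
```

Each chunk would then be embedded and stored in the search index together with its source document ID, so answers can cite where they came from.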

- Infrastructure, Security, and Governance

Enterprise-grade foundation for trustworthy AI.

Behind the scenes, Azure ensures that all AI operations run on a secure, compliant, and scalable foundation.

Every component of Azure AI Services is backed by the same enterprise-grade infrastructure that powers Microsoft’s global cloud.

Key features include:

- Private networking & secure endpoints – Keep your data traffic isolated and safe.

- Integrated monitoring & observability – Track model performance, usage, and costs.

- Resource management & scaling – Automatically allocate resources to meet demand.

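- Compliance and governance – Compliance offerings covering major global standards such as GDPR, ISO, HIPAA, and SOC (whether a given workload is compliant still depends on how you configure and use the services).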

In short, Azure AI doesn’t just help you build smart applications — it helps you build responsible ones.

The Bigger Picture: Azure AI’s Unified Vision

What makes Azure AI unique is how seamlessly all these parts come together.

It’s not just a set of APIs or ML tools — it’s a complete ecosystem for building AI-first solutions, from prototype to production.

Imagine this workflow:

- A developer uses Azure AI Vision to extract text from scanned invoices (OCR).

- The extracted data feeds into an Azure OpenAI model fine-tuned for financial reasoning.

- The model generates insights or summaries, which are stored securely in Azure SQL Database.

- The entire system runs serverlessly through Azure Functions, scaling on demand.

That’s the power of integration — a single cloud, a unified AI layer, and infinite possibilities.
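The invoice workflow above can be sketched as one function per document. This is illustrative only: `extract_text` and `summarize` are hypothetical stand-ins for the Azure AI Vision (or Document Intelligence) and Azure OpenAI calls, and an in-memory SQLite database stands in for Azure SQL Database so the sketch runs locally. In Azure, the body of `process_invoice` is roughly what an Azure Functions trigger (for example, on a new blob) would execute.

```python
import sqlite3


def extract_text(document_bytes):
    """Hypothetical stand-in for an OCR call; here the 'scan' is already plain text."""
    return document_bytes.decode("utf-8")


def summarize(text):
    """Hypothetical stand-in for an Azure OpenAI call; here it keeps the first line."""
    return text.splitlines()[0] if text else ""


def process_invoice(conn, invoice_id, document_bytes):
    """One pipeline run: OCR, then summarize, then store the result."""
    summary = summarize(extract_text(document_bytes))
    conn.execute(
        "INSERT INTO invoice_summaries (invoice_id, summary) VALUES (?, ?)",
        (invoice_id, summary),
    )
    conn.commit()
    return summary


conn = sqlite3.connect(":memory:")  # local stand-in for Azure SQL Database
conn.execute("CREATE TABLE invoice_summaries (invoice_id TEXT PRIMARY KEY, summary TEXT)")
process_invoice(conn, "INV-001", b"Invoice INV-001: 3 items, total 1,250.00\nDue in 30 days")
```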

Azure AI and the Future of Intelligent Applications

As enterprises move toward AI-first architectures, Azure’s unified AI ecosystem is becoming the backbone of innovation.

It democratizes access to cutting-edge models while keeping enterprise controls intact — a balance few platforms achieve.

With the ongoing evolution of Azure AI Foundry, Copilot Studio, and Fabric integration, Microsoft is clearly signaling its next vision:

“AI everywhere — safely, responsibly, and seamlessly integrated into every app, workflow, and decision.”

From intelligent customer service bots to autonomous analytics pipelines, Azure AI Services is helping businesses turn imagination into production-ready intelligence.

Problem Statements That Can Be Solved

Here are technical, real-world problems that Azure AI Services addresses well:

- Multimodal Content Understanding

- Problem: You have images, documents, and videos, and need to extract structured information (text, tables, faces, objects) and combine it.

- Solution: Use Azure AI Vision, Document Intelligence, and Video Indexer, combined with Language services for summarization and Translator for translation.

- Conversational Agents / Virtual Assistants

- Problem: Building a support bot that needs to understand natural language, maintain context, fetch data, and take actions.

- Solution: Use Azure OpenAI (LLM), augmented with Azure AI Search or vector search for the knowledge base, plus agent frameworks.

- Generative AI for Business Content

- Problem: Automatically generating reports, summaries, draft content, code snippets, and similar material tailored to a specific domain.

- Solution: Use Azure OpenAI foundation models with fine-tuning or prompt engineering (via prompt flow in Azure AI Foundry), and integrate Azure AI Content Safety.

- Speech / Voice Interfaces

- Problem: Applications needing speech recognition, voice-bots, or real-time speech translation.

- Solution: Use Speech services (speech-to-text, text-to-speech, translation) combined with Language APIs (such as conversational language understanding) to interpret domain-specific requests.

- Search & Retrieval / RAG

- Problem: Users query across large corpora (documents, structured data, public & private knowledge) and need relevant, contextual responses.

- Solution: Use Azure AI Search / vector search, integrate with Azure OpenAI to produce responses based on retrieved context.

- Ensuring Responsible / Safe AI Deployment

- Problem: Generative AI may produce harmful or biased content, and deployments raise compliance and security concerns.

- Solution: Content Safety services, guardrails, identity & role-based access, logging & monitoring.
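For the responsible-AI problem, a common pattern is to gate model inputs and outputs on a Content Safety analysis. The sketch below assumes a text-analysis response shaped like the Azure AI Content Safety REST API's (a `categoriesAnalysis` list of `category`/`severity` entries, plus `blocklistsMatch`); the per-category thresholds are hypothetical policy choices, not service defaults.

```python
# Hypothetical policy: block anything at or above these severity levels.
THRESHOLDS = {"Hate": 2, "SelfHarm": 2, "Sexual": 2, "Violence": 4}


def check_content(analysis, thresholds=THRESHOLDS, default_threshold=2):
    """Return (allowed, violations) for a Content Safety text-analysis result."""
    violations = [
        item["category"]
        for item in analysis.get("categoriesAnalysis", [])
        if item["severity"] >= thresholds.get(item["category"], default_threshold)
    ]
    if analysis.get("blocklistsMatch"):  # any custom blocklist hit is a violation
        violations.append("Blocklist")
    return not violations, violations
```

Running the same check on both the user's prompt and the model's reply keeps the policy in one place.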

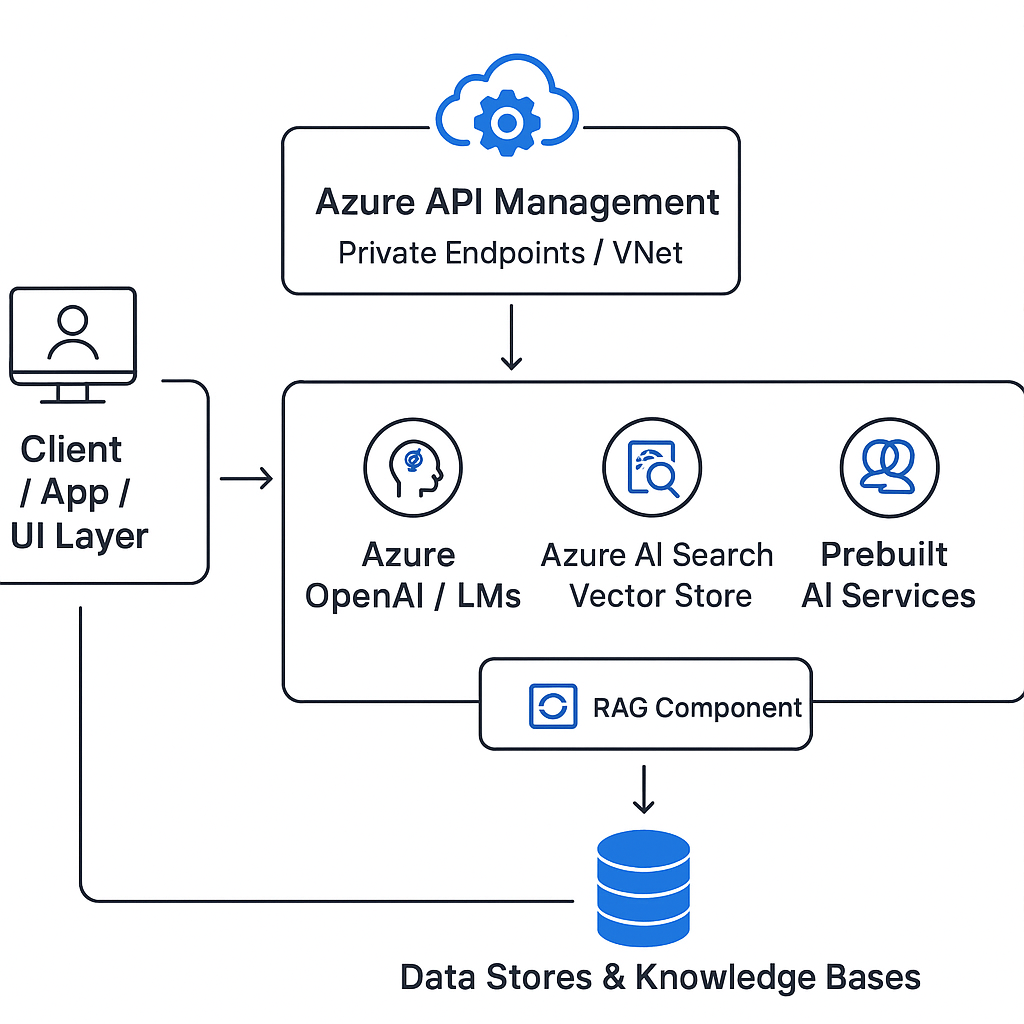

Architecture Diagram: Azure AI Foundry + Agent / RAG System:

Explanation of components:

- Client / UI: Could be a web front-end, mobile app, voice assistant, or chatbot.

- API Management & VNet / Private Endpoints: Acts as the ingress layer, ensuring requests are secured, managed, and throttled.

- Azure OpenAI / LLMs: The “brain” for generative tasks—text generation, summarization, dialogue. Can include fine-tuning.

- Azure AI Search / Vector Search: Retrieval component for RAG. Stores embeddings / semantic indexes; can do hybrid search (keyword + vector).

- Prebuilt AI Services: For tasks not needing custom models—OCR, image-analysis, speech processing, translation.

- Prompt / Agent Flow: Tools and orchestration for building prompt pipelines, agent workflows, integrating custom logic (e.g. calling external services).

- Data Stores / Knowledge Bases: Blob storage, vector databases, SQL/NoSQL, document stores. Used to store inputs, context, prompt data, user data, logs.

- Security / Monitoring / Governance: Key Vault, role-based access, content safety, logging (e.g. Application Insights), cost/usage monitoring.
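Hybrid search in Azure AI Search merges keyword and vector result lists using Reciprocal Rank Fusion (RRF): each document scores the sum of 1 / (k + rank) over the lists it appears in. The service does this server-side; the sketch below only illustrates the technique locally, and `k = 60` is the constant commonly used with RRF, treated here as an assumption.

```python
from collections import defaultdict


def reciprocal_rank_fusion(result_lists, k=60):
    """Merge ranked lists of document IDs with Reciprocal Rank Fusion.

    A document's fused score is the sum of 1 / (k + rank) over every list it
    appears in (ranks start at 1). Ties are broken by document ID.
    """
    scores = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["a", "b", "c"]  # e.g. BM25 ranking
vector_hits = ["c", "a", "d"]   # e.g. nearest neighbours by embedding
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

Documents found by both retrievers (here `a` and `c`) rise to the top, which is why hybrid search tends to be more robust than either method alone.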

Building a RAG-Powered AI Workflow on Azure

Let’s look at how a real-world Azure AI application might come together — not as abstract theory, but as a practical workflow that developers can actually use.

Below is a simplified outline of how retrieval-augmented generation (RAG) can be implemented using Azure OpenAI and Azure AI Search (formerly Azure Cognitive Search).

The Logic Behind the Code

The general idea is simple:

- A user asks a question.

- The system looks for relevant information in a vector database (Azure AI Search).

- It builds a smart prompt with that context.

- Finally, it sends the prompt to an Azure-hosted GPT model to generate an answer.
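The four steps above can be sketched end to end. This is a self-contained illustration rather than Azure client code: term-frequency vectors stand in for an Azure OpenAI embeddings model, an in-memory dict stands in for the Azure AI Search index, and the documents are hypothetical. The resulting `messages` list is what you would send as the body of an Azure OpenAI chat completions request for your deployment.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base chunks (in practice: embedded and indexed in Azure AI Search).
DOCS = {
    "hr-01": "Employees accrue 20 vacation days per year.",
    "it-07": "Reset your VPN password from the self-service portal.",
    "fin-03": "Expense reports are due within 30 days of purchase.",
}


def embed(text):
    """Toy term-frequency vector; a stand-in for an embeddings model call."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


INDEX = {doc_id: embed(text) for doc_id, text in DOCS.items()}  # built once, offline


def retrieve(question, k=2):
    """Step 2: return the IDs of the k most similar documents."""
    q = embed(question)
    return sorted(INDEX, key=lambda d: cosine(q, INDEX[d]), reverse=True)[:k]


def build_messages(question, doc_ids):
    """Step 3: ground the prompt in the retrieved context and ask for citations."""
    context = "\n".join(f"[{d}] {DOCS[d]}" for d in doc_ids)
    system = (
        "Answer using only the sources below and cite their IDs in brackets. "
        "If the answer is not in the sources, say you don't know.\n\nSources:\n" + context
    )
    return [{"role": "system", "content": system}, {"role": "user", "content": question}]


question = "How many vacation days do employees get?"  # Step 1
messages = build_messages(question, retrieve(question))
# Step 4 (not shown): POST {"messages": messages} to your deployment's chat completions endpoint.
```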

Governance & Monitoring — The Often Overlooked Backbone

A robust AI system isn’t just about clever prompts and APIs; it’s about control, accountability, and visibility.

In production, organizations usually integrate:

- Usage logging (who asked what, and when)

- Token and cost tracking

- Content safety checks (to flag harmful content or policy violations)

- Latency and scale monitoring through Azure Monitor or Application Insights

This layer ensures compliance, security, and performance — the trifecta every enterprise AI deployment needs.
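Token and cost tracking can start as a thin wrapper around each model call. Azure OpenAI chat completion responses include a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`; the sketch below logs those per user alongside an estimated cost. The per-1,000-token prices are hypothetical placeholders, and in production you might send these records to Application Insights instead of the console, redacting sensitive questions first.

```python
import logging
from dataclasses import dataclass, field
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ai-usage")

# Hypothetical prices per 1,000 tokens; look up real rates for your model and region.
PRICE_PER_1K = {"prompt": 0.005, "completion": 0.015}


@dataclass
class UsageTracker:
    records: list = field(default_factory=list)

    def record(self, user_id, question, usage):
        """Log who asked what and when, with token counts and an estimated cost."""
        cost = (
            usage["prompt_tokens"] * PRICE_PER_1K["prompt"]
            + usage["completion_tokens"] * PRICE_PER_1K["completion"]
        ) / 1000
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "question": question,
            "total_tokens": usage["total_tokens"],
            "cost_usd": round(cost, 6),
        }
        self.records.append(entry)
        log.info("ai_call %s", entry)
        return entry

    def total_cost(self):
        """Sum of estimated costs across all recorded calls."""
        return round(sum(r["cost_usd"] for r in self.records), 6)
```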

Why Developers Love Azure AI Services

- Rapid Time-to-Value

Azure AI provides plug-and-play APIs across vision, speech, and language. Developers can spin up smart features in hours, not weeks.

- Enterprise-Grade Scale & Performance

Running on Microsoft’s optimized infrastructure, from GPU silicon to global edge networks, means applications can scale without breaking a sweat.

- Flexible Deployment Options

Whether your workloads run in the cloud, on-premises, or at the edge, Azure lets you run many AI services (including as containers) wherever they’re needed. Private endpoints and VNets provide the isolation and compliance many industries demand.

- Unified Governance and Management

Through Azure AI Foundry, teams get dashboards for model monitoring, responsible AI evaluation, and usage insights, all in one place.

- Customization Made Easy

Developers can fine-tune foundation models, design custom prompts, and even build full-fledged AI agents powered by their own private data.

- Multi-Modal Power

Azure combines vision, speech, and text in a unified environment — perfect for applications like customer support bots that “see,” “listen,” and “respond” naturally.

Challenges You Should Plan For

Even the best tools come with trade-offs. Some common hurdles include:

- Cost Management: Large models can burn through compute credits fast. Each layer — embeddings, search, and inference — adds to the bill.

- Latency Concerns: Real-time scenarios (like chatbots or live transcription) can face cold-start delays unless optimized.

- Integration Complexity: RAG pipelines demand careful data handling — embedding updates, database syncs, and workflow orchestration.

- Security and Compliance: Sensitive data used in embeddings or fine-tuning must be properly encrypted and governed (GDPR, HIPAA, etc.).

- Vendor Lock-In Risks: Azure’s ecosystem is powerful but proprietary; portability to other clouds can be challenging.

- Model Limitations: Even GPT-class models can hallucinate. Human validation or automated checks are essential.
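One way to tame the embedding-update problem is to store a content hash for each indexed chunk and re-embed only what changed. A minimal sketch, assuming chunks have stable IDs; `plan_sync` is a hypothetical helper that returns which chunks to embed and which to delete from the index.

```python
import hashlib


def content_hash(text):
    """Stable fingerprint of a chunk's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(current_chunks, indexed_hashes):
    """Diff the current chunks against hashes recorded at the last indexing run.

    current_chunks: {chunk_id: text}; indexed_hashes: {chunk_id: sha256 hex}.
    Returns (to_embed, to_delete): new or changed IDs, and IDs that disappeared.
    """
    to_embed = sorted(
        cid for cid, text in current_chunks.items()
        if indexed_hashes.get(cid) != content_hash(text)
    )
    to_delete = sorted(set(indexed_hashes) - set(current_chunks))
    return to_embed, to_delete
```

Only the `to_embed` chunks incur embedding costs on each run, which also helps with the cost concern above.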

How Azure AI Stands Against Competitors

🟦 Azure AI (Microsoft)

Best for organizations that already run in the Microsoft ecosystem (Azure, Power BI, Dynamics, Office). Excellent governance, security, and API variety.

🟧 AWS AI/ML

Amazon’s SageMaker, Comprehend, and Lex are strong in infrastructure flexibility. However, integrating them with enterprise identity systems often needs extra work.

🟩 Google Cloud AI

Vertex AI shines in model training, multilingual processing, and video analytics. But Azure leads in prebuilt API maturity and compliance readiness.

🟨 Open-Source Alternatives

Hugging Face, LLaMA, and Mistral offer unmatched control — ideal for those who want to self-host. Yet they require DevOps investment and constant maintenance.

🟪 Hybrid / Edge Deployments

In industries where milliseconds matter — like healthcare or manufacturing — edge AI still reigns supreme. Azure supports containers and Arc-based deployments for such low-latency use cases.

Final Thoughts

Azure AI Services have matured far beyond “just APIs.”

They now represent a full-stack ecosystem for enterprise-ready AI — spanning prebuilt intelligence, generative capabilities, and governance tools that meet real-world business needs.

For developers, that means less time wrestling with infrastructure and more time innovating — building agents, copilots, and intelligent applications that actually make a difference.

Frequently Asked Questions:

Q1. What is the difference between Azure Cognitive Services and Azure AI / Azure AI Services / AI Foundry?

- Cognitive Services was the earlier branded name for Azure’s pre-built AI APIs.

- “Azure AI Services” is the modern umbrella (prebuilt + custom + foundation models).

- Azure AI Foundry (formerly Azure AI Studio) is the newer hub and portal for building generative AI and agent workflows, prompt flows, model fine-tuning, and more.

Q2. How do I ensure data privacy when using Azure AI for sensitive content?

- Use private endpoints / VNets.

- Use Azure Key Vault for secrets.

- Encrypt data at rest and in transit.

- Check the compliance offerings available in your region (e.g., GDPR, HIPAA).

- Apply content filtering and PII detection or redaction to reduce the risk of leaking private information.
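For the last point, Azure AI Language offers a managed PII detection feature. The regex sketch below is a much cruder, swapped-in technique that only shows where redaction sits in the flow (before text leaves your application for a model); the patterns are illustrative assumptions that will miss many PII formats and can over-match long digit strings.

```python
import re

# Illustrative patterns only; not a substitute for a real PII detection service.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text):
    """Replace each pattern match with a [LABEL] placeholder, emails first."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```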

Q3. Can I bring my own model or custom model into Azure AI Services?

Yes. You can fine-tune supported models through Azure OpenAI, or deploy custom models through Azure Machine Learning or Azure AI Foundry. You can also bring your own model artifacts and serve them as inference endpoints.
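When you deploy your own model to an Azure Machine Learning managed online endpoint, the scoring script conventionally defines `init()` (run once at startup) and `run(raw_data)` (run per request). The sketch below follows that shape; the `{"data": [...]}` input format and the trivial keyword "model" are hypothetical stand-ins so it runs without real artifacts.

```python
import json
import os

model = None


def init():
    """Called once when the deployment starts: load model artifacts here."""
    global model
    model_dir = os.getenv("AZUREML_MODEL_DIR", ".")
    # Hypothetical: a real script would load files from model_dir (e.g. with joblib).
    # A keyword rule stands in for the model so this sketch runs anywhere.
    model = {"source": model_dir, "positive_words": {"good", "great", "excellent"}}


def run(raw_data):
    """Called per request with the raw JSON body; returns a JSON-serializable result."""
    texts = json.loads(raw_data)["data"]
    predictions = [
        int(any(word in model["positive_words"] for word in text.lower().split()))
        for text in texts
    ]
    return {"predictions": predictions}
```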

Q4. What are the limits (throughput, latency)?

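It depends on the service and tier. For Azure OpenAI, for example, throughput is governed by tokens-per-minute quotas on standard deployments or by provisioned throughput units (PTUs) on provisioned deployments. Region, network path (including private endpoints), and whether you provision dedicated compute also matter, so benchmark in the region and configuration you plan to use in production.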
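Whatever the limits turn out to be, clients should expect throttling. Azure OpenAI typically responds with HTTP 429 when a deployment's quota is exceeded, often with a `Retry-After` header. A minimal retry sketch, assuming your client wrapper raises the hypothetical `RateLimitError` carrying that header's value: it honors `Retry-After` when present and otherwise falls back to exponential backoff with full jitter.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error your client wrapper raises on HTTP 429."""

    def __init__(self, retry_after=None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds, from the Retry-After header if present


def call_with_retry(fn, max_attempts=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying on RateLimitError up to max_attempts times in total."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except RateLimitError as err:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            if err.retry_after is not None:
                delay = err.retry_after  # the service told us how long to wait
            else:
                cap = min(max_delay, base_delay * 2 ** (attempt - 1))
                delay = random.uniform(0, cap)  # full jitter spreads out retries
            sleep(delay)
```

Injecting `sleep` keeps the function easy to test and lets you swap in an async-friendly wait later.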

Q5. What languages / SDKs are supported?

Azure offers SDKs for Python, C#/.NET, JavaScript/TypeScript, and Java, and REST APIs are also available. For infrastructure, you can use the Azure CLI, Terraform, or ARM/Bicep templates.

Conclusion — ThirdEye Data’s Take on Azure AI Services

From our experience at ThirdEye Data, Azure AI Services are rapidly becoming the foundation layer for intelligent enterprise systems. For organizations that:

- require fast go-to-market for AI-enabled apps (chatbots, document processing, etc.),

- need strong governance, security, and compliance,

- want to build custom agents, RAG pipelines, or refine domain-specific models,

Azure AI Services (with AI Foundry, OpenAI, prebuilt APIs, search, etc.) provide a rich, mature, well-integrated toolkit.

However, success depends on careful architecture—balancing cost vs performance, ensuring reliable data pipelines, managing prompt engineering, and embedding responsible AI practices.

In summary: Azure AI Services are not just “bells and whistles” but are substantial infrastructure for enterprises looking to build scalable, responsible, intelligent systems. At ThirdEye Data, we see them as key enablers for innovation, speed, and competitive differentiation.