Generative AI Development Services

Building Generative AI Solutions that Deliver Real Business Value

We help enterprises develop and deploy custom generative AI models and solutions that automate workflows, improve knowledge management, personalize customer engagement, and support data-driven decision-making. Our time-efficient and cost-effective development approach ensures tangible business impact and higher ROI from generative AI initiatives.

Business Challenges or Problems We Solve with Generative AI Solutions

Enterprises are proactively exploring generative AI solutions to address core challenges in productivity, knowledge access, customer experience, and decision-making. As an experienced generative AI development company, we build purpose-driven solutions that solve these business-critical challenges.

ThirdEye Data helps enterprises to resolve the following real-world problems:

Most enterprises have realized that manual workflows for documentation, reporting, content creation, and data analysis are time-consuming and heavily resource-dependent. We develop generative AI solutions that automate these repetitive processes to save time and money.

Critical knowledge often remains trapped in disconnected systems, emails, and documents, making discovery difficult. We build RAG-based knowledge management systems that allow users to query and retrieve accurate, contextual insights instantly from large internal repositories.

Modern sales and marketing processes demand personalized communication across channels, but legacy systems lack context awareness. We develop generative AI models for enhanced customer engagement by personalizing responses, offers, and support content in real-time.

Business decision-makers struggle with fragmented data and delayed insights. We build GenAI-based decision-support systems that summarize reports, simulate scenarios, and generate analytical insights from both structured and unstructured data, helping leaders make faster, data-driven decisions.

Building generative AI solutions from scratch is costly, time-consuming, and resource-intensive. We apply optimized development frameworks and hybrid approaches to accelerate experimentation, reuse existing models, and reduce the total cost of ownership (TCO) for PoC and MVP development.

Enterprises often lack a unified foundation for seamless AI adoption due to siloed data systems. We build integrated generative AI architectures that connect data sources, APIs, and business applications to create an intelligent, cohesive ecosystem ready for continuous AI innovation.

Enterprises usually handle massive amounts of unstructured data, including emails, chats, PDFs, images, and videos that remain underutilized. We develop multimodal generative AI systems capable of interpreting and generating insights from text, images, audio, and video.

Many organizations struggle to operationalize AI due to limited in-house expertise. Our end-to-end generative AI development approach bridges this skill gap. From model design and fine-tuning to deployment, governance, and monitoring, we take care of everything.

Enterprises Trust Our Generative AI Development Expertise

Adding Value to Core Business Functions with GenAI Capabilities

At ThirdEye Data, we develop and deploy purpose-built generative AI solutions that address industry-specific challenges and accelerate digital transformation across core business functions.

Financial Services & Banking

- Advanced Research & Analytical Copilots

- AI-driven Document Summarization and Contextual Query

- AI Agents for Improved Customer Engagement

Retail & E-Commerce

- AI-generated product descriptions, campaign content, and recommendations

- Predictive demand planning and dynamic pricing optimization

- Conversational AI shopping assistants

Manufacturing & Industrial Operations

- Generative design and simulation using GANs and VAEs

- Automated defect detection reports and vision-based quality control

- Predictive maintenance copilots leveraging multimodal data

Healthcare & Life Sciences

- Personalized patient interactions, virtual health assistants, and AI-driven care recommendations

- Automated medical documentation, trial summary generation, and compliance reporting

- AI copilots for rapid medical literature analysis and insights extraction

Energy, Utilities & Sustainability

- Generative forecasting for energy demand and consumption anomalies

- AI copilots for inspection and maintenance workflows

- Automated ESG report generation and data summarization

Media, Publishing & Entertainment

- Scriptwriting, copy generation, and visual content creation

- Automated translation and tone adaptation

- Audience insight and engagement optimization using LLMs

Tourism & Hospitality

- AI-generated itineraries and travel recommendations

- Intelligent booking and reservation assistants

- Automated review summarization and sentiment monitoring

Supply Chain & Logistics

- AI-generated shipment summaries and documentation

- Generative route optimization and demand forecasting

- Supplier intelligence copilots and contract summarization

AdTech & Marketing

- Generative text, image, and video ad variations

- Automated performance summaries and strategy recommendations

- AI-driven segmentation and message personalization

Primary Offerings of Our Generative AI Development Services

As an end-to-end generative AI development company, we combine our hands-on experience in model engineering, application development, and strategic consulting to help enterprises transform their operations through generative AI applications.

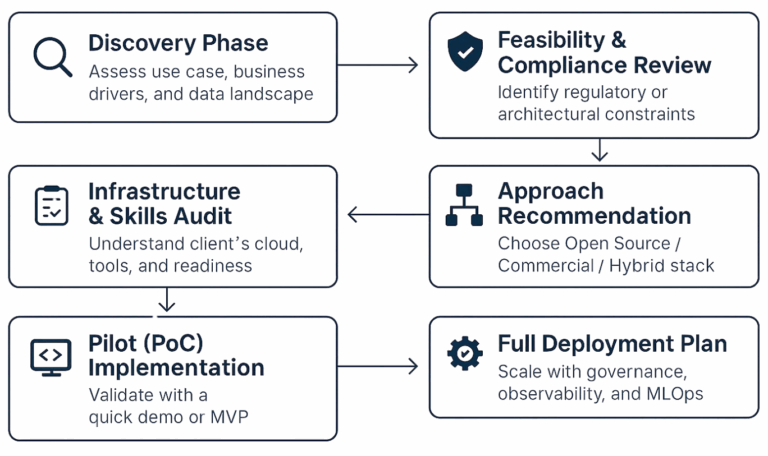

Generative AI Consulting & Strategy

We guide enterprises in identifying high-impact GenAI use cases, defining roadmaps, and selecting the right approach, whether open-source frameworks, commercial tools, or a hybrid of both, for maximum business value.

Generative AI Model Development & Fine-Tuning

We develop, customize, and fine-tune generative AI models and LLMs to reflect domain-specific knowledge, workflows, and data, ensuring accuracy, relevance, and compliance.

Custom Generative AI Application Development

We build custom generative AI applications that automate processes, generate contextual content, summarize insights, and power decision intelligence, all fully aligned with enterprise needs.

AI Agents & Custom Copilot Development

We create autonomous AI agents and enterprise copilots that can analyze data, execute multi-step workflows, and collaborate across business functions to improve efficiency and knowledge reuse across teams.

OpenAI & Commercial Platform-Based Development

We design and deploy solutions leveraging APIs from OpenAI, Anthropic, Gemini, and Azure OpenAI. This is ideal for rapid PoCs or production-grade deployments with trusted platforms.

Low Code / No Code Generative AI Application Development

We enable faster time-to-market through low code and no code Generative AI solutions, allowing enterprises to build AI-driven apps, dashboards, and assistants with minimal engineering effort.

RAG & Knowledge Integration Systems

We implement Retrieval-Augmented Generation (RAG) systems that connect models with your enterprise data, enabling secure, real-time knowledge access across documents, CRMs, and data lakes.
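To make the retrieval step concrete, here is a minimal sketch of how a RAG flow can rank internal documents against a question and assemble a grounded prompt. It is an illustration only: it uses simple word-overlap (cosine) scoring from the Python standard library in place of learned embeddings and a vector database, and the `DOCS` snippets, function names, and prompt wording are hypothetical. The call to the language model itself is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble a grounded prompt; sending it to a model is out of scope here."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {query}"
    )


# Hypothetical snippets standing in for documents, CRM notes, and data-lake records.
DOCS = [
    "Refund requests must be approved by the finance team within 5 business days.",
    "The VPN client is installed from the internal software portal.",
    "Quarterly sales reports are stored in the data lake under the finance folder.",
]

if __name__ == "__main__":
    question = "Who approves refund requests?"
    print(build_prompt(question, retrieve(question, DOCS, top_k=1)))
```

In a production system, the scoring function would typically be replaced by an embedding model and a vector store, while the overall shape (retrieve, then ground the prompt in what was retrieved) stays the same.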

Generative AI Platform Integration

We integrate Generative AI capabilities into your existing enterprise systems, data platforms, and workflows using microservices, APIs, and orchestration layers for seamless scalability.

Multimodal Generative AI Solutions

We design multimodal systems capable of understanding and generating text, image, audio, and video, expanding automation and creativity across enterprise functions.

Our Generative AI Development Project References

Designed and implemented a Multi-Agent Investment Research Tool, a Copilot-based assistant that automates the end-to-end process of investment discovery, data collection, analysis, and reporting.

Developed a multi-agent system that transforms how loyalty programs are managed and experienced for a leading marketing company.

Designed and implemented a Copilot-based Supplier Chatbot integrated into a comprehensive Warehouse Management System (WMS) built on Microsoft Power Platform.

Developed an AI-powered knowledge repository chatbot application designed to transform how IT professionals access and interact with organizational knowledge.

Developed a Generative AI-powered travel planning platform as an MVP for busy professionals seeking well-curated itineraries.

Developed a Generative AI-based document analytics platform to extract pertinent entities from a variety of file formats, such as .pdf, .xls, and .doc, originating from multiple sources.

Our Flexible, Enterprise-Centric Approach to Generative AI Development

At ThirdEye Data, we recognize that every enterprise is at a unique stage of its generative AI journey. That’s why we don’t take a one-size-fits-all approach. Our team brings deep, hands-on expertise in developing GenAI systems using diverse technology stacks and development methodologies, including open-source frameworks, commercial tools, cloud-based AI solutions, managed AI services, low-code/no-code platforms, and hybrid models. Each approach is carefully crafted to align with the client’s priorities, use-case objectives, data governance requirements, and infrastructure maturity.

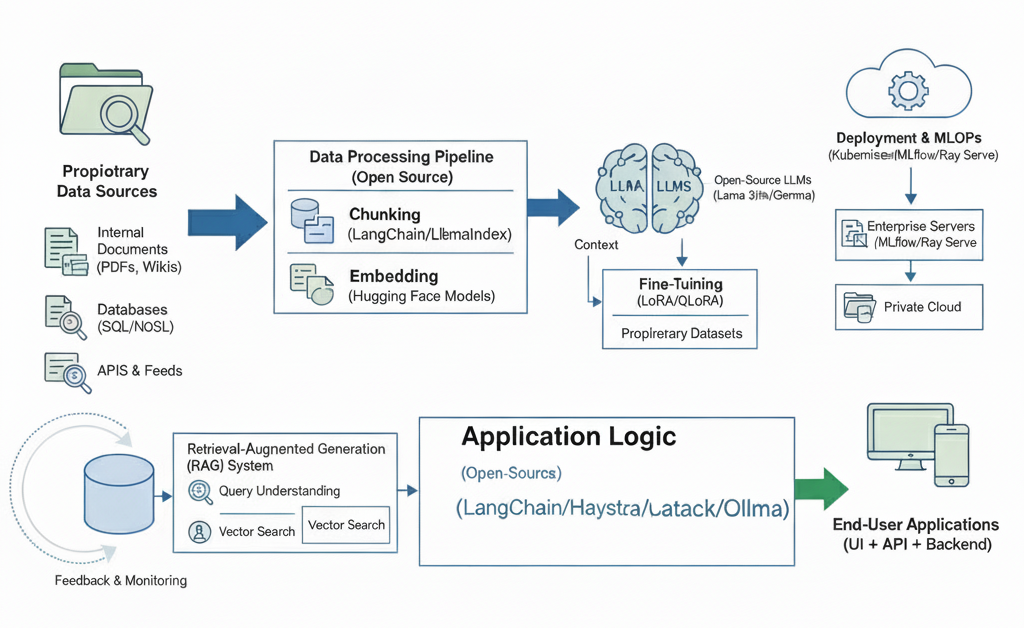

Generative AI Development with Open Source Frameworks

We use open-source frameworks and technology stacks for enterprises that need full control, transparency, and long-term cost efficiency. This approach is ideal for organizations with strict data privacy needs or on-premises deployment requirements.

Our team works with community-driven frameworks, libraries, and tools that provide greater flexibility and control. Enterprises benefit from our deep expertise in open-source ecosystems to build scalable and customizable Generative AI solutions.

At ThirdEye Data, we recommend this approach when ownership, data security, and extensibility are top priorities.

What Our Open-source-based GenAI Development Approach Involves

- Using open-source LLMs such as Llama 3, Mistral, Falcon, Gemma, Dolly, and Vicuna.

- Building pipelines using LangChain, Haystack, LlamaIndex, or Ollama.

- Leveraging vector databases like Milvus, Chroma, FAISS, or Weaviate.

- Using open-source deployment and orchestration tools like Kubernetes, MLflow, and Ray Serve.

- Integrating with open APIs, private data connectors, and RAG pipelines.

Our Role in Developing GenAI Applications Using Open-source Frameworks

- Custom LLM fine-tuning on proprietary datasets.

- RAG system development with open-source models.

- End-to-end GenAI app development (UI + API + backend), fully open-source.

- Deployment on enterprise servers or private cloud environments.

Business Benefits or Value We Deliver with This Approach

- Full control over data, IP, and deployment.

- High customizability for enterprise-specific workflows.

- Lower recurring costs and no vendor lock-in.

- Seamless integration with internal systems and security layers.

Trade-Offs of Using Open-Source Tech Stack

- Requires strong in-house or partner AI engineering capability.

- Longer development cycles for complex use cases.

Generative AI Development Using Commercial Tools & Platforms

We use commercial tools and platforms for enterprises that need speed, reliability, and compliance when developing a quick PoC or MVP.

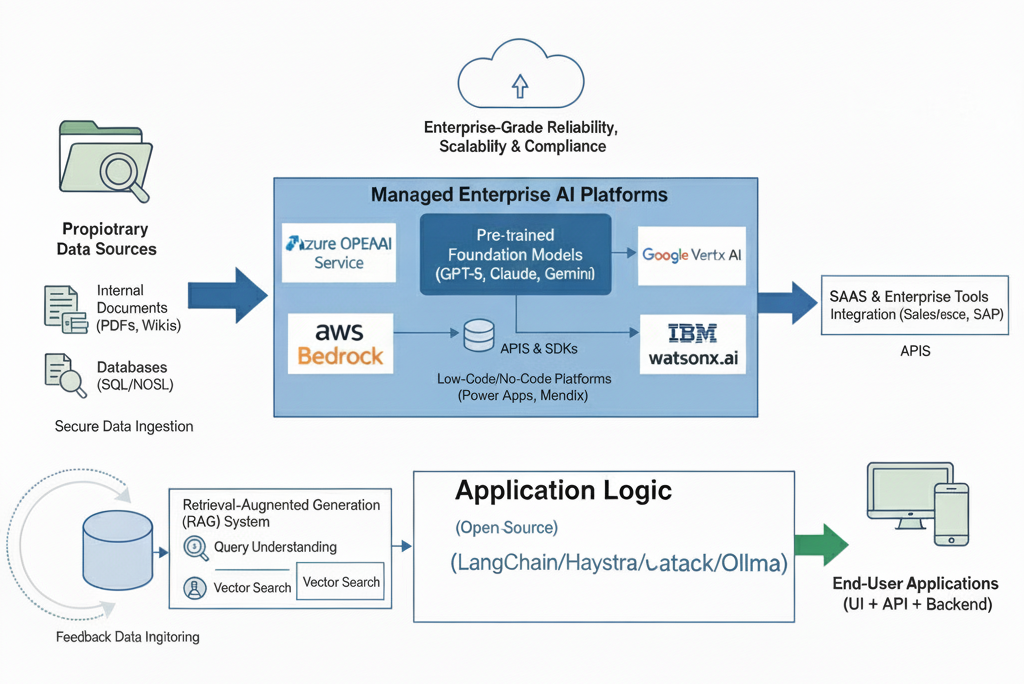

Our team builds on trusted, vendor-managed AI platforms and pre-trained models from OpenAI, Anthropic, Google Vertex AI, Azure OpenAI, AWS Bedrock, and IBM watsonx. This helps us accelerate development while maintaining strong performance and enterprise-grade standards.

With commercial tech stacks, we access ready-to-use models, APIs, SDKs, enterprise support, SLAs, and effortless scalability. This shortens development cycles and enables rapid movement from concept to deployment.

At ThirdEye Data, we recommend this approach for building custom copilots, chat-based tools, and workflow automation systems. It’s how we deliver Generative AI solutions within a secure, managed, and compliant enterprise environment.

What Our Commercial Tools & Platforms Approach Involves

- Leveraging commercial foundation models and APIs: OpenAI (GPT-4/5), Anthropic (Claude), Google Gemini, Microsoft Copilot Studio, AWS Bedrock, etc.

- Using enterprise AI platforms like:

  - Azure OpenAI, AWS Bedrock, Google Vertex AI

  - C3.ai, DataRobot, IBM watsonx.ai

  - Low Code/No Code platforms (e.g., Microsoft Power Platform, Mendix, Appian, Dataiku, Akkio, Peltarion).

- Integrating with SaaS and enterprise tools like Salesforce, SAP, ServiceNow via APIs.

Our Role in This Approach

- Model selection consulting.

- Integration and orchestration using commercial APIs.

- Low-code solution customization for domain-specific use cases.

- Hybrid workflow automation where LLMs connect with existing enterprise systems.

Business Advantages

- Faster time to market and prototyping.

- Enterprise-grade compliance, scalability, and SLAs.

- Easier collaboration between IT and business teams.

- Robust ecosystem and third-party integration readiness.

Trade-Offs of Using Commercial Tools & Platforms

- Vendor dependency and recurring licensing costs.

- Limited visibility into model internals and fine-tuning flexibility.

Hybrid Approach (Open Source + Commercial)

We consider this the most practical approach for modern enterprises. It delivers the right balance of flexibility, speed, and control.

Our hybrid approach is designed for businesses seeking co-development or expert augmentation. It is well-suited for generative AI use cases that require both open-source and commercial model orchestration, combining efficiency with reliability.

We integrate commercial APIs for inference and reasoning with open-source pipelines for embeddings, vector search, and governance. This hybrid setup ensures that sensitive enterprise data remains secure while leveraging commercial models for advanced reasoning and language understanding.

What This Approach Involves

- Using commercial APIs for LLM inference alongside open-source tools and pipelines for data handling, embeddings, storage, and orchestration.

- Combining enterprise cloud infrastructure with custom microservices and vector DBs.

- Deploying models in a modular way: some internal (on-prem) and some via API.
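The modular split described above can be sketched as a small router that keeps prompts containing sensitive data on an internal model and sends everything else to a commercial API. This is a simplified illustration under stated assumptions: the two regular expressions are examples rather than a complete sensitivity policy, and `on_prem_model` and `commercial_api` are hypothetical placeholders for real model calls.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Patterns treated as sensitive in this sketch (illustrative, not exhaustive).
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),         # US SSN-like numbers
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),  # email addresses
]


def contains_sensitive(text: str) -> bool:
    """Return True if any sensitive pattern appears in the text."""
    return any(p.search(text) for p in SENSITIVE_PATTERNS)


@dataclass
class Route:
    name: str
    handler: Callable[[str], str]


def on_prem_model(prompt: str) -> str:
    # Hypothetical placeholder for a self-hosted open-source model call.
    return f"[on-prem] {prompt[:40]}"


def commercial_api(prompt: str) -> str:
    # Hypothetical placeholder for a vendor-hosted API call.
    return f"[commercial] {prompt[:40]}"


def choose_route(prompt: str) -> Route:
    """Keep sensitive prompts internal; send the rest to the commercial API."""
    if contains_sensitive(prompt):
        return Route("on_prem", on_prem_model)
    return Route("commercial", commercial_api)


if __name__ == "__main__":
    for text in (
        "Email jane.doe@example.com about the renewal terms",
        "Summarize our public press release",
    ):
        route = choose_route(text)
        print(route.name, "->", route.handler(text))
```

Real deployments usually rely on a data classification service or policy engine rather than regular expressions, but the routing decision sits at the same point in the pipeline.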

Our Role in This Approach

- Multi-model architecture blending GPT, Claude, Llama, and internal models

- Hybrid RAG pipeline development (private vector DB + commercial embeddings)

- Cloud-native deployment with on-prem data control

- Multi-model orchestration

- Governance and observability layers for performance and cost control

Business Advantages

- Balanced flexibility, compliance, and performance.

- Smart cost optimization using open source for heavy data handling, and commercial tools for inference.

- A future-proof architecture that makes it easy to switch or upgrade models.

- Better governance and observability features for AI operations.

Trade-Offs of Adopting a Hybrid Approach

- Requires thoughtful orchestration to manage dependencies.

- Slightly higher complexity during setup.

Consult with Our Generative AI Developers

Partner with our experts to choose the right Generative AI development approach and maximize ROI for your enterprise.

Technology Stack We Use for Developing Generative AI Solutions

Commercial Tools & Platforms

- OpenAI: GPT-4, GPT-4o, fine-tuning APIs

- Anthropic: Claude 3, Claude 3.5

- Google: Gemini 1.5 Pro, Vertex AI

- Microsoft: Azure OpenAI Service, Copilot Studio

- Amazon: AWS Bedrock (Anthropic, Cohere, Meta, Mistral, Stability AI models)

- IBM: watsonx.ai and Granite Models

- Cohere: Command-R, Embed

- Databricks MosaicML for managed model training

- Azure AI Studio

- Oracle AI Services

- DataRobot

- C3.ai

- H2O.ai Cloud

- Dataiku

- SAS Viya

- Snowflake Cortex AI

- Appian

- Mendix

- Akkio

- Peltarion

- CognitiveScale

- Automation Anywhere

- UiPath GenAI Connectors

- Salesforce Einstein GPT

- SAP Joule

- ServiceNow Now Assist

- Atlassian Intelligence

- Adobe Firefly

- Sensei GenAI

Open-Source Frameworks & Tools

- Meta: LLaMA 3

- Mistral AI: Mistral 7B, Mixtral

- Falcon

- Gemma

- Dolly

- Vicuna

- RedPajama

- Phi-3 Mini

- Yi-Large

- Command-R+

- LlamaIndex

- Haystack

- Ollama

- Dust.tt

- Text Generation Inference

- vLLM

- Ray Serve

- Gradio

- Chainlit

- Vector Databases: Milvus, Weaviate, Chroma, FAISS, Qdrant, Pinecone (commercial API hybrid)

- Embeddings: SentenceTransformers, InstructorXL, OpenAI embeddings, Cohere embeddings

- VAEs (Variational Autoencoders)

- Autoregressive Models

- Diffusion Models

- PEFT

- LoRA

- QLoRA

- DeepSpeed

- Hugging Face Accelerate

- Transformers Trainer

- PyTorch Lightning

- Keras

- JAX

- Kubeflow

- Weights & Biases (Community)

- Neptune.ai

- ClearML

- ZenML

- Evidently AI

- TruLens

- Guardrails AI

- Kubernetes

- Docker

- Ray

- Triton Inference Server

- ONNX Runtime

Explore Our 20+ Pre-built Generative AI Applications

We have built 20+ generative AI applications for various use cases across domains. You can explore them if you like.

Answering Frequently Asked Questions

Generative AI development services refer to the comprehensive process of designing, developing, and deploying AI-powered systems capable of producing content, insights, or predictions based on enterprise data.

At ThirdEye Data, we help organizations identify high-impact use cases, select appropriate models such as GPT, PaLM, Claude, DALL-E, LLaMA, or NeMo Megatron, and fine-tune them for enterprise-specific datasets to ensure outputs are relevant and actionable.

Our services extend beyond model development to creating full-fledged applications that can automate tasks like report generation, content creation, knowledge management, and decision support. We focus on seamless integration with existing workflows, ERP/CRM systems, or custom software, ensuring minimal disruption. Continuous monitoring, governance, and risk mitigation are embedded in our process, enabling businesses to adopt AI confidently while realizing measurable operational efficiency and ROI.

Traditional AI applications primarily analyze, classify, or predict based on historical or structured data. Examples include fraud detection, demand forecasting, and customer segmentation. Generative AI, in contrast, creates new content or insights by learning patterns from existing data, enabling tasks such as drafting reports, generating images, producing code, or synthesizing complex business insights.

At ThirdEye Data, we often combine these paradigms to deliver hybrid solutions. For instance, an enterprise may use predictive models to identify risk factors while generative AI simultaneously produces stakeholder-specific reports, accelerating decision-making. This dual approach ensures that enterprises not only gain analytical intelligence but also actionable, creative outputs, making AI a strategic enabler rather than just a supporting tool.

Enterprises adopt generative AI to accelerate operations, reduce manual effort, and gain actionable insights at scale. From a technical standpoint, generative AI automates processes that are labor-intensive or repetitive, such as content creation, document summarization, coding assistance, or insight generation from unstructured data. From a business perspective, it enables faster decision-making, enhances personalization in customer-facing operations, and improves overall productivity.

ThirdEye Data emphasizes modular, incremental deployment, ensuring that AI adoption does not disrupt day-to-day operations. Our experience shows that enterprises achieve maximum value when generative AI is customized to their domain, fine-tuned on proprietary data, and integrated strategically into workflows, delivering measurable ROI in both efficiency and innovation.

Implementing generative AI in enterprise environments poses both technical and operational challenges. Technically, large-scale models can require significant computational resources, leading to higher costs, and may produce inaccurate outputs if not carefully fine-tuned.

Integrating AI with legacy systems adds another layer of complexity, as it must coexist with existing software without disrupting processes. On the business side, companies face adoption challenges, employee training requirements, and the need to demonstrate clear ROI while ensuring compliance with industry regulations.

ThirdEye Data addresses these challenges through cost-optimized model selection, incremental deployment strategies, user training, and robust monitoring. By embedding AI in a controlled and gradual manner, we mitigate operational risks while maximizing impact and business value.

Generative AI creates tangible business value by automating complex and repetitive processes, enhancing content scalability, and generating insights that support better decision-making.

Enterprises can streamline operations such as report generation, marketing content creation, coding, or research synthesis. Additionally, generative AI enables personalization at scale, improving customer engagement and satisfaction.

ThirdEye Data ensures that these AI solutions are aligned with enterprise-specific goals by fine-tuning models with proprietary data and integrating them seamlessly into workflows.

This approach not only drives operational efficiency but also enables organizations to capture measurable ROI in terms of time saved, increased productivity, reduced costs, and accelerated strategic decision-making.

Enterprises can lower the cost of generative AI implementation by strategically selecting models and deployment strategies.

ThirdEye Data emphasizes using task-specific or fine-tuned models instead of always deploying the largest models, reducing compute and storage requirements. We leverage cloud-native, hybrid, or edge deployments to optimize infrastructure costs and allow pay-per-use scaling.
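As a rough illustration of this selection logic, the sketch below picks the cheapest model that still meets a quality bar and estimates its monthly cost. The catalog is entirely hypothetical: the model names, quality scores, and per-token prices are placeholders, not benchmarks or vendor pricing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    quality: float             # score on an internal evaluation set, 0.0 to 1.0
    cost_per_1k_tokens: float  # in USD


# Hypothetical catalog: names, scores, and prices are placeholders.
CATALOG = [
    ModelOption("small-task-tuned", quality=0.86, cost_per_1k_tokens=0.0004),
    ModelOption("mid-general", quality=0.90, cost_per_1k_tokens=0.003),
    ModelOption("large-general", quality=0.94, cost_per_1k_tokens=0.03),
]


def cheapest_adequate(
    catalog: list[ModelOption], min_quality: float
) -> Optional[ModelOption]:
    """Return the lowest-cost model whose quality meets the bar, or None."""
    adequate = [m for m in catalog if m.quality >= min_quality]
    if not adequate:
        return None
    return min(adequate, key=lambda m: m.cost_per_1k_tokens)


def monthly_cost(model: ModelOption, tokens_per_month: int) -> float:
    """Estimated monthly spend in USD for a given token volume."""
    return model.cost_per_1k_tokens * tokens_per_month / 1000


if __name__ == "__main__":
    choice = cheapest_adequate(CATALOG, min_quality=0.85)
    if choice is not None:
        print(choice.name, round(monthly_cost(choice, 50_000_000), 2))
```

The quality threshold is the key input: it should come from an evaluation on the enterprise's own task data, since a smaller fine-tuned model is only a saving if it clears that bar.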

Incremental adoption, starting with proofs of concept or MVPs, ensures that resources are invested only where clear value is demonstrated. Additionally, we create reusable AI assets such as prompts, templates, and workflow modules, which further reduce redundant work and accelerate deployment. This cost-conscious strategy ensures that organizations can adopt AI without overspending while still capturing significant business benefits.

Smooth integration of generative AI into existing operations requires careful planning and modular deployment.

At ThirdEye Data, we adopt a phased approach, introducing AI capabilities gradually, starting with non-critical or highly repetitive tasks.

AI modules are containerized and designed to interface with ERP, CRM, or custom enterprise applications without interfering with existing workflows. Employees are trained to interact with AI through familiar interfaces, ensuring a seamless transition. Continuous monitoring and feedback loops are implemented to verify AI outputs and maintain quality, compliance, and relevance.

This methodology enables organizations to realize the benefits of AI without experiencing downtime or disruption, fostering adoption across teams.
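One common way to introduce a capability gradually is a percentage-based rollout gate. The sketch below is an assumption about how such a gate could look, not a description of a specific ThirdEye Data tool: it hashes a user ID together with a feature name so that each user lands in a stable bucket, and the feature is enabled only for buckets below the current rollout percentage. The feature and user names are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user and feature to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Return True if this user falls inside the current rollout percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return rollout_bucket(user_id, feature) < percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    for pct in (5, 25, 100):
        enabled = sum(in_rollout(u, "ai-report-drafts", pct) for u in users)
        print(f"{pct}% rollout enables {enabled} of {len(users)} users")
```

Because the bucket is deterministic, a user who is included at a low percentage stays included as the percentage increases, which keeps the experience consistent during a phased rollout.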

The choice of generative AI models depends on the type of task and the desired output. For text generation, models like GPT, Claude, LLaMA, and PaLM excel at producing reports, summaries, chatbots, and content automation. For image generation, DALL-E and other multimodal models support design, marketing visuals, and product visualization. Audio and multimodal generation, using models like Gemini or NeMo Megatron, allows enterprises to generate speech, video scripts, and multimedia content.

ThirdEye Data tailors model selection based on cost, performance, and integration feasibility, and often fine-tunes models with enterprise-specific data to maximize output relevance.

By aligning the model capabilities with business objectives, we ensure that AI deployment delivers meaningful and measurable outcomes.

ROI from generative AI is realized when AI outputs directly impact productivity, cost savings, or revenue generation.

ThirdEye Data begins by identifying high-impact use cases where automation or AI-assisted insights can deliver measurable results.

Metrics are established to quantify efficiency gains, cost reductions, or speed of decision-making. By starting with proofs of concept and gradually scaling to full deployment, we validate value before significant investments.
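The arithmetic behind such metrics can be sketched in a few lines. The example below uses the standard ROI formula, (benefit minus cost) divided by cost, plus a simple payback period. The input figures (hours saved, hourly cost, project cost, working weeks per year) are illustrative assumptions, not results from any engagement.

```python
def annual_benefit(
    hours_saved_per_week: float, hourly_cost: float, weeks_per_year: int = 48
) -> float:
    """Yearly value of time saved, in the same currency as hourly_cost."""
    return hours_saved_per_week * hourly_cost * weeks_per_year


def roi(benefit: float, cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


def payback_months(annual_benefit_value: float, cost: float) -> float:
    """Months needed for cumulative benefit to cover the cost."""
    if annual_benefit_value <= 0:
        return float("inf")
    return cost / (annual_benefit_value / 12)


if __name__ == "__main__":
    # Illustrative inputs: 40 hours saved per week at 50 per hour, project cost 60,000.
    benefit = annual_benefit(hours_saved_per_week=40, hourly_cost=50)
    print(f"Annual benefit: {benefit:,.0f}")
    print(f"First-year ROI: {roi(benefit, 60_000):.0%}")
    print(f"Payback period: {payback_months(benefit, 60_000):.1f} months")
```

Running the same calculation at the PoC stage and again after scaling gives a consistent basis for deciding whether further investment is justified.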

Fine-tuning models for enterprise data ensures that outputs are actionable rather than generic, improving adoption and effectiveness. Continuous monitoring and optimization further ensure that AI continues to deliver maximum value over time.

Enterprises benefit from measurable improvements without incurring unnecessary costs or operational disruption.

Generative AI finds applications across multiple industries and functions.

- In finance, it can automate report generation, client communication, and predictive analytics.

- Retail and eCommerce businesses leverage generative AI for personalized marketing content, dynamic product descriptions, and visual merchandising.

- Healthcare organizations use AI to synthesize research, summarize patient data, and provide virtual assistant support.

- Manufacturing and logistics benefit from process documentation, predictive maintenance insights, and resource optimization.

- In media and entertainment, generative AI assists with scriptwriting, advertising content creation, and AI-assisted design workflows.

At ThirdEye Data, we ensure that these solutions are tailored to enterprise-specific workflows, integrating seamlessly with operational systems to deliver practical, actionable, and measurable value.

Integrating generative AI into enterprise workflows requires a careful balance between innovation and operational stability.

At ThirdEye Data, we use a layered deployment approach where AI modules are introduced incrementally.

This begins with automating low-risk, repetitive tasks and gradually scales to more critical operations. Our teams ensure that AI interacts with existing ERP, CRM, or custom software through APIs or containerized modules, preventing interference with day-to-day activities.

Comprehensive training is provided for employees so they can leverage AI outputs effectively, while monitoring systems continuously validate model performance and output quality.

This approach allows enterprises to adopt generative AI seamlessly, unlocking its value without operational downtime or disruption.

Deploying AI in legacy systems requires careful planning to avoid disruption and maximize value.

ThirdEye Data advocates for a modular and hybrid deployment strategy, where AI is implemented incrementally in isolated modules that can interact with legacy systems without altering critical processes. This includes using containerized services, microservices, or API integrations, enabling new AI capabilities without requiring a full system overhaul.

Legacy data is preprocessed and validated to ensure AI models perform accurately, and continuous monitoring ensures alignment with business rules and compliance requirements. This strategy allows enterprises to modernize operations and benefit from AI capabilities while preserving existing investments in legacy infrastructure.

The deployment timeline for generative AI solutions varies depending on complexity, scale, and integration requirements. For a proof of concept (PoC) or minimum viable product (MVP), ThirdEye Data typically delivers results in 4–6 weeks, allowing rapid validation of business value with minimal investment. Full-scale deployment, including model fine-tuning, workflow integration, and governance setup, usually ranges from 3–6 months. Our approach emphasizes phased rollout, enabling enterprises to start capturing benefits early while continuously optimizing performance and integration. By balancing speed with quality, we ensure enterprises realize measurable ROI without compromising system stability or operational continuity.

The choice of deployment strategy depends on enterprise priorities such as cost, scalability, compliance, and latency requirements. Cloud deployment offers flexibility, scalability, and pay-per-use pricing, making it ideal for fast-moving projects. On-prem deployment ensures maximum data control and compliance, which is crucial for sensitive industries like finance or healthcare. Hybrid deployment blends both approaches, allowing enterprises to run critical workloads on-prem while leveraging the cloud for compute-intensive tasks. ThirdEye Data evaluates enterprise infrastructure, regulatory environment, and cost considerations to recommend a deployment strategy that maximizes both performance and ROI without disrupting operations.
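The decision rule described here can be expressed as a small sketch. It is a deliberate simplification that assumes only two inputs per workload, whether it handles sensitive data and whether it is compute-intensive. A real assessment would also weigh cost, latency, and regulatory detail, and the workload names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    sensitive_data: bool
    compute_intensive: bool


def place(workload: Workload) -> str:
    """Place a single workload: sensitive data stays on-prem, the rest goes to cloud."""
    return "on-prem" if workload.sensitive_data else "cloud"


def recommend(workloads: list[Workload]) -> str:
    """Recommend cloud, on-prem, or hybrid for a set of workloads."""
    needs_on_prem = any(w.sensitive_data for w in workloads)
    needs_cloud = any(
        w.compute_intensive and not w.sensitive_data for w in workloads
    )
    if needs_on_prem and needs_cloud:
        return "hybrid"
    if needs_on_prem:
        return "on-prem"
    return "cloud"


if __name__ == "__main__":
    portfolio = [
        Workload("patient-records-qa", sensitive_data=True, compute_intensive=False),
        Workload("marketing-image-generation", sensitive_data=False, compute_intensive=True),
    ]
    for w in portfolio:
        print(w.name, "->", place(w))
    print("Overall recommendation:", recommend(portfolio))
```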

Low Code/No Code platforms such as UiPath AI Center, Microsoft Power Platform, and H2O.ai are increasingly used to build AI workflows with minimal coding, making them accessible to business teams. These platforms allow rapid prototyping, automation of routine tasks, and integration of AI models into enterprise applications. However, for complex, high-precision, or domain-specific generative AI applications, Low Code/No Code approaches may need to be supplemented with custom development to ensure quality and relevance. ThirdEye Data leverages these platforms for rapid PoC deployment and business-user workflows, while simultaneously building fine-tuned AI models to deliver enterprise-grade performance, scalability, and measurable ROI.