Google Vision AI: Teaching Machines to See, the Future of Visual Intelligence

“When your app can see as well as compute, it opens doors to entirely new experiences, from recognizing products in a photo to reading text from images instantly. Google Vision AI is the bridge between pixels and meaning.”

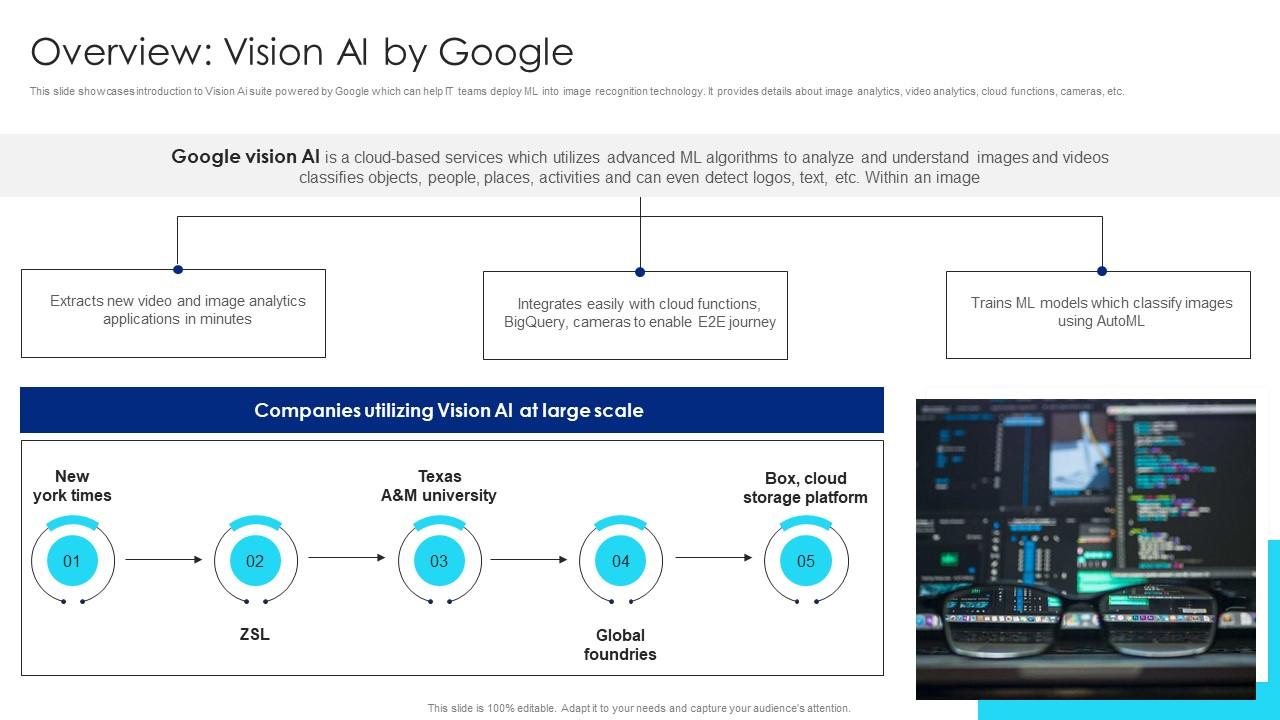

What is Google Vision AI?

Imagine you run an online marketplace. A customer uploads a photo of a sneaker they saw in a café and asks: “Find this shoe model for me.”

Behind the scenes, Google Vision AI can identify the shoe, match it to catalog images, find similar products, and return a list of candidates — all within seconds. The app “understood” the photo.

That’s the power of Google Vision AI: machine vision capabilities that let software interpret images and videos, not just store them. Instead of dealing with raw pixels, your systems get structured insights: labels, objects, faces, text, explicit content, landmarks, and more.

Originally released as the Cloud Vision API, Vision AI has evolved (and continues to evolve) into a richer platform. It’s now part of the broader Google/Vertex AI ecosystem, which also includes tools like Vertex AI Vision (for video and camera stream analysis).

In short:

Google Vision AI = tools + APIs + infrastructure that let applications see and “understand” visual inputs.

How Vision AI Works: The Pipeline & Connections

To make sense of Vision AI, let’s walk through its internal architecture and how its components connect in practice.

- Image / Video Ingestion

- Input can be static images (JPEG, PNG, TIFF) or video streams (camera feeds, video files).

- Vision AI supports ingestion from cloud storage, device uploads, or real-time streams under Vertex AI Vision.

- Preprocessing

- Resizing, normalization, color space transforms, compression handling.

- May apply filters or pre-checks (e.g. validate format, orientation).

- Model Invocation / Feature Extraction

- Google’s pre-trained models (or your custom ones) process the input.

- Various specialized models handle tasks like label detection, object detection, OCR (text detection), face detection, explicit content filtering, landmark detection, etc.

- Some models are multi-task or ensemble-based behind the scenes.

- Postprocessing & Metadata Enrichment

- Confidence scoring, bounding boxes, segmentation masks, text regions with recognized characters, and filtering of low-confidence results.

- Enrich results with labels, hierarchies, localization (for multilingual text), or links.

- Optional Customization / Fine-Tuning

- You can bring your own custom model or fine-tune on domain data (especially in Vertex AI Vision).

- Combine Google’s generic label models with domain-specific training to improve precision.

- Output / API Response

- The API returns structured data: labels with confidence scores, detected objects with bounding boxes, extracted text, classification of content, etc.

- The client app uses the output to trigger further logic (e.g. search, alert, filter, UI).

- Integration & Usage

- The output might feed into search (e.g. image → caption → embedding → semantic search).

- Or tie into RAG systems: use OCR text extraction, then retrieve more info.

- Or be part of downstream pipelines: analytics, moderation, auto-tagging.
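To make the request and response stages above concrete, here is a minimal sketch using only the Python standard library. It builds a JSON body in the shape the Cloud Vision REST method `images:annotate` accepts, then reads labels and objects out of a response. Authentication and the HTTP call itself are left out, and `sample_response` is made-up illustrative data, not real API output. The helper names (`build_annotate_request`, `to_pixel_box`, `summarize`) are hypothetical.

```python
import base64
import json

# Public REST endpoint for synchronous image annotation (shown for reference;
# this sketch does not send the request).
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_annotate_request(image_bytes, feature_types, max_results=10):
    """Build the JSON body for one image and one or more feature types."""
    return {
        "requests": [
            {
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [
                    {"type": t, "maxResults": max_results} for t in feature_types
                ],
            }
        ]
    }


def to_pixel_box(normalized_vertices, width, height):
    """Convert normalized vertices (0..1) to a (left, top, right, bottom) pixel box."""
    # Zero-valued coordinates can be missing from the JSON, so default to 0.0.
    xs = [v.get("x", 0.0) * width for v in normalized_vertices]
    ys = [v.get("y", 0.0) * height for v in normalized_vertices]
    return (round(min(xs)), round(min(ys)), round(max(xs)), round(max(ys)))


def summarize(response, min_score=0.5):
    """Keep only labels and objects whose score meets the threshold."""
    result = response["responses"][0]
    labels = [
        (a["description"], a["score"])
        for a in result.get("labelAnnotations", [])
        if a["score"] >= min_score
    ]
    objects = [
        (o["name"], o["score"])
        for o in result.get("localizedObjectAnnotations", [])
        if o["score"] >= min_score
    ]
    return {"labels": labels, "objects": objects}


# Illustrative response only: the values are invented for this example.
sample_vertices = [
    {"x": 0.25, "y": 0.5}, {"x": 0.75, "y": 0.5},
    {"x": 0.75, "y": 1.0}, {"x": 0.25, "y": 1.0},
]
sample_response = {
    "responses": [{
        "labelAnnotations": [
            {"description": "Sneakers", "score": 0.97},
            {"description": "Floor", "score": 0.41},
        ],
        "localizedObjectAnnotations": [
            {"name": "Shoe", "score": 0.91,
             "boundingPoly": {"normalizedVertices": sample_vertices}},
        ],
    }]
}

body = build_annotate_request(b"abc", ["LABEL_DETECTION", "OBJECT_LOCALIZATION"])
print(json.dumps(body))
print(summarize(sample_response))
print(to_pixel_box(sample_vertices, width=400, height=200))
```

In a real app, the body would be POSTed to the endpoint with valid credentials, and the score threshold would be tuned per use case rather than fixed at 0.5.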

In modern systems, Vision AI is often part of a vision + language pipeline. For example:

- Use Vision AI to extract text or objects from images.

- Encode those extracted elements into embeddings (via transformer models).

- Use a vector DB + search engine to match, cluster, retrieve.

Because Vision AI integrates with Google Cloud infrastructure (BigQuery, Cloud Storage, Vertex AI, IAM), it slots into end-to-end AI systems. The separation between “vision input” and “semantic understanding” becomes less distinct.
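The vision + language pipeline described above (extract, embed, search) can be sketched in a few lines. This is a toy version under stated assumptions: token counts stand in for transformer embeddings, a plain dictionary stands in for a vector DB, and the catalog entries and function names are hypothetical. A production system would swap in a real embedding model and vector store.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase token counts (a stand-in for a transformer model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query_text, index, top_k=3):
    """Return the ids of the most similar indexed items, best first."""
    query_vec = embed(query_text)
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


# Hypothetical catalog descriptions, indexed once up front.
catalog = {
    "sku-1": "red running sneaker with mesh upper",
    "sku-2": "brown leather office shoe",
    "sku-3": "ceramic coffee mug",
}
index = {doc_id: embed(text) for doc_id, text in catalog.items()}

# Pretend these labels came back from Vision AI for an uploaded photo.
vision_labels = ["Sneaker", "Red", "Footwear"]
print(search(" ".join(vision_labels), index))
```

The design point is the separation of stages: Vision AI turns pixels into text-like signals, and everything after that is ordinary text retrieval.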

Use Cases / Problem Statements Solved with Vision AI

Here are real-world scenarios where Vision AI becomes a differentiator:

- Image Search & Reverse Image Lookup

Let users upload or take a photo, then find visually or semantically similar items (e.g. fashion, décor).

→ Matches catalog images, classifies scenes, extracts features.

- OCR & Document Understanding

Extract text from images, scanned PDFs, receipts, invoices, business cards.

→ Must support multiple languages, layouts, handwriting.

- Content Moderation / Safe Content Filtering

Detect explicit or inappropriate content (nudity, violence) in user-uploaded media before showing it.

→ Helps platforms with user-generated images / videos.

- Object & Entity Detection

Recognize objects (cars, plants, machinery), logos, landmarks, or brand elements in images.

→ Retail, surveillance, AR/VR use cases.

- Face Detection & Analysis

Detect faces, facial landmarks, and estimated expressions, and optionally blur or anonymize faces for privacy.

- Automated Tagging / Asset Management

Bulk annotate image libraries, assign metadata and tags to images for search and organization. Resource management tools use this heavily.

- Healthcare & Medical Imaging (to some extent)

In domains that rely on images (X-rays, scans), Vision AI can help extract structure or flag anomalies, though specialized medical vision models are often needed.

- Smart Video & CCTV Analytics

Ingest video feeds for event detection, motion detection, occupancy, behavior recognition. Vertex AI Vision supports ingestion of real-time streams.

- Augmented Reality (AR) / Mixed Reality Applications

Recognize objects in camera view and overlay info (navigation, product details).

- Visual QA / Multimodal Agents

Agents that can take image + question input, reason about what’s in an image, and answer questions (e.g. “What’s the text on this sign?”).

Each use case yields business value: less manual work, better UX, automation, compliance, and monetization opportunities.
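As one example from the list above, content moderation usually comes down to a policy decision on top of the API output. The Cloud Vision SafeSearch feature reports a likelihood level per category rather than a yes/no answer. The sketch below, with a hypothetical `flagged_categories` helper and an invented sample annotation, shows one way to turn those levels into a block decision. The threshold and the choice of categories are assumptions to adjust for your platform.

```python
# Likelihood levels as reported by SafeSearch, ordered from least to most likely.
LIKELIHOOD_ORDER = [
    "UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY",
]


def flagged_categories(safe_search, threshold="LIKELY",
                       categories=("adult", "violence", "racy")):
    """Return the categories whose likelihood is at or above the threshold."""
    limit = LIKELIHOOD_ORDER.index(threshold)
    return [
        category
        for category in categories
        # Treat a missing category as UNKNOWN, which never meets a real threshold.
        if LIKELIHOOD_ORDER.index(safe_search.get(category, "UNKNOWN")) >= limit
    ]


# Illustrative annotation only: the values are invented for this example.
sample_safe_search = {
    "adult": "VERY_UNLIKELY",
    "spoof": "UNLIKELY",
    "medical": "UNLIKELY",
    "violence": "LIKELY",
    "racy": "POSSIBLE",
}

strict = flagged_categories(sample_safe_search, threshold="POSSIBLE")
default = flagged_categories(sample_safe_search)
print("block" if default else "allow", default, strict)
```

A stricter threshold such as `POSSIBLE` catches more borderline uploads at the cost of more false positives, so many platforms route that middle band to human review instead of blocking outright.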

Strengths & Advantages of Google Vision AI

What makes Google Vision AI stand out:

- Maturity & Scale

It’s built on years of Google’s research and infrastructure — reliable, battle-tested API.

Cloud Vision API is well documented and frequently updated.

- Comprehensive Feature Set

Labeling, object detection, OCR, explicit content filtering, landmark detection, and more are built in.

- Ease of Use / Integration

REST / gRPC APIs, client libraries in multiple languages.

You don’t need to train from scratch.

- Scalable & Managed

Google handles infrastructure, scaling, availability, versioning.

With Vertex AI Vision, it supports ingesting many video streams serverlessly.

- Custom Model Support

You can bring your own models or AutoML models and integrate with Vision workflows.

- Enterprise Security & Governance

Integration with IAM, private networking, data controls.

- Low-Cost Entry & Free Tier

Cloud Vision has free usage quotas.

- Regular Improvements / Updates

Google updates OCR models, adds new capabilities iteratively.

Limitations & Trade-Offs of Google Vision AI

Here are challenges, pitfalls, and trade-offs you should consider:

- Generic Models May Miss Domain Specifics

For specialized domains (medical imagery, satellite imagery, industrial defects), out-of-the-box models may not suffice. Custom models or fine-tuning are often required.

- Opaque Behavior / Black Box

It’s hard to fully explain why the model assigned a particular label, especially on edge-case images.

- Adversarial / Noise Sensitivity

Some academic studies show Vision APIs can be fooled by noise or adversarial perturbations.

- Latency & Cost at High Volume

For high-frequency video streams or large volumes of image requests, costs and latency can mount.

- Privacy / Data Leakage Risks

Handling user images requires careful compliance and privacy controls.

- Limited Offline / Edge Support

The API is cloud-based; real-time offline processing is limited (though Hybrid / on-device vision models exist separately).

- Versioning & Model Changes

Google occasionally deprecates and retires older legacy models, so you need to watch the release notes.
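The cost point above is easier to reason about with a quick back-of-the-envelope calculation. The sketch below assumes you sample frames from video and send each one to the image API, with every feature applied to an image counting as one billable unit. The free quota and per-1,000-unit prices are placeholders chosen for the arithmetic, not Google’s current rates, and the function names are hypothetical. Check the official pricing page before budgeting.

```python
def frames_per_month(cameras, sampled_fps, hours_per_day, days=30):
    """Number of frames sent for analysis if each camera is sampled at a fixed rate."""
    return int(cameras * sampled_fps * 3600 * hours_per_day * days)


def estimate_monthly_cost(images, feature_types, free_units, price_per_1000):
    """Estimate cost when each feature on each image is one billable unit."""
    total = 0.0
    for feature in feature_types:
        # Assumption: the free quota applies separately to each feature.
        billable = max(0, images - free_units)
        total += billable / 1000 * price_per_1000[feature]
    return round(total, 2)


# Placeholder prices (USD per 1,000 units), for illustration only.
PLACEHOLDER_PRICES = {"LABEL_DETECTION": 1.50, "TEXT_DETECTION": 1.50}

images = 101_000
cost = estimate_monthly_cost(
    images,
    ["LABEL_DETECTION", "TEXT_DETECTION"],
    free_units=1_000,
    price_per_1000=PLACEHOLDER_PRICES,
)
print(f"{images} images, 2 features: ${cost:.2f} per month")
print("frames from 2 cameras at 1 fps, 8 h/day:", frames_per_month(2, 1.0, 8))
```

The second figure shows why video is the expensive case: even modest sampling produces far more units than a typical image workload, so lowering the sampling rate or filtering frames before calling the API is often the first optimization.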

Alternatives to Google Vision AI

If Vision AI doesn’t fit your use case, these are alternatives:

- Open-Source Vision Models / Frameworks

E.g. Detectron2, YOLO, OpenCV, TensorFlow + custom architectures. Full control but you build & scale infrastructure.

- AutoML Vision

Google’s AutoML tools let you train custom vision models without deep ML expertise.

- Other Cloud Vision Services

AWS Rekognition, Azure Computer Vision, Alibaba Cloud Vision — each with their own APIs and trade-offs.

- Edge Vision / On-Device Models

Use mobile-compatible models (e.g. MobileNet, TFLite) to do inference on device, reducing latency and preserving privacy.

- Specialized Vision Platforms

In industrial or medical domains, you often use domain-specific vision solutions that include calibration, sensor fusion, etc.

Each alternative involves trade-offs in control, performance, cost, and ease-of-integration.

Upcoming Updates / Trends & Industry Insights of Google Vision AI

Here’s what’s happening in vision AI and where Google Vision may evolve:

- Vision + Generative AI / Multimodal Fusion

Systems merging image understanding with LLMs to answer visual questions, generate descriptions, or drive agents (e.g. Google Lens integration). Google is also integrating visual search into its AI Mode.

- Video & Real-Time Stream Processing

Vertex AI Vision already supports real-time video ingestion. Expect more tools for live video insight, event detection, edge-to-cloud streaming.

- Model Efficiency & On-device Vision

More lightweight, efficient vision models that can run on mobile, edge, low-power devices.

- Custom & Domain-Specific Vision Models

More plug-and-play fine-tuned models for industries like healthcare, retail, manufacturing.

- Explainable Vision & Interpretability

Tools to highlight which image regions contributed to a label or decision — improving trust and debugging.

- Vision-driven Agents

Vision-Language-Action models (VLAs) that can see, reason, and act (e.g. robotics) are emerging.

- Better Adversarial Robustness & Safety

As usage expands, ensuring model resilience to noise, input tampering, or manipulated imagery becomes crucial.

- Integration with Search & Retrieval

Vision AI extracting text or features, then feeding into embedding + search systems — making mixed image-text search seamless.


Frequently Asked Questions about Google Vision AI

Q1: Is Google Vision AI the same as Vertex AI Vision?

Not exactly. “Google Vision AI” often refers to the original Cloud Vision API, while Vertex AI Vision is a newer platform on Vertex AI focusing on video, image streams, app workflows, and deployment.

Q2: Can I bring my own vision model?

Yes. Vision AI and Vertex AI Vision support using custom models or importing AutoML / trained TensorFlow / PyTorch vision models.

Q3: How many images / videos can I process?

Vision AI is scalable. You pay per feature / unit applied (e.g. label detection, OCR). There’s a free quota.

Q4: Is the Vision API deprecated?

No — it’s actively maintained. Older legacy models are being phased out but replaced with updated models.

Q5: How accurate is OCR / text detection?

Generally very good, especially for clean printed text. Performance degrades with handwriting, blur, low resolution, obstructions.

Q6: Can it detect text in different languages?

Yes — Vision supports multilingual OCR and detects a wide variety of scripts.

Q7: What about privacy / data ownership?

Your images and data remain your own. Google’s models don’t “train” on your data (in standard usage). But check your region’s compliance, and use proper data controls.

Q8: Can it work offline?

The standard API is cloud-based. For offline or on-device vision, you need specialized models and frameworks separate from Vision API.
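On the OCR accuracy question (Q5), one practical safeguard is to flag low-confidence words for human review instead of trusting every character. Document text detection returns a `fullTextAnnotation` organized as pages, blocks, paragraphs, words, and symbols, with a confidence value on each word. The sketch below walks that hierarchy. The `low_confidence_words` helper, the 0.8 cutoff, and the sample annotation are illustrative assumptions, not real API output.

```python
def low_confidence_words(full_text_annotation, min_confidence=0.8):
    """Return (word, confidence) pairs for words below the confidence cutoff."""
    flagged = []
    for page in full_text_annotation.get("pages", []):
        for block in page.get("blocks", []):
            for paragraph in block.get("paragraphs", []):
                for word in paragraph.get("words", []):
                    # A word is stored as a list of symbols, one per character.
                    text = "".join(s.get("text", "") for s in word.get("symbols", []))
                    confidence = word.get("confidence", 0.0)
                    if confidence < min_confidence:
                        flagged.append((text, confidence))
    return flagged


def make_word(text, confidence):
    """Build a word entry in the same nested shape, for the example below."""
    return {"confidence": confidence, "symbols": [{"text": ch} for ch in text]}


# Illustrative annotation only: the values are invented for this example.
sample_annotation = {
    "pages": [{
        "blocks": [{
            "paragraphs": [{
                "words": [make_word("TOTAL", 0.99), make_word("12.50", 0.62)],
            }],
        }],
    }],
}

print(low_confidence_words(sample_annotation))
```

On a receipt or invoice, the flagged list is a natural input for a manual review queue, since amounts and dates are where blur and low resolution tend to hurt most.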


Third Eye Data’s Take on Google Vision AI

We see Google Vision AI / Vision APIs as part of our toolkit for vision-based solutions, particularly in Document Intelligence, anomaly detection, or image understanding contexts. At Third Eye Data, we may wrap Google Vision AI behind our models or pipelines when high-quality prebuilt APIs for OCR, image classification, or object detection offer time and cost savings. We blend such services with our custom ML models rather than relying solely on them.

It is a mature, powerful, and versatile tool for adding sight to your applications. Whether you want to analyze images, extract text, moderate content, detect objects, or build multimodal agents, Vision AI provides the building blocks. Integrated with Vertex AI Vision and Google’s ML stack, it fits cleanly into broader AI systems.

However, you’ll want to watch domain performance, cost, privacy, and versioning. When your use case demands higher accuracy or specialization, combining Vision output with embedding + search or custom models is often necessary.

If you’re ready to try:

- Enable the Vision API / Vertex AI Vision in your Google Cloud console.

- Upload sample images and test label detection, OCR, object detection via the API or UI.

- Build a micro-app: for example, upload images → OCR → lookup recognized text in a database.

- Extend by integrating search or downstream logic.

Once you have something working, you can scale it with video processing, real-time streams, or multimodal agents.
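The micro-app idea from the steps above (upload images → OCR → lookup recognized text in a database) can be prototyped without any cloud calls by starting from a saved response. In a text detection response, the first entry of `textAnnotations` holds the full detected text. The sketch below tokenizes it and looks up matching SKUs in an in-memory SQLite table. The `products` table, the SKU format, and the sample response are hypothetical, chosen only to show the flow.

```python
import re
import sqlite3


def recognized_tokens(response):
    """Extract uppercase alphanumeric tokens from the full detected text."""
    annotations = response["responses"][0].get("textAnnotations", [])
    if not annotations:
        return []
    # The first annotation carries the whole text; later ones are single words.
    full_text = annotations[0]["description"]
    return re.findall(r"[A-Z0-9-]+", full_text.upper())


def lookup_products(conn, tokens):
    """Return (sku, name) rows whose SKU appears among the recognized tokens."""
    if not tokens:
        return []
    placeholders = ",".join("?" for _ in tokens)
    query = f"SELECT sku, name FROM products WHERE sku IN ({placeholders}) ORDER BY sku"
    return conn.execute(query, tokens).fetchall()


# Hypothetical product table for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [("AB-1234", "Trail sneaker"), ("ZX-9", "Coffee mug")],
)

# Illustrative OCR response only: the text is invented for this example.
sample_ocr_response = {
    "responses": [{
        "textAnnotations": [
            {"description": "Model AB-1234\nSize 42"},
            {"description": "Model"},
        ],
    }]
}

tokens = recognized_tokens(sample_ocr_response)
print(tokens)
print(lookup_products(conn, tokens))
```

Exact-match lookup is the simplest possible choice here. Real OCR output contains misreads, so a next iteration would likely add fuzzy matching or the embedding-based search discussed earlier.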