Machinery Fault Detection with Vibration Data Anomaly Detection using Variational Auto Encoder

An Auto Encoder is an unsupervised deep learning model. An encoder network maps the data to a lower dimensional latent space, and a decoder tries to reconstruct the original data from that latent representation. With a Variational Auto Encoder (VAE), the encoder instead encodes a probability distribution in the latent space. The decoder samples from the latent distribution and decodes the sample to regenerate the original input. In this post we will find out how a VAE can be used to identify faulty machinery by finding anomalies in the vibration data. The implementation is based on PyTorch and is available in my Python package torvik. The code is available in the GitHub repo whakapai.

Variational Auto Encoder

With regular auto encoders, the latent space can be disjoint and non-continuous, because the only requirement is to minimize the regeneration error. A Variational Auto Encoder circumvents this problem by having the encoder encode a probability distribution instead of latent values directly. To be more specific, the encoder output is a mean vector and a standard deviation vector in the latent space, which together define a multivariate Gaussian distribution. The decoder samples from this latent probability distribution and decodes the sample to regenerate the input. With VAE, we get a more compact and centralized representation in the latent space.
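
The encode, sample, decode flow described above can be sketched in a few lines of PyTorch. This is a minimal illustration, not the torvik implementation: the class name `TinyVAE` and all layer sizes are made up for the example, and the encoder predicts the log-variance rather than the standard deviation directly, which is a common convention assumed here for numerical stability.

```python
import torch
from torch import nn


class TinyVAE(nn.Module):
    """Minimal VAE sketch. Sizes are illustrative, not taken from torvik."""

    def __init__(self, n_input=64, n_hidden=32, n_latent=4):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(n_input, n_hidden), nn.ReLU())
        # Two heads: mean and log-variance of the latent Gaussian (assumed convention).
        self.to_mu = nn.Linear(n_hidden, n_latent)
        self.to_logvar = nn.Linear(n_hidden, n_latent)
        self.decoder = nn.Sequential(
            nn.Linear(n_latent, n_hidden), nn.ReLU(), nn.Linear(n_hidden, n_input)
        )

    def forward(self, x):
        h = self.encoder(x)
        mu, logvar = self.to_mu(h), self.to_logvar(h)
        # Reparameterization: z = mu + sigma * eps with eps ~ N(0, I),
        # so gradients can flow through the sampling step.
        std = torch.exp(0.5 * logvar)
        z = mu + std * torch.randn_like(std)
        return self.decoder(z), mu, logvar


torch.manual_seed(0)
model = TinyVAE()
x = torch.randn(8, 64)  # a batch of 8 records with 64 samples each
recon, mu, logvar = model(x)
print(recon.shape, mu.shape, logvar.shape)
```

The reconstruction has the same shape as the input, while `mu` and `logvar` have one value per latent dimension for each record.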

The loss function of VAE has 2 parts. The first is the usual reconstruction term, the negative log likelihood of the input under the decoder output. The second term penalizes the latent probability distribution for being far from a standard normal distribution. KL divergence is used to measure the difference between the 2 distributions.
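
For reference, the two parts can be written out as follows. This is the standard VAE formulation, not copied from the torvik code. The symbols are introduced here for illustration: $q(z \mid x)$ is the encoder distribution with mean $\mu_j$ and standard deviation $\sigma_j$ in each of the $d$ latent dimensions, and $p(x \mid z)$ is the decoder likelihood.

$$
\mathcal{L}(x) = -\,\mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big] + D_{\mathrm{KL}}\big(q(z \mid x)\,\|\,\mathcal{N}(0, I)\big)
$$

$$
D_{\mathrm{KL}}\big(q(z \mid x)\,\|\,\mathcal{N}(0, I)\big) = -\frac{1}{2} \sum_{j=1}^{d} \left(1 + \log \sigma_j^2 - \mu_j^2 - \sigma_j^2\right)
$$

The closed form in the second line holds because both distributions are Gaussian and the encoder distribution is assumed to have a diagonal covariance.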

What does VAE have to do with anomaly detection, you might ask. The regeneration error between the original input and the decoder output tells us how faithfully the input has been reconstructed. For anomalous data, the regeneration error will be high.
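
As a rough sketch, a per-record regeneration error can be computed as below. The post does not say which error metric torvik uses, so mean squared error is an assumption here, and the function name `regen_error` and the toy arrays are hypothetical.

```python
import numpy as np


def regen_error(original, reconstructed):
    """Mean squared error per record (one row per record). Assumed metric."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    return np.mean((original - reconstructed) ** 2, axis=-1)


# Toy data: the first record is reconstructed exactly, the second is not.
x = np.array([[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]])
x_hat = np.array([[1.0, 2.0, 3.0], [2.0, 0.0, 3.0]])
errors = regen_error(x, x_hat)
print(errors)
```

A record the decoder reproduces well scores close to zero, and a poorly reconstructed record scores higher.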

Machinery Vibration Data Anomaly Detection

The vibration data is artificially created by combining multiple sinusoidal components along with some random noise. For anomalous data, some additional sinusoidal components are added.
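
The following NumPy sketch shows one way such data could be generated. It is not the generator used for this post: the function name `make_vibration`, the frequencies, amplitudes, noise level and sampling rate are all made up for illustration.

```python
import numpy as np


def make_vibration(n_samples=256, sample_rate=1000.0,
                   components=((50.0, 1.0), (120.0, 0.5)),
                   noise_std=0.1, extra_components=(), rng=None):
    """Sum of sinusoids plus Gaussian noise.

    components and extra_components are (frequency_hz, amplitude) pairs.
    All default values are illustrative assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    t = np.arange(n_samples) / sample_rate
    signal = np.zeros(n_samples)
    for freq, amp in tuple(components) + tuple(extra_components):
        signal += amp * np.sin(2 * np.pi * freq * t)
    return signal + rng.normal(0.0, noise_std, n_samples)


rng = np.random.default_rng(42)
normal = make_vibration(rng=rng)
# An anomalous record carries an additional sinusoidal component.
faulty = make_vibration(extra_components=((310.0, 0.8),), rng=rng)
print(normal.shape, faulty.shape)
```

The extra component adds energy to the signal at a frequency the normal records never contain, which is what the model later fails to reconstruct.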

The VAE is implemented as a no-code framework based on PyTorch. To train a new model, all that is required is a configuration file. Here is some console output while training the model.

    epoch [13-100], loss 177.027836
    epoch [14-100], loss 162.747952
    epoch [15-100], loss 151.778849
    epoch [16-100], loss 143.338356
    epoch [17-100], loss 127.745705
    epoch [18-100], loss 110.538446
    epoch [19-100], loss 96.696293
    epoch [20-100], loss 79.637648
    epoch [21-100], loss 71.642561
    epoch [22-100], loss 69.146497
    epoch [23-100], loss 67.764580
    epoch [24-100], loss 67.264786
    epoch [25-100], loss 66.748985
    epoch [26-100], loss 66.172057
    epoch [27-100], loss 65.879896

For testing, several records were generated with the last one being anomalous. Here are the regeneration errors. The last record, which is anomalous, has the maximum regeneration error.

    regen error 3.396191
    regen error 3.425475
    regen error 3.488790
    regen error 3.436164
    regen error 3.414837
    regen error 3.430545
    regen error 3.580210
    regen error 3.404906
    regen error 3.502626
    regen error 4.582457
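
To turn these errors into a decision, some threshold is needed. The sketch below uses the values printed above and flags any record whose error exceeds the mean plus three standard deviations of the normal records. That rule is an assumption for illustration, not something torvik provides. In practice the baseline would come from a separate set of known normal records rather than from the test records themselves.

```python
from statistics import mean, stdev

# Regeneration errors copied from the console output above.
errors = [3.396191, 3.425475, 3.488790, 3.436164, 3.414837,
          3.430545, 3.580210, 3.404906, 3.502626, 4.582457]

# Assumption: the first nine records are known to be normal and serve as baseline.
baseline = errors[:-1]
threshold = mean(baseline) + 3 * stdev(baseline)

flags = [e > threshold for e in errors]
for e, is_anomaly in zip(errors, flags):
    print(f"regen error {e:.6f}  {'ANOMALY' if is_anomaly else 'ok'}")
```

With this rule only the last record is flagged, which matches how the test data was constructed.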

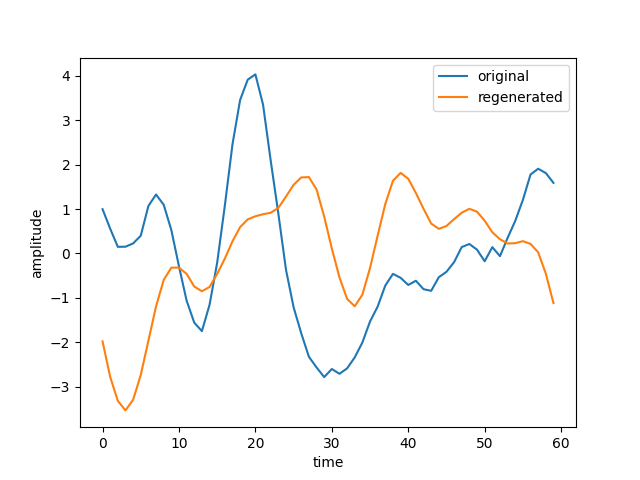

Here is a plot of the original and reconstructed data for the anomalous case. You will notice a significant difference between the two signals.

Original and reconstructed signal for the anomalous case

Please follow the tutorial document if you want to execute all the steps from model training to predicting regeneration error. Here is the driver code for the VAE model. Here is the configuration file for the VAE model. Here is the driver code for time series data generation.

I have done minimal tuning of the model. Here are some of the parameters that could be used for tuning the model and improving the model performance.

- Encoder output size (train.num.enc.output)

- Latent layer size (train.num.latent)

- More training data

- Batch size (train.batch.size)

- Learning rate (train.opt.learning.rate)

- Using batch normalization (train.enc.layer.data and train.dec.layer.data)

- Using dropout (train.enc.layer.data and train.dec.layer.data)

Wrapping Up

VAE is a generative model. Generative models generate realistic data and are generally used for data generation tasks. We have used VAE here for anomaly detection in time series data. In the future, I will extend my implementation to 2D data such as images.