And you would be right. It has been around for years and has proven itself as the “big boy on the block”.

But let’s take a look at the newcomer. It is being sold as a carefree Kafka competitor, which even surpasses Kafka (according to Microsoft). So let’s have an objective look at “the good, the bad and the ugly” of this solution and if what they are selling is really as good as they claim.

What is Azure Event Hubs ?

Well if you want the boring sales pitch, have a look here. Read it? Good, now let’s recap. Basically it is a direct competitor of Kafka but offered as a PAAS solution. If that is not enough you will also have a support contract to help you with all your worries and bugs.

Sounds great right? After working with this solution for a couple of months I have found there is actually a shadow side to this story. But first, let’s start on a positive note.

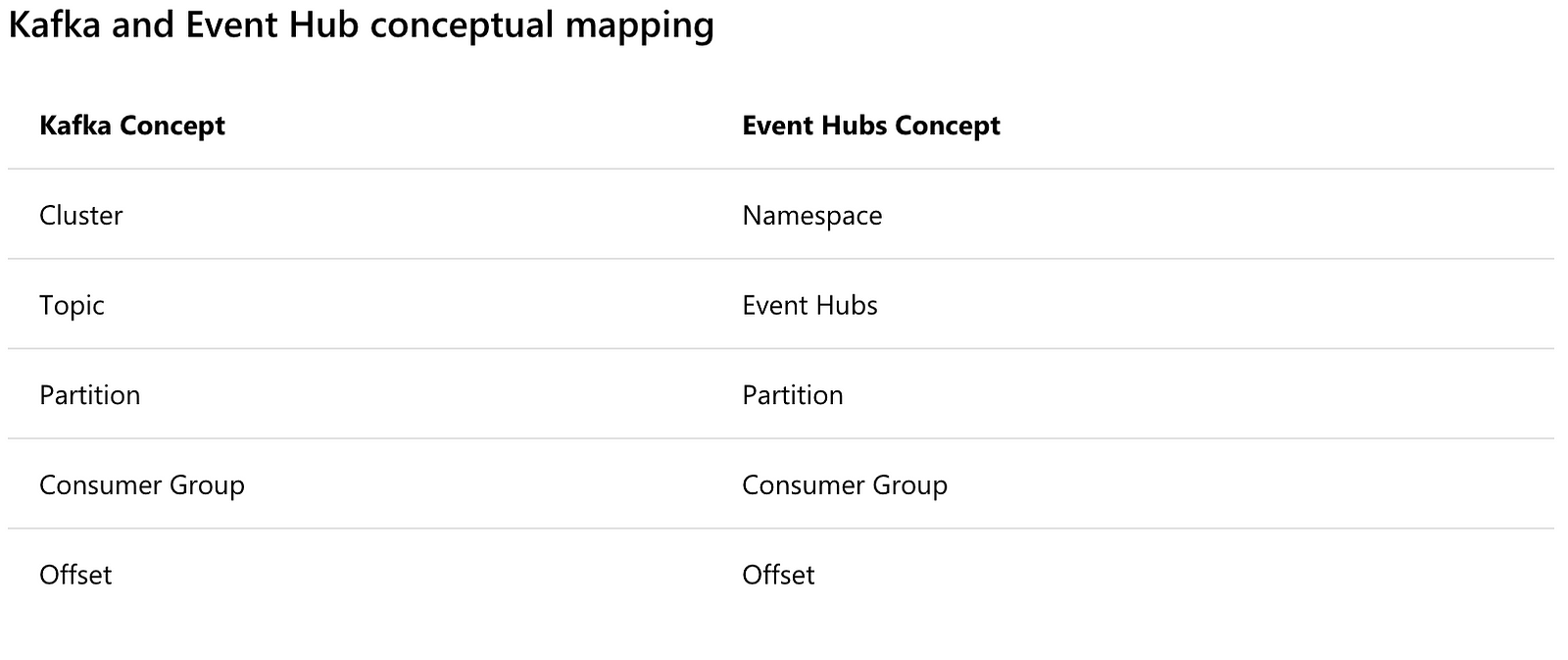

Vocabulary 101

Before we start praising this product, it is good to know the nomenclature used within the platform and how the terms relate to Kafka.

The good

Without further stalling let us dive into the good points of Event Hubs.

PAAS

Yes it is a fully managed PAAS solution. Setting it up from the portal requires only a couple of clicks. With it you get some great features:

- You can create an auto-inflate cluster, which basically means that the cluster scales up to your needs

- You can set up geo disaster recovery

- You can create a Kafka enabled cluster ( more on this later)

- You can choose with how many nodes you start in the cluster

Once you have created the cluster it is available through an FQDN. I must say I was impressed with what came out of the box.

On a side note, you can also create the cluster through terraform or ARM templates.

Replication

While replication in Kafka is handled through several parameters (either server wide or on topic level) it is something you can simply not forget to configure. The main reasons are performance and if your broker crashes, and you defined only a single partition, all your data is offline.

In Event Hubs this is handled for you in the background. Replication of “Event Hubs” partitions is 3 but you can not influence this figure at this point in time.

Security

This is a plus for all of you who spent their time doing this on Kafka. No messy kerberos, no certificates. Out of the box SSL enabled and fully integrated with AD. This means that you can choose which users have control over the cluster, while reading and writing is done through access policies. These policies are defined by the cluster administrator, yet during setup one SSL certificate is always present giving full control over the entire cluster.

The administrator(s) can generate these policies and distribute the keys to the correct persons in the organisations. If you think a key is compromised you can easily delete it and regenerate a new one.

So all those who need to argue with architects and cyber security, this is definitely a big plus.

Kafka enabled

This one might need some explanation. Until recently there was no compatibility with the Kafka clients and Event Hubs but in May 2018 this feature has been added in preview. Basically it means that your consumer and producer for Kafka now also work for Event Hubs. There are a couple of things you need to consider:

- So far only Kafka clients 1.X are supported

- You have to explicitly enable this feature during cluster creation. You can not do this afterwards.

While this is a great feature, it would be nice to see it enabled by default.

Capture mode

Instead of having a service reading your data and writing it to storage, Event Hubs comes with an integrated solution. If you create an “Event Hub” you can choose to where you want to offload the data. You have 2 options at this point in time: Azure Blob Storage or Azure Datalake. Files will be created on an interval specified by you (a size in MB or by time).

Note that this feature will only work with “Standard” created clusters, not a basic one.

The bad and the ugly

Well unfortunately it is not all sunshine in Microsoft land. Next is an overview of issues I encountered ( some of them solved at the time of writing) ranging from “shrug” to “screen smashing rage”.

Capture mode

We said this was a plus, well it also has a drawback. The directory structure is in a fixed format and can not be changed. So if you want all your files in 1 daily directory forget it. Given that they work with a pattern it would be more useful if they let the end user decide how the file structure looks like.

Quotas

Quotas, quotas everywhere… It seems Azure has a fixation on locking everything down with quotas. So basically you will run in the following limitations:

- Messages can’t be bigger than 256 KB

- Number of machines in a cluster is maximum 10

- Number of Event Hubs is limited to 10 per namespace

Well the list goes on and on, more info can be found here. From the support page it is unclear which quotas you will be able to extend or not, but worst case you loose a couple of days getting certain quotas increased.

Kafka enabled flag terraform

While an ARM template allows us to automate the cluster deployment, I was also looking into automatic deployment through terraform.

Unfortunately it wasn’t available which lead to the following series of tickets I had to create:

- https://github.com/Azure/azure-sdk-for-go/issues/2099

- https://github.com/terraform-providers/terraform-provider-azurerm/issues/1417

If both these tickets get resolved we can use terraform to create a Kafka enabled cluster.

Serialisation bug

This one has been fixed according to Microsoft in the week 30th of July. The problem occurred when producing messages through HTTP requests, as described here. The messages were posted successfully on the Event Hubs but we were unable to read the messages using Kafka client libraries. When we used the Event Hubs native libraries it worked fine.

While this was more of nuisance then a blocking issue, I still believe it was poor compatibility testing from Microsoft.

Spark performace

When we were trying to write data into Event Hubs using spark, we used the native spark drivers from Microsoft and found that writing never exceeded 3KB/s, which was … slow and frustrating. From 2nd of July 2018 this was fixed and throughput is better ( still not ideal). As an alternative I suggest using the Kafka libraries as they seem to get a good throughput (for the dataset I tested it went up to 1.3 MB/s)

Retention period

The retention per Event Hub is no longer than 7 days. I have heard that they will update this to 30 days in the future, still I believe they should leave it to the customer.

Fixed port

Event Hubs namespace listens only on port 9093 for the client libraries and port 443 if you produce messages through an HTTP client. While this has some advantages for Microsoft it also gives some disadvantages to customers. Other technologies let you change the port, why hardcode this port ?

“Exactly one time” — semtantic

This feature has been introduced in Kafka 0.11 and has been described at length by confluence. While it is complex to implement, it gives the end user so much more flexibility when joining streams and building real-time applications. So hopefully we will get this feature soon.

Kafka connect and Kafka streams

This one is easy, it is not supported. While there are plans to support Kafka Streams there is no mention of Kafka Connect. While it is clear that these 2 frameworks have leveraged the Kafka platform to it’s popularity today, I am uncertain why Microsoft didn’t support this from day one.

Conclusion

While it is obvious that Microsoft is trying to challenge the dominance of Apache Kafka (or Confluent) they dropped the ball on this one. The platform is riddled with bugs and a lot of features are still missing.

In 2015 Microsoft released an article comparing both Eventhub and Kafka, if you want you can find it here, yet most of the info is inaccurate, out of date or twisted.

Time to update or remove it Microsoft, this is just shooting yourself in the foot. To give a couple of examples:

- Throttling: Microsoft states it is a good idea to throttle messages and that Kafka does not support this. Yet you have quotas to throttle network and clients for Kafka. Microsoft stating that you can abuse Kafka is simply untrue. If you enable SSL on your cluster, abuse becomes impossible.

- Security: They state there is no security, yet Kafka supports SSL and Kerberos

- …

Well if you know both technologies you will find more inaccurate statements.

So should we toss it ? Maybe not. It really brings a couple of features to the table that are better or easier to do than with Kafka but for me the maturity is the biggest issue.

If you really rely on heavy duty streaming and complex operations on those streams I would still go with Apacha Kafka. It might be a bit more complex to set up but you get so much more in return.

As always I hoped you enjoyed reading, next time I will try to do a technical explanation on how to do a setup and explain how you can write some code against this platform.

Disclaimer

I wrote this article from a personal view while trying to be objective as possible. These thoughts might not necessary be those of my firm.