Vertex AI Studio: The Playground for Building & Deploying Generative AI

“From prompt to production — design, test, tune, and ship generative AI in one place.”

In a world flooded with content, applications, and data, what separates a “cool AI demo” from a scalable, trustworthy AI product? The ability to prototype fast, iterate safely, integrate with real systems, and deploy with governance. Vertex AI Studio by Google Cloud is meant to be that bridge — a managed workspace for generative AI workflows, built for professionals and teams.

In this post, we’ll dive deep into Vertex AI Studio:

- What it is (and isn’t)

- How it connects with the larger Vertex/Google AI ecosystem

- Real use cases and problems it solves

- Advantages and trade-offs

- Alternatives you should know

- Industry trends & what’s next

- Project examples & references

- FAQs

- Conclusion and next steps

Let’s go.

What Is Vertex AI Studio?

Vertex AI Studio is Google’s managed console tool inside the Vertex AI platform for prototyping, tuning, and deploying generative AI models (text, images, code, multimodal) using foundation models like Gemini, open models, or your own tuned models.

In simpler terms: it’s a workspace where you can design prompts, test outputs, tune models, and deploy them as part of applications, without wrestling with infra, GPUs, or low-level APIs.

Key capabilities include:

- Access to Google’s foundation models + third-party/open models (Model Garden) in one interface

- Prompt design / prompt engineering tools (chat interface, multiple configurations)

- Fine-tuning / customization (adapter tuning, style tuning, RLHF) using your data

- Connecting to real data & actions via Vertex AI Extensions (datastores, connectors)

- Integration with full ML lifecycle: deployment, governance, scalability under Vertex AI’s umbrella

- Enterprise-level governance, privacy, data controls (your data doesn’t leak into base models)

It’s part of the Vertex AI ecosystem — coexisting with Vertex AI pipelines, Agent Builder, Vertex AI Search, etc.
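To make the tuning capability above a little more concrete, here is a minimal sketch of turning prompt/response pairs into a JSON Lines training file. The record layout (a `contents` list of `user` and `model` turns, each with `parts`) reflects the chat-style format used for supervised tuning of Gemini models as far as we know; treat it as an assumption and confirm it against the current Vertex AI tuning documentation. The helper names and the sample pairs are hypothetical.

```python
import json


def to_tuning_record(user_text: str, model_text: str) -> dict:
    """Wrap one prompt/response pair in a chat-style record (assumed layout)."""
    return {
        "contents": [
            {"role": "user", "parts": [{"text": user_text}]},
            {"role": "model", "parts": [{"text": model_text}]},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize pairs as JSON Lines: one JSON record per line."""
    return "\n".join(
        json.dumps(to_tuning_record(user, model), ensure_ascii=False)
        for user, model in pairs
    )


# Hypothetical in-house examples; real tuning jobs need far more data.
EXAMPLE_PAIRS = [
    ("Explain 'force majeure' for a customer.", "It covers events outside either party's control."),
    ("Rewrite in our brand voice: 'Order delayed.'", "Your order is taking a little longer than planned."),
]

if __name__ == "__main__":
    print(to_jsonl(EXAMPLE_PAIRS))
```

A file like this would typically be uploaded to storage and referenced when starting a tuning job; that step is left out here because it depends on your project setup.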

How It’s Connected

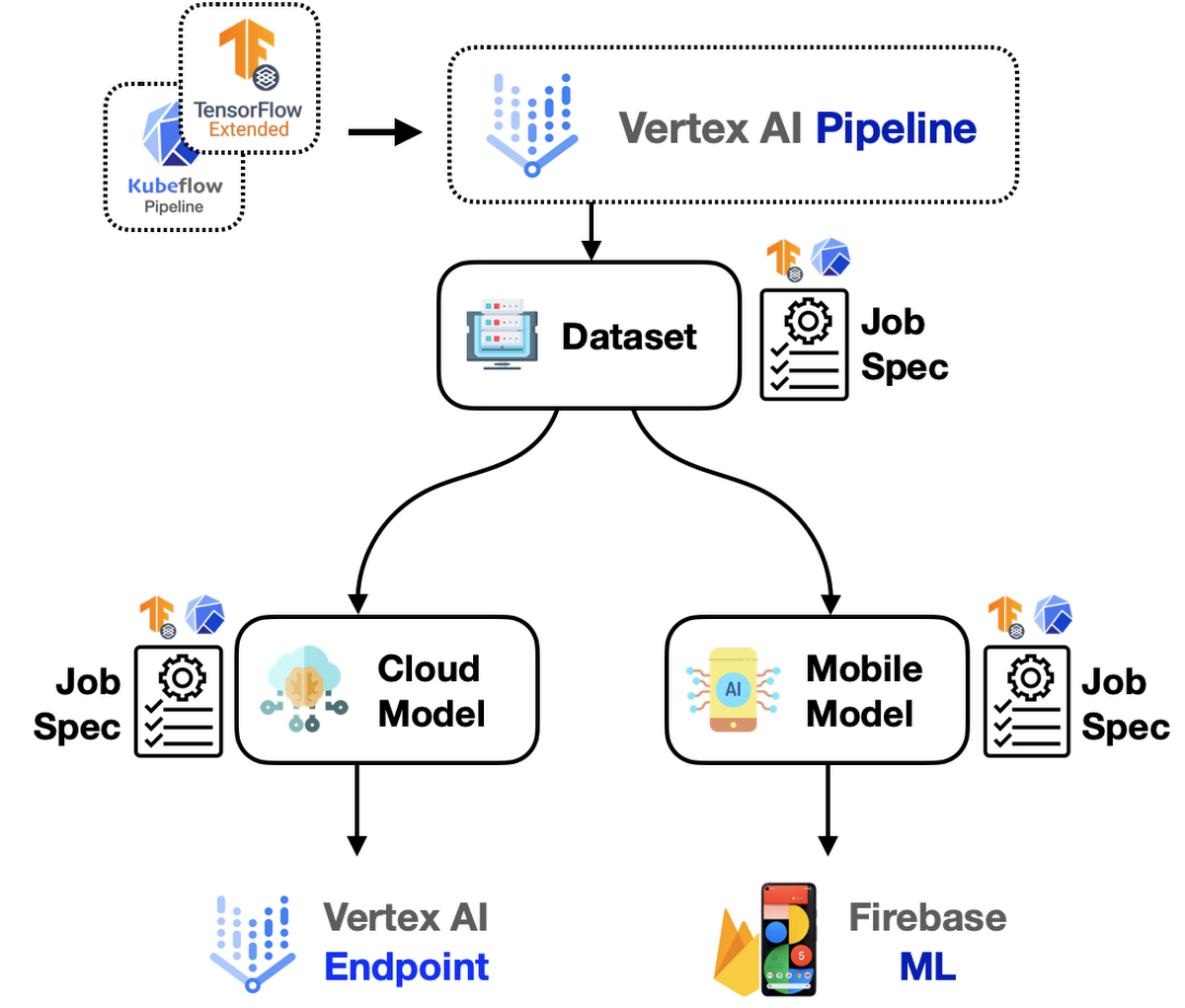

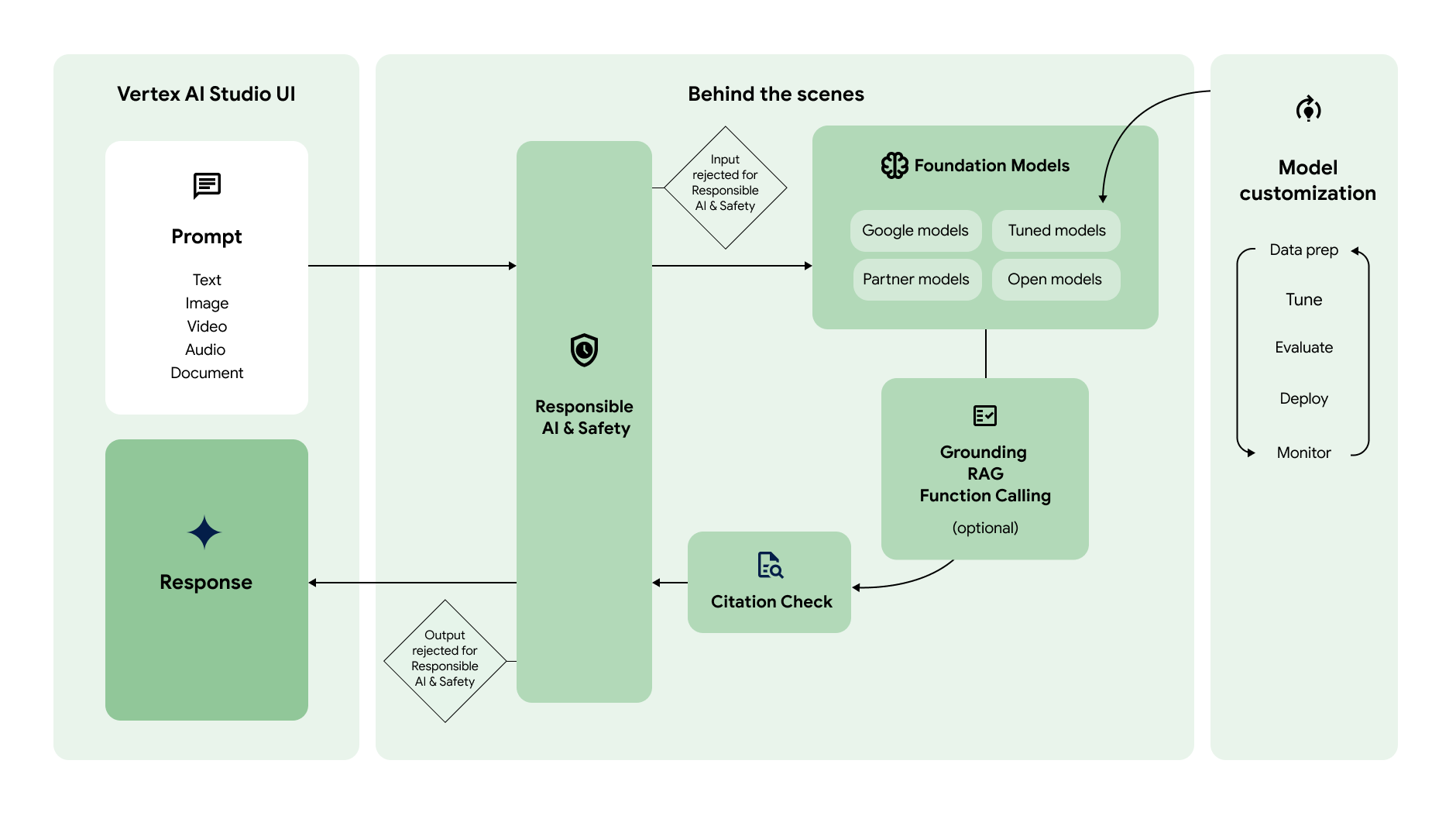

Understanding Vertex AI Studio means seeing how its pieces interplay and link to the larger Vertex AI fabric. Here’s a conceptual architecture and workflow:

- Model Access & Model Garden

- Studio gives you access to Google’s foundation models (Gemini, open models) and third-party models via the Model Garden.

- You pick or switch between models to see which fits your task best.

- Prompt & Prototype Stage

- In Studio you can type your prompt (text, image, code) in a UI.

- Experiment with temperature, top-k/top-p, system messages, and example prompt templates.

- You see live output, tweak, iterate. (Think of a “playground”)

- Customization / Tuning

- Once a baseline prompt works, you can tune the model using your data.

- Supported methods include adapter tuning, style tuning, RLHF (Reinforcement Learning from Human Feedback). (This is how you make the model “fit your domain”)

- The tuning produces a variant model that lives in your environment (doesn’t leak back to the base model).

- Extension & Data Integration

- Vertex AI Extensions (in or near Studio) let you define connectors — bringing in your internal data sources, APIs, or third-party tools.

- This enables agents to act on your data, not just generate free text.

- Testing & Evaluation

- Studio integrates with Gen AI evaluation services: you can run test suites, human labeling, benchmark responses, compare models.

- This gives feedback loops to refine prompts and tuning.

- Deployment / Endpoint / Application Integration

- Once ready, you can deploy your prompt/model combo as an AI service using Vertex AI managed endpoints (auto-scaling, monitoring).

- The same custom models can then be called from apps, chatbots, agents, etc.

- Security & Governance

- Vertex AI Studio sits within Google’s security model: you control data permissions, region, data isolation.

- The base models are immutable; your tuned versions don’t leak back.

- Governance, versioning, monitoring, audits are supported by Vertex AI’s infra.

Hence, Studio is the front-end workspace and glue that joins prompt creation, tuning, evaluation, and deployment, all under Vertex AI’s managed infra and tools.
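The temperature and top-k/top-p knobs from the prototyping stage are easier to reason about with a toy example. The sketch below applies them to a made-up set of token scores in plain Python. It is not how Studio or any Gemini model is implemented: the function names, the logits, and the order of operations (temperature, then top-k, then top-p) are assumptions chosen for illustration, and real systems may order these steps differently.

```python
import math
import random


def sampling_distribution(logits, temperature=1.0, top_k=None, top_p=None):
    """Return {token: probability} after temperature, top-k, then top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature: divide scores before softmax; lower values sharpen the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(
        ((tok, e / total) for tok, e in exps.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    # Top-k: keep only the k most likely tokens.
    if top_k is not None:
        ranked = ranked[:top_k]
    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, p in ranked:
            kept.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        ranked = kept
    # Renormalize so the surviving probabilities sum to 1.
    norm = sum(p for _, p in ranked)
    return {tok: p / norm for tok, p in ranked}


def sample(distribution, rng):
    """Draw one token according to the filtered distribution."""
    tokens = list(distribution)
    weights = [distribution[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Made-up scores for four candidate next tokens.
TOY_LOGITS = {"cat": 2.0, "dog": 1.0, "fish": 0.0, "rock": -1.0}

if __name__ == "__main__":
    rng = random.Random(0)
    for temp in (0.5, 1.0, 2.0):
        dist = sampling_distribution(TOY_LOGITS, temperature=temp, top_p=0.9)
        print(temp, {t: round(p, 3) for t, p in dist.items()}, sample(dist, rng))
```

Lower temperatures concentrate probability on the top token, while top-k and top-p trim the unlikely tail before sampling, which is why small changes to these settings can noticeably change how varied the outputs feel.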

Use Cases / Problem Statements Addressed

Let’s ground this in tangible examples — where Studio shines and the problems it solves.

- Rapid Prototyping of Generative Features

Want to test “auto summary of legal docs,” “image captioning,” or “chat with your internal data”? Studio gives you a fast sandbox, with no infra setup required.

- Customized Domain Agents

Train a variant of Gemini that better speaks your company’s legal terms, industry jargon, or brand voice via tuning. Use Studio as the tuning layer.

- Composable AI in Products

Use Studio to build parts of your app: a content generator, chatbot, smart assistant, or image generator. Test in the UI, then deploy.

- Prompts + Evaluation & Monitoring Loop

You can track which prompts succeed/fail, log metrics, refine. Without Studio, you’d manually wire this.

- Foundation Model Layer Abstraction

Instead of dealing with multiple APIs and model versions, Studio provides a unified interface to switch models or test multiple backends.

- Safe Experimentation

As a managed environment, Studio allows teams to experiment without risking production stability.

- Multimodal Generative Apps

Because Studio supports text, image, code, video prompt modalities (depending on model), you can prototype apps that use combinations (e.g. describe image + ask question).

- AI for Internal Tools

For content teams, marketing, legal: they can use Studio to generate drafts, check policies, generate reports, etc.

In all, it helps you go from “I wonder if AI can do that” to “AI feature is live in my app” faster and safer.
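The prompt evaluation loop mentioned above can be sketched in a few lines. This is a deliberately simple stand-in, not Studio’s evaluation service: `fake_model` returns canned text in place of a real model call, and `EvalCase`, `evaluate`, and the substring check are hypothetical names and logic chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    must_contain: str  # simple pass criterion: expected substring


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; returns canned text (hypothetical)."""
    canned = {
        "Summarize: refund policy": "Refunds are issued within 14 days.",
        "Summarize: shipping policy": "Orders ship in 2 days.",
    }
    return canned.get(prompt, "")


def evaluate(model: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through the model and report which prompts fail."""
    failures = []
    for case in cases:
        output = model(case.prompt)
        if case.must_contain.lower() not in output.lower():
            failures.append(case.prompt)
    passed = len(cases) - len(failures)
    return {
        "passed": passed,
        "failed": len(failures),
        "pass_rate": passed / len(cases) if cases else 0.0,
        "failures": failures,
    }


if __name__ == "__main__":
    cases = [
        EvalCase("Summarize: refund policy", "refund"),
        EvalCase("Summarize: shipping policy", "delivery"),
    ]
    print(evaluate(fake_model, cases))
```

Swapping `fake_model` for a function that calls a deployed model would turn this into a basic regression check that can run whenever a prompt or tuned model changes.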

What Makes It Strong

Here are the advantages that make Vertex AI Studio compelling:

- No Infrastructure Overhead

You don’t need to manage GPUs, clusters, or deployment pipelines to just prototype.

- Unified Workspace

A single interface for prompt testing, tuning, data integration, deployment. This reduces context switching and errors.

- Access to Google Foundation Models

You can experiment with high-end models (Gemini, open models) without wiring separate APIs.

- Safe Model Customization

Tuning/customization is isolated: your data doesn’t leak into the base model, and base model stays intact.

- Seamless Transition to Production

Prototype in Studio, then deploy via Vertex endpoints, leveraging the same model and prompt configuration.

- Integrated Tools & Data Connectors

No separate glue code to talk to your internal DBs, APIs; connectors reduce friction.

- Evaluation & Metrics Built-In

Helps you measure performance, bias, failure modes, which is crucial when moving to production.

- Enterprise-Grade Security & Governance

Built-in permissions, VPC controls, region selection, data sovereignty control.

- Model Garden & Flexibility

You can try different foundation models, open weights, or custom models from the same workspace.

- Fast Iteration & Productivity

Engineers, product teams, non-engineers alike can prototype features without full ML pipeline involvement.

Cons / Trade-Offs & Challenges of Vertex AI Studio

No tool is perfect — here’s where Studio may not (yet) shine, or where you should be cautious:

- Less Low-Level Control

Because it’s managed, you may not have access to deep internals: customizing training loops, memory caching, advanced model internals can be limited.

- Cost at Scale

Prototyping is cheap, but tuning, large prompt usage, and deployment can get expensive if mismanaged.

- Model Latency / Throughput Constraints

For high-volume apps, latency might be non-negligible unless optimized or caches used.

- Dependency & Lock-In

Heavy use means you’re tied into Google’s model stack, connectors, and APIs; migrating later can be nontrivial.

- Feature Maturity

Some advanced features (exotic prompt pipelines, exotic connectors, edge execution) may be in preview or limited.

- Domain-Specific Performance Gaps

Generic foundation models may underperform for niche domains unless well tuned or complemented with retrieval.

- Learning Curve

While it abstracts away infra, prompt engineering, tuning, and debugging generative errors is still complex.

- Data Privacy & Governance Risks

You must properly configure permissions; inadvertently exposing internal data is a risk.
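The cost and caching points above lend themselves to a quick back-of-the-envelope estimate. The sketch below is plain arithmetic, not a pricing tool: the per-million-token prices are invented placeholders, and it assumes that a cache hit costs nothing, which will not hold for every setup. Check current pricing before relying on any number it produces.

```python
def monthly_cost_usd(
    requests_per_month: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
    cache_hit_rate: float = 0.0,
) -> float:
    """Estimate monthly spend; cached responses are assumed to be free."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    # Only requests that miss the cache reach the model and get billed.
    billed_requests = requests_per_month * (1.0 - cache_hit_rate)
    cost_per_request = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000
    return billed_requests * cost_per_request


if __name__ == "__main__":
    # Hypothetical workload and prices, for illustration only.
    workload = dict(
        requests_per_month=1_000_000,
        input_tokens=1_000,
        output_tokens=200,
        input_price_per_million=0.50,
        output_price_per_million=2.00,
    )
    print(f"no cache:  ${monthly_cost_usd(**workload):,.2f}")
    print(f"50% cache: ${monthly_cost_usd(**workload, cache_hit_rate=0.5):,.2f}")
```

With these placeholder numbers the estimate comes to about $900 a month without caching and about $450 with a 50% hit rate, which shows why prompt length and caching are worth tracking before traffic grows.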

Alternatives to Vertex AI Studio

If Vertex AI Studio doesn’t fit your situation, here are alternative approaches:

- Standalone Prompt + Experimentation Tools

Tools like LangSmith, PromptLayer, or Prompt flow (some open source, some hosted) for prompt experimentation, paired with custom infra for deployments.

- Provider-Specific Playgrounds / Consoles

OpenAI Playground or the Anthropic Console (Workbench) for prompt testing, but without the same enterprise integration.

- Custom Tooling + ML Platform

Build your own prompt/UI/test environment over your ML infra (e.g. Jupyter + Model APIs + dashboards).

- Hybrid Approaches

Use Studio for prototyping, then export prompt logic and switch to custom runtime.

- Other Generative AI Platforms

Azure AI Studio, Amazon Bedrock + tools, etc. (depending on cloud alignment)

Each alternative has trade-offs between control, ease, features, and integration.

Upcoming Updates / Industry Insights for Vertex AI Studio

Here’s what’s evolving or expected around Vertex AI Studio / generative AI platforms:

- Deeper Gemini Integration

Studio is likely to support newer Gemini model versions out-of-the-box, adding capabilities and modalities.

- Expanded Extension / Connector Ecosystem

More built-in connectors (to CRMs, ERP, data warehouses, SaaS tools), making AI features easier to plug in to enterprises.

- Better Prompt Pipelines & Chains

Advanced prompt orchestration (conditional flows, multi-step pipelines) integrated into Studio UI.

- Edge & On-Device Variants

Studio may eventually allow you to push tuned models to edge devices (for offline / low-latency use).

- Auto-Tuning & Suggestive Prompting

Tools that suggest prompt improvements, parameter tuning, fallback strategies.

- Collaborative Workflows & Versioning

Support for multiple users, prompt version control, review flows, branching experiments.

- Safety / Guardrail Layers

Built-in filters, bias detection, hallucination detection, fallback controls in the UI.

- Multimodal Expansion

More advanced support for video, audio, 3D embeddings, cross-modal apps.

- Cross-Cloud / Multi-Model Interoperability

Ability to use alternative model providers (Mistral, etc.) through Studio integration. (Google recently announced integration of Mistral’s Codestral into Vertex)

Project References & Tutorials

Frequently Asked Questions about Vertex AI Studio

Q1: Is Vertex AI Studio only for prototyping or can it support production workloads?

A: It’s designed for both prototyping and smooth transition to production. You can deploy tuned prompts/models via Vertex AI endpoints.

Q2: Does my data get used to train Google’s models?

A: No. When you tune models with your data, the base model remains unchanged and your data remains private.

Q3: Can I bring my own model into Studio (e.g. an open model)?

A: Yes. Studio supports open models and third-party models from its Model Garden, alongside your own tuned models.

Q4: Does it support multimodal inputs (images, video, code)?

A: Yes. Studio allows prompts using text, images, code, and video, depending on model capabilities.

Q5: What kind of tuning does Studio support?

A: Techniques like adapter tuning, style/subject tuning, and RLHF are supported.

Q6: Can I export code or integrate it in my own app?

A: Yes. You can often export the prompt and its configuration as code, or call the models via APIs, which is how you move from the UI into your own app.

Q7: What are the region / availability constraints?

A: As with any Google Cloud service, availability depends on supported regions for generative AI and model deployments. Always check in your console.

Q8: Is Vertex AI Studio the same as Google AI Studio?

A: No, they differ. Google AI Studio is more lightweight, aimed at prompt prototyping and ease-of-use, while Vertex AI Studio is enterprise-grade, integrated with ML ops, governance, and deployment.
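As a rough illustration of Q6, this is what calling a model from your own code might look like once a prompt works in the UI. It is a sketch under assumptions: it uses the `google-genai` Python SDK in Vertex AI mode, the project ID, region, and model name in the usage comment are placeholders, and `build_request` and `generate` are hypothetical helper names. The SDK is imported inside `generate` so the request-building part can be read and tested without credentials.

```python
def build_request(prompt, system_instruction, temperature=0.2, top_p=0.95, top_k=40):
    """Collect the prompt and sampling settings you settled on in the UI."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    return {
        "contents": prompt,
        "config": {
            "system_instruction": system_instruction,
            "temperature": temperature,
            "top_p": top_p,
            "top_k": top_k,
        },
    }


def generate(request, project, location, model):
    """Send the request through the google-genai SDK (needs credentials and network)."""
    # Imported lazily so this file loads even where the SDK is not installed.
    from google import genai
    from google.genai import types

    client = genai.Client(vertexai=True, project=project, location=location)
    response = client.models.generate_content(
        model=model,
        contents=request["contents"],
        config=types.GenerateContentConfig(**request["config"]),
    )
    return response.text


# Usage (placeholders; replace with your own project, region, and model):
# request = build_request("Summarize our refund policy.", "Answer in two sentences.")
# print(generate(request, "my-project-id", "us-central1", "gemini-2.0-flash"))
```

Keeping the settings in a plain dictionary makes it easy to version them alongside the prompt text and to reuse them across environments.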

Third Eye Data’s Take on Vertex AI Studio

We view Vertex AI Studio (or equivalent visual interfaces) as a potential tool that simplifies AI development workflows. At Third Eye Data, we may use or evaluate Studio-like environments for rapid prototyping, model exploration, and experimentation. However, our public emphasis remains on end-to-end AI solutions, rather than promoting particular GCP UI/Studio features.

Vertex AI Studio is not just another “playground.” It’s a full-featured workspace for generative AI professionals and teams. It lets you:

- Prototype quickly

- Tune models safely

- Hook into real data & actions

- Deploy with governance and scale

In doing so, it reduces friction between idea and production and helps you build trustworthy AI features rather than one-off demos.

What to do next:

- Sign up & explore Studio in your Google Cloud console

- Take the “Introduction to Vertex AI Studio” course (Skills Boost) to get hands-on.

- Pick a domain you care about (e.g. your domain’s jargon) and build a prompt + tuning experiment.

- Deploy that prompt as an endpoint in your app or chatbot and measure performance.

- As you grow, explore more advanced workflows: evaluation, versioning, connectors, multimodal experiments, or cross-agent flows.