A Comprehensive Guide to Latent Dirichlet Allocation

What is Latent Dirichlet Allocation or LDA?

Latent Dirichlet Allocation (LDA) is a generative probabilistic model used primarily for topic modeling in natural language processing (NLP). It assumes that each document in a corpus is a mixture of multiple latent topics, and that each topic is a probability distribution over words.

LDA enables the extraction of thematic structure from unstructured text data, providing insights into the hidden topics within a collection of documents.

How Does Latent Dirichlet Allocation Work?

Core Assumptions:

- Each document is a mixture of multiple topics.

- Each topic is a mixture of words with certain probabilities.

- The generative process places Dirichlet priors on both kinds of mixture; small prior values favor sparse mixtures, so each document tends to use few topics and each topic tends to concentrate on few words.

Generative Process:

First, for each topic, sample a word distribution from a Dirichlet distribution with parameter β.

Then, for each document in the corpus:

- Choose a distribution of topics, sampled from a Dirichlet distribution with parameter α.

- For each word in the document:

  - Sample a topic based on the document’s topic distribution.

  - Sample a word from the chosen topic’s word distribution.

Parameters:

- α: Hyperparameter controlling the distribution of topics per document.

- β: Hyperparameter controlling the distribution of words per topic.

The algorithm seeks to estimate two key probabilities:

- The topic distribution for each document.

- The word distribution for each topic.
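To make the generative story concrete, here is a minimal NumPy sketch that samples a toy corpus from it. It assumes symmetric priors (every entry of α equal, likewise for β), a fixed document length, and a made-up six-word vocabulary; the function name `generate_corpus` is illustrative, not part of any library. Fitting LDA runs this story in reverse, inferring θ and ϕ from the observed words.

```python
import numpy as np


def generate_corpus(num_docs, doc_length, vocab, num_topics, alpha, beta, seed=0):
    """Sample a toy corpus by following LDA's generative process.

    alpha and beta are symmetric Dirichlet concentration parameters
    (a simplifying assumption; LDA also allows asymmetric priors).
    """
    rng = np.random.default_rng(seed)
    V = len(vocab)
    # One word distribution phi_k per topic, drawn from Dir(beta): shape (K, V).
    phi = rng.dirichlet(np.full(V, beta), size=num_topics)
    docs, thetas = [], []
    for _ in range(num_docs):
        # Topic mixture theta for this document, drawn from Dir(alpha): shape (K,).
        theta = rng.dirichlet(np.full(num_topics, alpha))
        words = []
        for _ in range(doc_length):
            z = rng.choice(num_topics, p=theta)  # topic for this word position
            w = rng.choice(V, p=phi[z])          # word drawn from topic z
            words.append(vocab[w])
        docs.append(words)
        thetas.append(theta)
    return docs, np.array(thetas), phi


vocab = ["machine", "learning", "intelligence", "natural", "language", "processing"]
docs, thetas, phi = generate_corpus(num_docs=3, doc_length=8, vocab=vocab,
                                    num_topics=2, alpha=0.5, beta=0.1)
for words, theta in zip(docs, thetas):
    print(np.round(theta, 2), " ".join(words))
```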

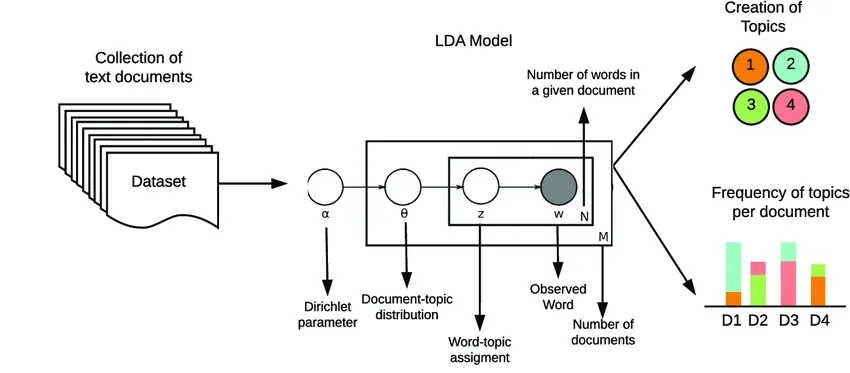

LDA Process Flow

Image Source: Medium

List of Symbols Used in LDA and Their Meanings

Model Parameters

α (alpha):

- Represents the Dirichlet prior for the distribution of topics in a document.

- Controls the sparsity of topic proportions in documents.

- Smaller α → fewer topics per document; larger α → more evenly distributed topics.

β (beta):

- Represents the Dirichlet prior for the distribution of words in a topic.

- Controls the sparsity of word distributions in topics.

- Smaller β → fewer words dominate each topic; larger β → words more evenly distributed.
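The effect of α can be checked empirically. This sketch uses NumPy, with K = 10 topics and α values chosen arbitrarily for illustration. It draws many topic vectors θ ~ Dir(α) and measures how concentrated they are:

```python
import numpy as np

rng = np.random.default_rng(42)
K = 10  # number of topics (arbitrary for this illustration)

results = {}
for alpha in (0.1, 1.0, 10.0):
    # 1,000 document-topic vectors theta ~ Dir(alpha, ..., alpha)
    thetas = rng.dirichlet(np.full(K, alpha), size=1000)
    # Average weight of each document's single largest topic
    mean_max = thetas.max(axis=1).mean()
    # Average number of topics holding at least 5% of a document
    mean_active = (thetas >= 0.05).sum(axis=1).mean()
    results[alpha] = (mean_max, mean_active)
    print(f"alpha={alpha:>5}: top-topic weight {mean_max:.2f}, topics >= 5%: {mean_active:.1f}")
```

Small α yields a large top-topic weight and few active topics, while large α spreads weight across most of the K topics. The same reasoning applies to β over the vocabulary.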

K:

- The total number of topics.

- Predefined as an input to the model.

M: The number of documents in the corpus.

Nᵢ: The number of words in document i.

Distributions and Variables

θ (theta):

- Topic distribution for a document.

- A vector where each entry corresponds to the proportion of a specific topic in the document.

ϕ (phi):

- Word distribution for a topic.

- A vector where each entry corresponds to the probability of a word appearing in the topic.

z:

- Latent topic assignment for a word in a document.

- Indicates which topic a word belongs to in the generative process.

w:

- Observed word in a document.

- Represents the actual words from the corpus.

P(w | z): The probability of a word given a topic.

P(z | θ): The probability of a topic given a document’s topic distribution.

Mathematical Notation

Σ (sigma): Summation symbol used in calculations (e.g., sum of probabilities).

∏ (capital pi): Product notation used in probability models.

Dir(θ | α): Dirichlet distribution governing the topic proportions θ with parameter α.

Mult(w | ϕ): Multinomial distribution generating words w from topic-word distribution ϕ.

log(): Logarithmic function, often used in likelihood calculations for optimization.

Indexes and Counters

i:

- Index for documents.

- Ranges from 1 to M (total documents).

j:

- Index for words within a document.

- Ranges from 1 to Nᵢ (total words in document i).

k:

- Index for topics.

- Ranges from 1 to K (total topics).

n(w, z): Count of word w assigned to topic z.

n(z, d): Count of topic z assigned to words in document d.
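These counts are what a collapsed Gibbs sampler updates. The following is a sketch that assumes symmetric priors α and β and uses V for the vocabulary size, a symbol not in the list above. Under those assumptions, the probability of assigning word j of document d to topic k, given all other assignments, is proportional to the expression below. The superscript ¬dj means the counts exclude the word currently being resampled. The document-length normalizer is the same for every k, so it is dropped.

```latex
P(z_{dj} = k \mid z_{\neg dj}, w)
  \propto \big( n^{\neg dj}(k, d) + \alpha \big)
  \cdot \frac{n^{\neg dj}(w_{dj}, k) + \beta}{\sum_{v=1}^{V} n^{\neg dj}(v, k) + V\beta}
```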

Visualization of Relationships Between Symbols

Here’s how these symbols interact in the generative process:

For each topic k: Sample ϕ_k ~ Dir(β) (word distribution for the topic).

For each document: Sample θ ~ Dir(α) (topic distribution for the document).

For each word in the document:

- Sample a topic z ~ Mult(θ) (topic assignment).

- Sample a word w ~ Mult(ϕ_z) (word from the topic’s word distribution).

These symbols, when combined, form the probabilistic framework of LDA and enable its applications in topic modeling.
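Written out, the generative process corresponds to the joint distribution below. This is a sketch in the symbols defined in this section, using w_{ij} and z_{ij} for the j-th word of document i and its topic. The second line shows how Σ marginalizes out the topic of a single word.

```latex
\begin{aligned}
p(\phi, \theta, z, w \mid \alpha, \beta)
  &= \prod_{k=1}^{K} \mathrm{Dir}(\phi_k \mid \beta)
     \prod_{i=1}^{M} \Big[ \mathrm{Dir}(\theta_i \mid \alpha)
     \prod_{j=1}^{N_i} P(z_{ij} \mid \theta_i)\, P(w_{ij} \mid \phi_{z_{ij}}) \Big] \\
P(w_{ij} \mid \theta_i, \phi)
  &= \sum_{k=1}^{K} \theta_{ik}\, \phi_{k, w_{ij}}
\end{aligned}
```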

What is LDA Used for in NLP?

LDA is widely used in NLP for uncovering the thematic structure of text data. Common applications include:

- Topic modeling: Identifying hidden topics in large text corpora.

- Document classification: Grouping similar documents based on topic composition.

- Recommendation systems: Matching users with content based on extracted topics.

- Text summarization: Extracting key themes for concise summaries.

LDA Solved Example

Let’s consider a small corpus with three documents:

- “I love machine learning and artificial intelligence.”

- “Natural language processing enables machines to learn.”

- “Deep learning advances artificial intelligence.”

Assume two latent topics:

- Topic 1: Machine learning and AI.

- Topic 2: Natural language processing and learning.

The LDA process assigns probabilities to words within each topic. For example (illustrative values):

- Topic 1: [“machine”: 0.3, “learning”: 0.2, “intelligence”: 0.5]

- Topic 2: [“natural”: 0.4, “language”: 0.3, “processing”: 0.3]

Each document is modeled as a mixture of these topics, so the model infers document-topic proportions such as:

- Doc 1: Topic 1 (70%), Topic 2 (30%)

- Doc 2: Topic 2 (80%), Topic 1 (20%)

- Doc 3: Topic 1 (60%), Topic 2 (40%)
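Because each document is a mixture, the probability of a word in a document is the topic-weighted average of the topic-word probabilities: P(w | d) = Σ_k P(k | d) · P(w | k). The snippet below applies this formula to the illustrative numbers in the example. Words not listed under a topic are treated as having probability 0. This is consistent with the example, because the listed probabilities for each topic already sum to 1.

```python
# Illustrative numbers from the solved example (not fitted model output).
phi = {
    "Topic 1": {"machine": 0.3, "learning": 0.2, "intelligence": 0.5},
    "Topic 2": {"natural": 0.4, "language": 0.3, "processing": 0.3},
}
theta = {
    "Doc 1": {"Topic 1": 0.7, "Topic 2": 0.3},
    "Doc 2": {"Topic 1": 0.2, "Topic 2": 0.8},
    "Doc 3": {"Topic 1": 0.6, "Topic 2": 0.4},
}


def word_prob(doc, word):
    """P(word | doc) = sum_k theta[doc][k] * phi[k][word]; unlisted words get 0."""
    return sum(weight * phi[topic].get(word, 0.0) for topic, weight in theta[doc].items())


for doc in theta:
    print(doc, {w: round(word_prob(doc, w), 2) for w in ("intelligence", "language")})
```

For example, P(“intelligence” | Doc 1) = 0.7 × 0.5 + 0.3 × 0 = 0.35, while P(“language” | Doc 2) = 0.2 × 0 + 0.8 × 0.3 = 0.24.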

Applications of LDA

- Healthcare: Analyzing patient records for prevalent conditions.

- Marketing: Understanding customer feedback through reviews.

- Education: Categorizing academic articles into research themes.

- Social Media: Identifying trending topics on platforms like Twitter.

Using LDA in Generative AI Solutions and Custom OpenAI Applications

LDA can be a powerful tool in generative AI solutions and can seamlessly integrate into custom OpenAI applications.

How LDA Can Be Used in Generative AI Solutions

LDA is primarily a topic modeling technique but can be instrumental in enhancing generative AI models by providing structured thematic information. Here are some specific ways LDA contributes:

Content Structuring and Summarization: LDA can extract topics from vast unstructured text data, allowing generative models to produce summaries or generate content with a specific thematic focus.

Fine-Tuned Prompt Engineering: By identifying dominant topics in a corpus, LDA can help craft highly relevant and contextual prompts for generative AI models like OpenAI’s GPT, which can make responses more accurate and coherent.

Guided Content Creation: Generative models do not inherently organize their output around a fixed set of themes. Integrating LDA can guide the generation process, creating content aligned with predefined or extracted topics, such as research papers, marketing blogs, or product descriptions.

Hybrid Knowledge Systems: LDA can identify domain-specific topics, enabling generative AI models to provide detailed and contextually accurate content or recommendations tailored to those topics.

Cross-Domain Adaptations: LDA’s ability to uncover latent topics from diverse corpora allows generative AI systems to transfer knowledge and generate cross-disciplinary insights.

Implementing LDA in Custom OpenAI Applications

Custom OpenAI applications can leverage LDA in the following ways:

Preprocessing Step for NLP Pipelines: Use LDA to preprocess and categorize input data into topics before feeding it to a generative model. This allows the model to specialize in generating content relevant to the identified topics.

Dynamic Topic-Based Response Generation: Use LDA to infer the topics of interest in a user’s query at run time, enabling OpenAI applications to respond with targeted and coherent information.
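Here is a minimal sketch of query-time topic detection with scikit-learn. The approach is to fit LDA on a corpus, then call `transform` on a new query to get its topic distribution and pick the dominant topic. The four-document support corpus and the helper name `dominant_topic` are hypothetical. With a corpus this small the topics are not reliable, so treat the output as illustrative. Any words in the query that are not in the fitted vocabulary are silently ignored by `CountVectorizer`.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical support-ticket corpus, used only for illustration.
corpus = [
    "refund payment charged twice invoice",
    "invoice payment refund billing error",
    "password login reset account locked",
    "login account password two factor",
]

vectorizer = CountVectorizer()
X = vectorizer.fit_transform(corpus)
lda = LatentDirichletAllocation(n_components=2, random_state=0).fit(X)


def dominant_topic(query):
    """Return (topic_index, topic_distribution) for a new query."""
    dist = lda.transform(vectorizer.transform([query]))[0]  # sums to 1
    return int(dist.argmax()), dist


topic, dist = dominant_topic("I cannot reset my password")
print(topic, dist.round(2))
```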

Integration Workflow:

- Input: Text corpus or user queries.

- Processing:

  - Apply LDA to extract dominant topics.

  - Use the topic distributions to define constraints or guide the generative model.

- Output: Generate topic-relevant responses or content.
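One way to use topic distributions to guide a generative model is to turn them into prompt context. The sketch below shows one possible approach. The function `build_topic_prompt`, the prompt wording, and the input values are all hypothetical. The resulting string would be passed as message content to whatever chat model the application uses.

```python
def build_topic_prompt(query, topic_words, topic_weights, top_n=2):
    """Compose a prompt asking a generative model to stay within the query's dominant topics.

    topic_words:   {topic_id: [top words, ...]}, e.g. taken from a fitted LDA model
    topic_weights: {topic_id: probability} for the query or document
    """
    # Keep only the top_n highest-weighted topics.
    ranked = sorted(topic_weights.items(), key=lambda kv: kv[1], reverse=True)[:top_n]
    lines = [
        f"- Topic {tid} ({weight:.0%}): {', '.join(topic_words[tid])}"
        for tid, weight in ranked
    ]
    return (
        "Answer the user's question, focusing on these themes:\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {query}"
    )


# Illustrative inputs (hypothetical values, not model output).
topic_words = {0: ["refund", "invoice", "payment"], 1: ["password", "login", "account"]}
prompt = build_topic_prompt("How do I reset my password?", topic_words, {0: 0.15, 1: 0.85})
print(prompt)
```

Keeping only the top few topics (here `top_n=2`) keeps the prompt short, and the percentages indicate to the model how much weight each theme should get.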

Custom Use Case Examples:

- E-learning Platforms: Automatically generate lesson summaries or topic-specific quizzes.

- Customer Support: Categorize and respond to customer queries with topic-specific FAQs or solutions.

- Content Marketing: Generate blog posts or campaign content focusing on popular or trending topics identified by LDA.

Example Workflow for Custom Applications

Step 1: Topic Extraction with LDA

Use Python libraries like Gensim or scikit-learn to extract topics from your dataset.

Step 2: OpenAI Integration

Pass the extracted topics as part of the input context to OpenAI’s API.

Step 3: Generate Topic-Specific Outputs

Use OpenAI’s completion or chat models to generate outputs that align with the identified topics.

Code Snippet for Integration

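```python
from gensim.corpora.dictionary import Dictionary
from gensim.models.ldamodel import LdaModel
from openai import OpenAI  # openai>=1.0 client; the legacy openai.ChatCompletion API was removed

# Topic modeling with LDA
documents = ["AI and machine learning are transforming industries",
             "Natural language processing helps with text analysis",
             "Deep learning advances in AI"]

# Naive whitespace tokenization; real pipelines should also remove stopwords
texts = [[word.lower() for word in doc.split()] for doc in documents]
dictionary = Dictionary(texts)
corpus = [dictionary.doc2bow(text) for text in texts]
lda = LdaModel(corpus=corpus, id2word=dictionary, num_topics=2, random_state=42)

# Extract topics as (topic_id, "weight*word + ...") pairs and format them as text
topics = lda.show_topics(num_words=5)
topic_text = "\n".join(f"Topic {idx}: {words}" for idx, words in topics)

# OpenAI generative model; OpenAI() reads the OPENAI_API_KEY environment variable
client = OpenAI()
response = client.chat.completions.create(
    model="gpt-4",
    messages=[
        {"role": "system", "content": "You write concise summaries."},
        {"role": "user", "content": f"Generate a summary about these topics:\n{topic_text}"},
    ],
)

print(response.choices[0].message.content)
```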

Why Use LDA in Generative AI and OpenAI Applications?

Enhanced Context Understanding: LDA complements generative models by adding interpretability and structure, crucial for applications like report generation or chatbot responses.

Domain-Specific Insights: LDA identifies domain-specific topics, which generative models can leverage to produce accurate, relevant, and coherent outputs.

Hybrid AI Architectures: Combining LDA’s topic modeling with OpenAI’s generative power creates hybrid AI systems capable of performing thematic analysis and generating advanced narratives.

LDA Topic Modeling

Topic modeling involves using LDA to assign documents to topics and extract the most representative words for each topic. For example:

- Input: A collection of research papers.

- Output: Topics like “Artificial Intelligence,” “Data Science,” etc., with associated keywords.

LDA helps distill vast amounts of text data into manageable themes, making it easier to analyze and interpret.


LDA Algorithm

The LDA algorithm involves three main steps:

Initialization: Assign a random topic to each word in the corpus (or, for variational inference, random initial parameters).

Iterative updates: Use methods like Gibbs Sampling or Variational Inference to:

- Refine topic assignments for words.

- Update distributions for topics and words.

Convergence: Stop when topic distributions stabilize.
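These three steps map directly onto collapsed Gibbs sampling, one of the two methods named above. The following NumPy sketch makes several simplifying assumptions. It uses symmetric priors, expects documents already converted to integer word IDs, and runs a fixed number of sweeps in place of a formal convergence test. `lda_gibbs` is an illustrative name, not a library function. Gensim and scikit-learn use variational inference instead.

```python
import numpy as np


def lda_gibbs(docs, num_topics, vocab_size, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA with symmetric priors.

    docs: list of documents, each a list of integer word ids in [0, vocab_size).
    Returns (theta, phi): document-topic and topic-word distributions.
    """
    rng = np.random.default_rng(seed)
    n_dk = np.zeros((len(docs), num_topics))   # n(z, d): topic counts per document
    n_kw = np.zeros((num_topics, vocab_size))  # n(w, z): word counts per topic
    n_k = np.zeros(num_topics)                 # total words assigned to each topic

    # Initialization: assign a random topic to every word token.
    z = [rng.integers(num_topics, size=len(doc)) for doc in docs]
    for d, doc in enumerate(docs):
        for w, k in zip(doc, z[d]):
            n_dk[d, k] += 1
            n_kw[k, w] += 1
            n_k[k] += 1

    # Iterative updates: resample each token's topic given all other assignments.
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for j, w in enumerate(doc):
                k = z[d][j]
                # Remove the current token from the counts.
                n_dk[d, k] -= 1
                n_kw[k, w] -= 1
                n_k[k] -= 1
                # Full conditional P(z = k | rest), up to a constant.
                p = (n_dk[d] + alpha) * (n_kw[:, w] + beta) / (n_k + vocab_size * beta)
                k = rng.choice(num_topics, p=p / p.sum())
                # Add the token back under its new topic.
                z[d][j] = k
                n_dk[d, k] += 1
                n_kw[k, w] += 1
                n_k[k] += 1

    # Point estimates of theta and phi from the final counts.
    theta = (n_dk + alpha) / (n_dk + alpha).sum(axis=1, keepdims=True)
    phi = (n_kw + beta) / (n_kw + beta).sum(axis=1, keepdims=True)
    return theta, phi


vocab = ["machine", "learning", "ai", "language", "text", "processing"]
docs = [[0, 1, 2, 0, 1], [3, 4, 5, 3, 4], [0, 2, 1, 2], [5, 3, 4, 5]]
theta, phi = lda_gibbs(docs, num_topics=2, vocab_size=len(vocab), iters=100)
for k, row in enumerate(phi):
    print(f"Topic {k}:", [vocab[i] for i in row.argsort()[::-1][:3]])
```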

LDA in Python

Python libraries like Gensim and scikit-learn provide robust implementations of LDA.

Example with Gensim:

```python
from gensim.corpora.dictionary import Dictionary
from gensim.models.ldamodel import LdaModel

# Sample corpus
documents = ["I love machine learning", "Deep learning advances AI", "Natural language processing"]

# Preprocess data: lowercase and split on whitespace
texts = [[word.lower() for word in doc.split()] for doc in documents]
dictionary = Dictionary(texts)
corpus = [dictionary.doc2bow(text) for text in texts]

# Train LDA model (random_state makes the run reproducible)
lda = LdaModel(corpus=corpus, id2word=dictionary, num_topics=2, random_state=42)

# Display topics
for idx, topic in lda.print_topics():
    print(f"Topic {idx}: {topic}")
```

LDA with scikit-learn

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Sample corpus
documents = ["I love machine learning", "Deep learning advances AI", "Natural language processing"]

# Convert text to a document-term count matrix
vectorizer = CountVectorizer()
X = vectorizer.fit_transform(documents)

# Train LDA model
lda = LatentDirichletAllocation(n_components=2, random_state=42)
lda.fit(X)

# Display the five highest-weighted terms per topic
terms = vectorizer.get_feature_names_out()
for idx, topic in enumerate(lda.components_):
    print(f"Topic {idx}: {[terms[i] for i in topic.argsort()[:-6:-1]]}")
```

Advantages of LDA

- Interpretable: Produces human-readable topics and word distributions.

- Unsupervised: Requires no labeled data, making it versatile.

- Scalable: Handles large corpora efficiently, especially with online (mini-batch) variational inference.
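Online variational inference updates the model one mini-batch at a time, so the full corpus never needs to be in memory. Below is a sketch using scikit-learn's `partial_fit` on a hypothetical stream of batches. It uses `HashingVectorizer` because that vectorizer needs no vocabulary fitted in advance. The trade-off is that the resulting topic weights refer to hashed feature indices rather than readable words.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import HashingVectorizer

# HashingVectorizer needs no fitted vocabulary, so new batches can stream in.
# alternate_sign=False and norm=None keep non-negative raw counts, which LDA requires.
vectorizer = HashingVectorizer(n_features=2**12, alternate_sign=False, norm=None)
lda = LatentDirichletAllocation(n_components=3, learning_method="online", random_state=0)

# Hypothetical stream of document batches (e.g., read from disk in chunks).
batches = [
    ["machine learning models learn from data", "deep learning advances ai"],
    ["natural language processing analyzes text", "language models process text"],
    ["customer reviews mention fast shipping", "shipping delays frustrate customers"],
]
for batch in batches:
    lda.partial_fit(vectorizer.transform(batch))  # one online variational update per batch

print(lda.components_.shape)  # (3, 4096)
```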

Limitations of LDA

- Fixed Number of Topics: The number of topics must be predefined.

- Sensitive to Parameters: Performance heavily depends on α and β values.

- Short Texts: Struggles with short documents like tweets.

- Bag-of-Words Assumption: Ignores word order and context.
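The first two limitations can be partly managed in code. This sketch uses a small hypothetical corpus. It sets α and β explicitly through scikit-learn's `doc_topic_prior` and `topic_word_prior` parameters. It then compares several values of K by held-out perplexity, where lower is better. Perplexity is only one heuristic and does not always agree with human judgments of topic quality, so coherence scores or manual inspection are common complements.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical toy corpus; a meaningful comparison needs far more documents.
train_docs = [
    "machine learning models learn from data",
    "deep learning advances artificial intelligence",
    "natural language processing analyzes text",
    "language models process text and speech",
    "neural networks power deep learning",
    "text mining extracts topics from documents",
]
heldout_docs = ["machine learning for text", "deep neural networks learn"]

vectorizer = CountVectorizer()
X_train = vectorizer.fit_transform(train_docs)
X_heldout = vectorizer.transform(heldout_docs)  # unseen words are dropped

scores = {}
for k in (2, 3, 4):
    lda = LatentDirichletAllocation(
        n_components=k,
        doc_topic_prior=0.1,    # alpha: smaller -> fewer topics per document
        topic_word_prior=0.01,  # beta: smaller -> fewer dominant words per topic
        random_state=0,
    ).fit(X_train)
    scores[k] = lda.perplexity(X_heldout)  # lower is better
    print(f"K={k}: held-out perplexity {scores[k]:.1f}")
```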

Alternatives to LDA

- Non-Negative Matrix Factorization (NMF): Often reported to work better on short texts.

- Hierarchical Dirichlet Process (HDP): Dynamically determines the number of topics.

- Neural Topic Models: Leverage deep learning, which can improve topic quality.

- BERT-Based Models: Embedding-based approaches for contextualized topic extraction.

Relevance of LDA in Upcoming Enterprise AI Solutions in 2025

As enterprises increasingly adopt AI-driven tools for automation and analytics, LDA remains relevant due to its foundational role in topic modeling and text analysis. By 2025, its importance will be amplified in the following areas:

Enhanced Content Categorization: Enterprises will use LDA to classify and structure vast amounts of unstructured text data from diverse sources such as customer reviews, emails, and reports.

Intelligent Knowledge Management Systems: LDA will play a role in developing systems that retrieve and organize business-critical information, improving decision-making.

Personalized Customer Experience: LDA-powered models will enhance recommendation engines by understanding customer preferences at a thematic level.

Integration with Generative AI: LDA can complement generative AI models by providing interpretable topic structures, enabling fine-tuned content generation and summarization.

Scalability in Big Data Environments: With advancements in distributed computing, LDA will become even more efficient for analyzing large-scale enterprise datasets.

Enterprises looking for cost-effective, interpretable, and robust solutions will continue to rely on LDA while integrating it with modern AI technologies for hybrid solutions.