Hadoop Data Warehouse Project

Apache Hadoop-Based Data Warehousing: Overview & Insights

Companies have used decision support systems for years, but each time they needed to write a new decision support application, they had to go out and find the data all over again. This was because large companies such as General Motors ran centralized database management systems (DBMS) that were “best-in-class” in their day but were constrained by physical space and cost. Too often, the volume and complexity of operational data brought these systems to their knees.

Then, in the early 1990s, GM began aggregating all of its vehicle and vehicle-order data into data warehouses. These information systems separated the company’s data query activity from its production (operational) databases. Data access became easier and less costly, regardless of where the source data lived. This brings us to the question: what is a data warehouse?

WHAT IS A DATA WAREHOUSE?

A data warehouse is a structured relational database in which you aim to collect all the relevant data from multiple systems. When putting data into a warehouse, you need to clean and structure it at the point of insertion. This cleaning and structuring process is usually called ETL (Extract, Transform, and Load).
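To make the three ETL steps concrete, here is a minimal Python sketch that uses an in-memory SQLite database as a stand-in for the warehouse. The sample rows, the `orders` table and the function names are hypothetical. Note that the incomplete row is discarded during the Transform step, so once the load finishes the warehouse holds only the cleaned version of the data.

```python
import csv
import io
import sqlite3

# Hypothetical export from an operational system: inconsistent casing,
# stray whitespace and one missing amount.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,5.00
3,carol,
"""


def extract(text):
    """Extract: read the raw rows from the source export."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: clean and type each row; rows that fail validation are dropped."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # the incomplete row never reaches the warehouse
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), float(amount)))
    return cleaned


def load(conn, rows):
    """Load: insert the cleaned rows into a fixed relational schema."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for the warehouse
load(conn, transform(extract(RAW_CSV)))
result = conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall()
print(result)  # [('Alice', 19.99), ('Bob', 5.0)]
```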

The data warehouse approach is helpful because your data looks clean and simple, but it can also be very limiting. Because the data has already been pre-processed, you can no longer easily change the questions you want to ask later, or correct errors made during the ETL process. It can also become very expensive, since enterprise data warehouses are typically built on specialized infrastructure whose cost climbs steeply for large datasets.

Enter Hadoop…

THE HADOOP ECOSYSTEM

The Hadoop ecosystem starts from the same aim of collecting as much relevant data as possible from different systems, but approaches it in a fundamentally different way. With this approach, you store all data of interest, in its raw form, in a large data store (usually HDFS, the Hadoop Distributed File System). This store often runs in the cloud, which suits the task well: cloud storage is flexible and inexpensive, and it puts the data in close proximity to cheap cloud computing power. You can still run ETL and build a data warehouse with tools like Hive if you want, but, more importantly, you still have all of the raw data available, so you can define new questions later and run complex analyses over all of the raw historical data in whatever way you see fit.
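The contrast with ETL can be sketched as schema-on-read: the raw records are kept unchanged, and a schema (here, just a list of field names) is applied only when a question is asked. This is a minimal Python illustration, not Hive itself; the event records and the `read_with_schema` helper are hypothetical. Hive applies table definitions to files already sitting in storage in a broadly similar spirit, at much larger scale.

```python
import json
from collections import Counter

# Raw events kept exactly as they arrived (hypothetical sample). In a Hadoop
# deployment these lines would live in files on HDFS or cloud storage.
RAW_EVENTS = [
    '{"user": "u1", "action": "view", "channel": "email"}',
    '{"user": "u2", "action": "buy", "channel": "web"}',
    '{"user": "u1", "action": "buy", "channel": "email", "coupon": "SPRING"}',
]


def read_with_schema(lines, fields):
    """Schema-on-read: parse each raw line and project the requested fields at query time.

    Fields missing from a record come back as None instead of failing.
    """
    for line in lines:
        record = json.loads(line)
        yield {field: record.get(field) for field in fields}


# Question asked on day one: purchases per user.
purchases = Counter(
    r["user"] for r in read_with_schema(RAW_EVENTS, ["user", "action"]) if r["action"] == "buy"
)

# A new question asked later: which purchases used a coupon? The raw data is still
# there, so the new field can be read without re-ingesting or re-running ETL.
coupon_buys = [
    r
    for r in read_with_schema(RAW_EVENTS, ["user", "action", "coupon"])
    if r["action"] == "buy" and r["coupon"]
]

print(dict(purchases))  # {'u2': 1, 'u1': 1}
print(coupon_buys)      # [{'user': 'u1', 'action': 'buy', 'coupon': 'SPRING'}]
```

Had the `coupon` field been dropped by an up-front ETL step, the second question could not be answered without going back to the source systems.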

The Hadoop toolset offers great flexibility and analytical power because it performs large computations by splitting a task across large numbers of cheap commodity machines. This lets you perform much more powerful, speculative, and rapid analyses than are possible in a traditional warehouse.
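Splitting one computation across many machines is what Hadoop MapReduce implements. The following minimal Python sketch runs the classic word-count example sequentially on one machine, purely to show the map, shuffle and reduce steps; on a real cluster the framework would run the map calls in parallel on the nodes holding each input split and would perform the shuffle itself. The function names are illustrative, not Hadoop APIs.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split):
    """Map: emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped_pairs):
    """Shuffle: group all values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all the values emitted for one key."""
    return key, sum(values)


# Each string stands in for a block of input that a different machine would process.
splits = ["the cat sat", "the dog sat", "The end"]

# Run sequentially here; on a cluster each map_phase call would run on a separate node.
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)  # {'the': 3, 'cat': 1, 'sat': 2, 'dog': 1, 'end': 1}
```

Because each map call depends only on its own split, adding machines lets more splits be processed at once, which is the source of the scaling described above.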

Hadoop is an open-source, Java-based software framework for storing data and running applications on clusters of commodity hardware. It provides massive storage for any kind of data, enormous processing power, and the ability to run a very large number of concurrent tasks or jobs. By redefining the way data can be stored and processed, Hadoop has become a household name for businesses across many industries.

The Hadoop Distributed File System (HDFS) is the underlying file system of a Hadoop cluster. It provides scalable, fault-tolerant, rack-aware data storage designed to be deployed on commodity hardware. Together with Hadoop’s processing framework, it enables distributed storage and distributed processing of very large data sets on computer clusters built from commodity hardware.
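The storage side can be illustrated with a little arithmetic and a toy placement function. In recent Hadoop releases the default block size (`dfs.blocksize`) is 128 MB and the default replication factor (`dfs.replication`) is 3. When the writer runs on a datanode, the default rack-aware policy puts one replica on that node and the other two on two different nodes in a single remote rack. The Python sketch below assumes a hypothetical two-rack cluster and picks nodes deterministically; real HDFS also randomizes its choices and considers node load and free space.

```python
import math

BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the default dfs.blocksize in recent releases
REPLICATION = 3                 # the default dfs.replication

# Hypothetical cluster layout: rack name -> datanodes on that rack.
CLUSTER = {
    "rack1": ["dn1", "dn2", "dn3"],
    "rack2": ["dn4", "dn5", "dn6"],
}


def num_blocks(file_size):
    """A file is split into fixed-size blocks; the last block may be smaller."""
    return math.ceil(file_size / BLOCK_SIZE)


def place_replicas(writer_node, cluster):
    """Toy version of the default 3-replica rack-aware policy:
    1st replica on the writer's node, 2nd on a node in a different rack,
    3rd on another node in that same remote rack."""
    rack_of = {node: rack for rack, nodes in cluster.items() for node in nodes}
    local_rack = rack_of[writer_node]
    remote_rack = next(rack for rack in cluster if rack != local_rack)
    second, third = cluster[remote_rack][:2]
    return [writer_node, second, third]


size = 300 * 1024 * 1024                # a 300 MB file
print(num_blocks(size))                 # 3 blocks: 128 MB + 128 MB + 44 MB
print(num_blocks(size) * REPLICATION)   # 9 block replicas stored in total
print(place_replicas("dn2", CLUSTER))   # ['dn2', 'dn4', 'dn5']
```

Spreading replicas over two racks means the data survives the loss of a whole rack, while keeping two replicas in one rack limits cross-rack write traffic.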


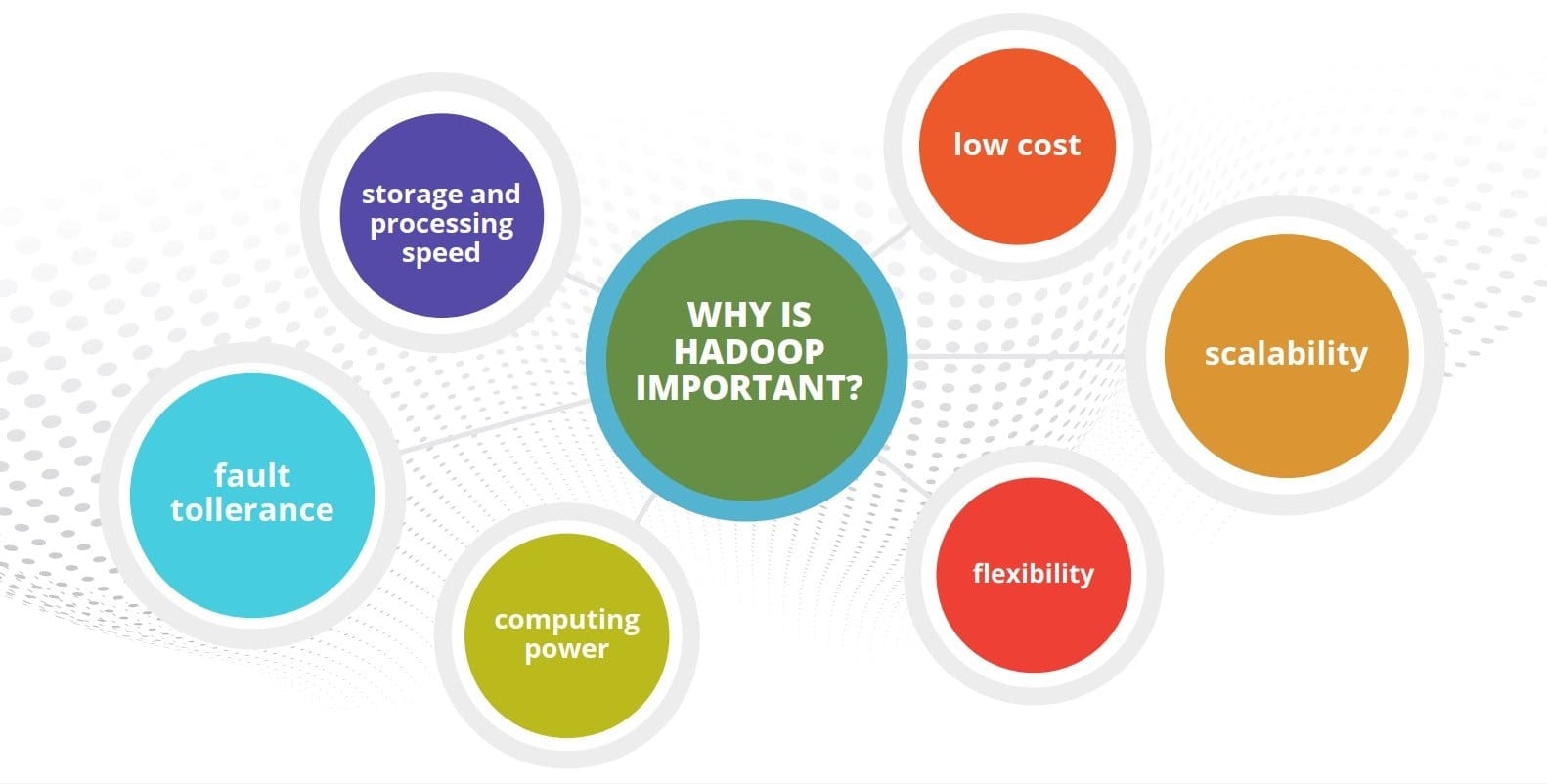

While large Web 2.0 companies such as Yahoo and Facebook use Hadoop to store and manage their huge data sets, Hadoop has also proven valuable for many more traditional enterprises, thanks to the following six key advantages:

1. Ability to store and process huge amounts of any kind of data, quickly: With data volumes and varieties constantly increasing, especially from social media and the Internet of Things (IoT), that’s a key consideration.

2. Computing power: Hadoop’s distributed computing model processes big data fast. The more computing nodes you use, the more processing power you have.

3. Fault tolerance: Data and application processing are protected against hardware failure. If a node goes down, jobs are automatically redirected to other nodes so that the distributed computation does not fail. Multiple copies of all data are stored automatically.

4. Flexibility: Unlike traditional relational databases, you don’t have to preprocess data before storing it. You can store as much data as you want and decide how to use it later. That includes unstructured data like text, images and videos.

5. Low cost: The open-source framework is free and uses commodity hardware to store large quantities of data.

6. Scalability: Unlike traditional relational database management systems (RDBMS), which are difficult and expensive to scale to very large data volumes, Hadoop enables businesses to run applications on thousands of nodes involving thousands of terabytes of data. You can grow your system to handle more data simply by adding nodes.
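The failover behavior described under fault tolerance can be sketched as a scheduler that retries a task on another candidate node when the first one is unreachable. This is a hypothetical, single-process Python illustration of the idea, not how YARN or MapReduce are implemented; the node names and the `run_on` stand-in are assumptions. On a real cluster the scheduler would preferably pick nodes that hold a replica of the task's input block, which is why data replication and fault-tolerant processing work together.

```python
# Hypothetical cluster state: True means the node is healthy.
NODES = {"node-a": False, "node-b": True, "node-c": True}  # node-a has failed


def run_on(node, task):
    """Stand-in for launching a task on a node; raises if the node is down."""
    if not NODES[node]:
        raise ConnectionError(f"{node} is unreachable")
    return f"{task} done on {node}"


def run_with_failover(task, candidates):
    """Try candidate nodes in order, redirecting the task whenever one fails."""
    errors = []
    for node in candidates:
        try:
            return run_on(node, task)
        except ConnectionError as exc:
            errors.append(str(exc))  # record the failure and move on to the next node
    raise RuntimeError(f"{task} failed on all candidate nodes: {errors}")


print(run_with_failover("map-task-7", ["node-a", "node-b", "node-c"]))
# map-task-7 done on node-b
```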

The growing need to analyze, organize and convert big data into meaningful information is what has driven the global growth of the yellow elephant, Hadoop. Analysts predict that the global Hadoop market will grow at a CAGR of more than 59% through 2020.

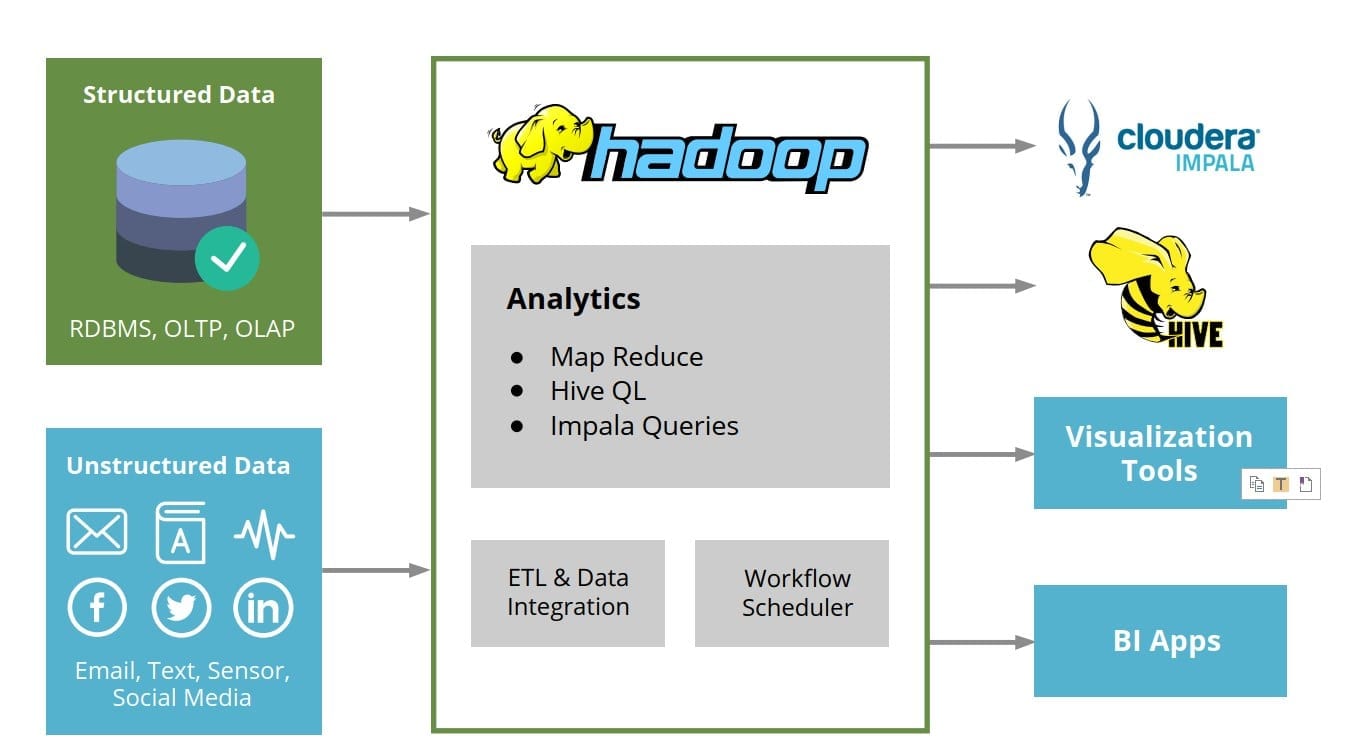

HADOOP DATA WAREHOUSE ARCHITECTURE

The following illustration shows a simple architecture of a typical Hadoop data warehouse.

- End users directly access data derived from several source systems through the data warehouse.

- Structured, semi-structured and unstructured raw data from diverse sources is ingested and analyzed in the Hadoop data warehouse.

- This data can come from operational RDBMSs, other enterprise data warehouses, human-entered logs, machine-generated data files or any other specialized systems. It is ingested using native tools and/or third-party data integration products.
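Ingestion into the Hadoop layer is often a matter of landing files unchanged in an organized directory layout. The Python sketch below writes one structured, one semi-structured and one unstructured sample into a local temporary directory that stands in for HDFS; in practice the files would be copied with a native tool such as `hdfs dfs -put` or a third-party integration product. The source names, the `land` helper and the `dt=YYYY-MM-DD` subdirectory (which follows the Hive-style `key=value` partition naming) are assumptions for illustration.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land(raw_root, source, ingest_date, filename, payload):
    """Write a payload byte-for-byte under <root>/<source>/dt=<YYYY-MM-DD>/<filename>."""
    target_dir = Path(raw_root) / source / f"dt={ingest_date.isoformat()}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / filename
    target.write_bytes(payload)  # no parsing or cleaning at ingestion time
    return target


day = date(2024, 1, 15)
with tempfile.TemporaryDirectory() as root:  # local stand-in for an HDFS directory
    landed = [
        # Structured: an export from an operational RDBMS.
        land(root, "crm_rdbms", day, "customers.csv", b"id,name\n1,Alice\n"),
        # Semi-structured: machine-generated JSON events.
        land(root, "clickstream", day, "events.json",
             json.dumps({"user": "u1", "page": "/home"}).encode("utf-8")),
        # Unstructured: free-text notes entered by people.
        land(root, "support_notes", day, "notes.txt", b"Caller asked about a billing error.\n"),
    ]
    relative_paths = [p.relative_to(root).as_posix() for p in landed]

for path in relative_paths:
    print(path)
```

Keeping each source and ingestion date in its own directory makes it easy to reprocess a single day later, or to expose a directory to a query engine as a table partition.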

A Hadoop data warehouse has the following characteristics:

- Integrates into any enterprise BI environment via standard connectivity and security mechanisms.

- Performs comparably to, or better than, most of its commercial competitors.

- Supports ANSI SQL (with a few limitations).

A Hadoop data warehouse retains Hadoop’s core strengths:

- Flexibility

- Ease of scaling

- Cost effectiveness

WHY YOU SHOULD CHOOSE THIRD EYE

Third Eye brings strong real-world experience and expertise in Hadoop data warehousing projects. Our partnership approach to projects, our no-surprises project management philosophy, and our ability to leverage an (onsite-offsite-offshore) delivery model ensure value for every dollar spent.

Third Eye will conduct your project in phases, including data ingestion, data profiling, data modeling and ETL design.
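Of these phases, data profiling is the simplest to illustrate: it usually starts with per-column statistics on each ingested source, which reveal gaps and inconsistencies before modeling and ETL design begin. A minimal Python sketch, assuming a small hypothetical CSV sample and a hypothetical `profile` helper, that reports row counts, empty values and distinct non-empty values per column:

```python
import csv
import io

# Hypothetical sample from an ingested source.
SAMPLE = """customer_id,country,signup_date
101,US,2023-04-01
102,,2023-04-03
103,US,
104,DE,2023-05-10
"""


def profile(text):
    """Per-column statistics: row count, empty values and distinct non-empty values."""
    reader = csv.DictReader(io.StringIO(text))
    rows = list(reader)
    report = {}
    for column in reader.fieldnames:
        values = [row[column] for row in rows]
        non_empty = [v for v in values if v.strip()]
        report[column] = {
            "rows": len(values),
            "empty": len(values) - len(non_empty),
            "distinct": len(set(non_empty)),
        }
    return report


report = profile(SAMPLE)
for column, stats in report.items():
    print(column, stats)
```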

Third Eye Hadoop solutions will help you collect, analyze and report on customer experiences as you manage:

- Customer data. Gather all the right data, where and when you need it, from across the entire customer life cycle.

- Marketing mix. Analyze, forecast and optimize your mix of advertising and promotions across websites, emails, social media, forums and more.

- Social media analytics. Identify and harness top social network influencers and their communities.

- Customer segmentation. Know which customer groups are most likely to purchase your products and services, and why.

- Real-time decisions. Make the best offers to the right customers at the right time.

Because Third Eye is focused on analytics, not storage, we offer a flexible approach to choosing the right hardware and database vendors. We can help you deploy the right mix of technologies, including Hadoop and other data warehouse technologies.

Remember: “The success of any project is determined by the value it brings.” Metrics built around revenue generation, margins, risk reduction and process improvements will help pilot projects gain wider acceptance and attract interest from other departments. We’ve found that many organizations are looking at how they can implement one or two projects in Hadoop first, with plans to add more in the future.

CONTACT US

Third Eye Data

5201 Great America Parkway, Suite 320,

Santa Clara, CA USA 95054

Phone: 408-462-5257
