Azure Stream Analytics is a managed event-processing engine for setting up real-time analytic computations on streaming data. The data can come from devices, sensors, websites, social media feeds, applications, infrastructure systems, and more.

Use Stream Analytics to examine high volumes of data streaming from devices or processes, extract information from that data stream, and identify patterns, trends, and relationships. Use those patterns to trigger other processes or actions, such as raising alerts, starting automation workflows, feeding information to a reporting tool, or storing it for later investigation.

Some examples:

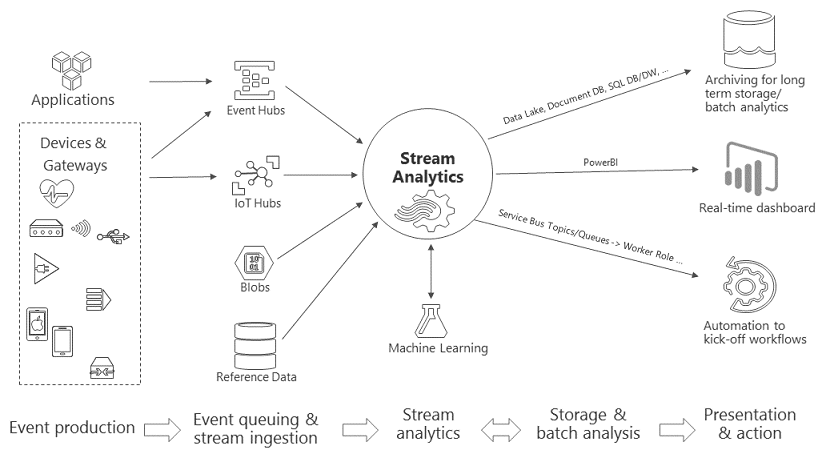

This diagram illustrates the Stream Analytics pipeline, showing how data is ingested, analyzed, and then sent for presentation or action.

Stream Analytics pipeline

Stream Analytics starts with a source of streaming data. The data can be ingested into Azure from a device using an Azure event hub or IoT hub. The data can also be pulled from a data store like Azure Blob Storage.

To examine the stream, you create a Stream Analytics job that specifies where the data comes from. The job also specifies a transformation: how to look for data, patterns, or relationships. For this task, Stream Analytics supports a SQL-like query language to filter, sort, aggregate, and join streaming data over a time period.
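To make "aggregate over a time period" concrete, here is a minimal conceptual sketch in plain Python of a fixed-size (tumbling) window average with an optional filter. It is not the Stream Analytics query language or API. The event fields (`device_id`, `time`, `temperature`), the sample data, and the function name are all assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Hypothetical sample events; the field names are assumptions for illustration.
EVENTS = [
    {"device_id": "d1", "time": "2024-01-01T00:00:05+00:00", "temperature": 20.0},
    {"device_id": "d1", "time": "2024-01-01T00:00:25+00:00", "temperature": 22.0},
    {"device_id": "d2", "time": "2024-01-01T00:00:10+00:00", "temperature": 30.0},
    {"device_id": "d1", "time": "2024-01-01T00:00:40+00:00", "temperature": 26.0},
]


def tumbling_window_average(events, window_seconds=30, min_temperature=None):
    """Filter events, then average temperature per device per fixed window."""
    buckets = defaultdict(list)
    for event in events:
        # Filter step: skip readings below the optional threshold.
        if min_temperature is not None and event["temperature"] < min_temperature:
            continue
        # Assign the event to the window that contains its timestamp.
        ts = datetime.fromisoformat(event["time"]).timestamp()
        window_start = int(ts // window_seconds) * window_seconds
        buckets[(event["device_id"], window_start)].append(event["temperature"])

    # Aggregate step: one output row per (device, window), sorted for stability.
    results = []
    for (device_id, window_start), temps in sorted(buckets.items()):
        results.append({
            "device_id": device_id,
            "window_start": datetime.fromtimestamp(
                window_start, tz=timezone.utc
            ).isoformat(),
            "avg_temperature": sum(temps) / len(temps),
        })
    return results


if __name__ == "__main__":
    for row in tumbling_window_average(EVENTS):
        print(row)
```

In a real job, the same idea would be expressed declaratively in the query rather than as a loop; the sketch only shows what the grouping and windowing compute.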

Finally, the job specifies an output for that transformed data. You control what to do in response to the information you’ve analyzed. For example, in response to the analysis, you might:

You can adjust the number of events processed per second while the job is running. You can also produce diagnostic logs for troubleshooting.

Stream Analytics is designed to be easy to use, flexible, and scalable to any job size.

Stream Analytics connects directly to Azure Event Hubs and Azure IoT Hub for stream ingestion, and to Azure Blob storage to ingest historical data. Use Stream Analytics to combine data from event hubs with other data sources and processing engines. Job input can also include reference data (static or slow-changing data). You can join streaming data to this reference data to perform lookup operations the same way you would with database queries.
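The lookup against reference data can be pictured as an inner join between each incoming event and a small, slow-changing table. The following is a conceptual Python sketch only, not the service's own join syntax. The reference table, its fields (`site`, `max_temperature`), and the function names are hypothetical.

```python
# Hypothetical reference data: a slow-changing lookup table keyed by device ID.
REFERENCE = {
    "d1": {"site": "Plant A", "max_temperature": 25.0},
    "d2": {"site": "Plant B", "max_temperature": 35.0},
}

# Hypothetical streaming events; "d9" has no reference row on purpose.
STREAM = [
    {"device_id": "d1", "temperature": 27.5},
    {"device_id": "d2", "temperature": 30.0},
    {"device_id": "d9", "temperature": 99.0},
]


def join_with_reference(events, reference):
    """Inner-join each streaming event to its reference row by device_id."""
    for event in events:
        ref = reference.get(event["device_id"])
        if ref is None:
            continue  # inner join: drop events with no matching reference row
        yield {**event, **ref}


def over_limit(events, reference):
    """Return enriched events whose temperature exceeds the per-device limit."""
    return [
        row
        for row in join_with_reference(events, reference)
        if row["temperature"] > row["max_temperature"]
    ]


if __name__ == "__main__":
    for alert in over_limit(STREAM, REFERENCE):
        print(alert)
```

Keeping the per-device limit in reference data rather than in the query means the threshold can change without editing the transformation.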

Route Stream Analytics job output in many directions. Write to storage such as Azure Blob storage, Azure SQL Database, Azure Data Lake Store, or Azure Cosmos DB. From there, you could run batch analytics with Azure HDInsight. Or send the output to another service for consumption by another process, such as event hubs, Azure Service Bus, or queues, or send it to Power BI for visualization.

To define transformations, you use a simple, declarative Stream Analytics query language that lets you create sophisticated analyses with no programming. The query language takes streaming data as its input. You can then filter and sort the data, aggregate values, perform calculations, join data (within a stream or to reference data), and use geospatial functions. You can edit queries in the portal, using IntelliSense and syntax checking, and you can test queries using sample data that you can extract from the live stream.

You can extend the capabilities of the query language by defining and invoking additional functions. You can define function calls in the Azure Machine Learning service to take advantage of Azure Machine Learning solutions. You can also integrate JavaScript user-defined functions (UDFs) to perform complex calculations as part of a Stream Analytics query.

Stream Analytics can handle up to 1 GB of incoming data per second. Integration with Azure Event Hubs and Azure IoT Hub allows jobs to ingest millions of events per second coming from connected devices, clickstreams, and log files, to name a few. Using the partition feature of event hubs, you can partition computations into logical steps, each with the ability to be further partitioned to increase scalability.
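The scaling idea behind partitioning is that events sharing a key land in the same partition, so each partition can be computed independently and the partial results merged. The sketch below illustrates that idea in plain Python. It is not how the service or Event Hubs assigns partitions; the CRC32-based assignment, the event fields, and the function names are assumptions chosen for illustration.

```python
import zlib
from collections import Counter

# Hypothetical events; only the partition key matters for this sketch.
EVENTS = [
    {"device_id": "d1"}, {"device_id": "d2"}, {"device_id": "d1"},
    {"device_id": "d3"}, {"device_id": "d2"}, {"device_id": "d1"},
]


def partition_events(events, partition_count, key="device_id"):
    """Assign each event to a partition using a stable hash of its key."""
    partitions = [[] for _ in range(partition_count)]
    for event in events:
        index = zlib.crc32(event[key].encode("utf-8")) % partition_count
        partitions[index].append(event)
    return partitions


def count_per_device(events):
    """The per-partition computation: count events for each device."""
    return Counter(event["device_id"] for event in events)


def partitioned_count(events, partition_count):
    """Compute each partition independently, then merge the partial results."""
    total = Counter()
    for partition in partition_events(events, partition_count):
        total.update(count_per_device(partition))
    return total


if __name__ == "__main__":
    print(partitioned_count(EVENTS, partition_count=4))
```

Because every event for a given device falls into exactly one partition, the merged counts match what a single pass over all events would produce, and the per-partition work could run in parallel.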

As a cloud service, Stream Analytics is optimized for cost. You pay based on streaming-unit usage and the amount of data processed. Usage is derived from the volume of events processed and the amount of computing power provisioned within the job cluster.

As a managed service, Stream Analytics helps prevent data loss and provides business continuity. If failures occur, the service provides built-in recovery capabilities. Because it maintains state internally, the service provides repeatable results: you can archive events and reapply processing in the future, and always get the same results. This enables you to go back in time and investigate computations when doing root-cause analysis, what-if analysis, and so on.
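One way to picture repeatable results is that processing depends only on the events and their timestamps, not on the order in which they happen to arrive. The following conceptual Python sketch, which is not the service's internal mechanism, replays a hypothetical archive in shuffled order and gets the same output. The `time` and `value` fields and the `process` function are assumptions for illustration.

```python
import random

# Hypothetical archived events with unique event-time stamps.
ARCHIVE = [
    {"time": 3, "value": 7},
    {"time": 1, "value": 4},
    {"time": 2, "value": 9},
]


def process(events):
    """Return the running total after each event, in event-time order."""
    total = 0
    output = []
    for event in sorted(events, key=lambda e: e["time"]):
        total += event["value"]
        output.append((event["time"], total))
    return output


if __name__ == "__main__":
    first_run = process(ARCHIVE)

    # Replay the same archive in a different arrival order.
    shuffled = ARCHIVE[:]
    random.shuffle(shuffled)
    replay = process(shuffled)

    print(first_run == replay)
```

Ordering by event time before applying stateful logic is what makes the replay deterministic in this sketch.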