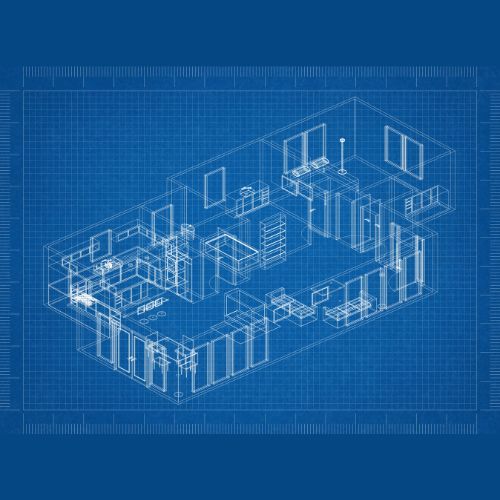

Automated Data Extraction of Set Products from Floor Plans

ThirdEye Data delivered a Proof of Concept (PoC) that used state-of-the-art object detection models to identify predefined architectural objects in image-based floor plans and convert them into structured data. With the PoC complete, the MVP phase is now under discussion.

Automating Floor Plan Data Extraction: A ThirdEye Data Case Study

BUSINESS GOALS OR CHALLENGES

Business Goals

- Automate the extraction of predefined construction set products from unstructured floor plan images.

- Convert visual design data into structured digital output compatible with tools like ArchiCAD.

- Reduce reliance on manual annotations and accelerate the drafting process.

- Enable scalable, intelligent design review and object detection workflows.

Understanding the Challenges:

- Floor plans existed only as static images, without vector or layer data.

- Manual detection of embedded objects was labor-intensive and error-prone.

- Complexity and object positioning varied widely across 4,000+ plans.

- Detection had to be highly accurate across multiple object types, and detected objects had to align with wall layouts.

- The output had to integrate with downstream tools used by internal design teams.

Prerequisites and Preconditions:

- Access to a large dataset of real-world floor plan images.

- Manual annotations using tools like Roboflow for training data preparation.

- Pre-agreed success benchmarks for object detection across simple to complex layouts.

- Approval of bounding box visualizations and JSON output structure.

- Readiness to iteratively improve detection accuracy based on real-world feedback.
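The case study does not say how the success benchmarks were measured. As a minimal sketch, assuming a benchmark based on intersection over union (IoU) between annotated and predicted boxes, a per-plan detection rate could be computed as follows. The function names, the greedy matching strategy, and the 0.5 threshold are illustrative assumptions, not details from the project.

```python
from typing import List, Tuple

# Box format assumed for this sketch: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_rate(truth: List[Box], predicted: List[Box],
                   threshold: float = 0.5) -> float:
    """Fraction of annotated boxes matched by a distinct predicted box.

    Uses greedy matching: each annotated box takes the unused prediction
    with the highest IoU, and counts as found if that IoU meets the threshold.
    """
    unused = list(predicted)
    matched = 0
    for t in truth:
        best = max(unused, key=lambda p: iou(t, p), default=None)
        if best is not None and iou(t, best) >= threshold:
            matched += 1
            unused.remove(best)
    return matched / len(truth) if truth else 1.0
```

A plan could then be marked as passing when its detection rate meets the threshold agreed for its complexity tier.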

THE SOLUTION

ThirdEye Data delivered the PoC by building and optimizing a deep learning pipeline that uses YOLOv8 for object detection and OpenCV for image post-processing.

Solution Highlights

- Developed a deep learning-based multi-object detection system using YOLOv8 to identify and quantify key interior construction products in floor plan images.

- Integrated OCR to extract specification codes related to doors, windows, and fixtures.

- Applied image preprocessing and post-processing logic using OpenCV and Pillow for enhanced boundary detection and noise reduction.

- Generated structured output in JSON format, capturing object class, coordinates, and count for each detected object.

- Enabled UI-based image uploads and red-box visual feedback for easy validation by design teams.

- Built a modular and scalable backend inference engine using FastAPI, containerized for production deployment.

- Prepared datasets using Roboflow for manual annotation, version control, and augmentation to improve model generalization.
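To make the structured JSON output more concrete, here is a minimal sketch of how a list of detections might be turned into a record holding object class, coordinates, and per-class counts. The field names (`image`, `objects`, `bbox`, `counts`) and the input format are assumptions for illustration. The actual schema approved in the project is not given in this case study.

```python
import json
from collections import Counter
from typing import Dict, List


def build_output(image_name: str, detections: List[Dict]) -> Dict:
    """Collect detections into one JSON-serializable record.

    Each detection is assumed to be a dict with a "class" label and a
    "bbox" of pixel coordinates (x_min, y_min, x_max, y_max).
    """
    counts = Counter(d["class"] for d in detections)
    return {
        "image": image_name,
        "objects": [
            {"class": d["class"], "bbox": list(d["bbox"])}
            for d in detections
        ],
        "counts": dict(counts),
    }


# Hypothetical detections for a single floor plan image.
sample = [
    {"class": "door", "bbox": (120, 40, 160, 90)},
    {"class": "window", "bbox": (300, 10, 380, 25)},
    {"class": "door", "bbox": (500, 220, 540, 270)},
]
print(json.dumps(build_output("plan_001.png", sample), indent=2))
```

Keeping the per-class counts next to the individual boxes lets a reviewer check totals without re-counting the object list.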

Technologies Used

Object Detection & Training

- YOLOv8 for real-time multi-object detection

- Detectron2 as an alternative benchmarking model

- PyTorch for deep learning pipeline and model training

Image Preprocessing & Alignment

- OpenCV for edge detection, noise reduction, and alignment

- Pillow for image manipulation and post-processing

Text & Code Extraction

- EasyOCR and Tesseract for extracting window and door codes

- spaCy for parsing text annotations and specification tags

Data Annotation & Management

- Roboflow for image annotation, versioning, and augmentation

- Label Studio for team-based labeling workflows

Backend & Integration

- FastAPI for serving inference results via REST API

- Docker for containerized deployment

- ezdxf and AutoCAD .NET API for DXF file creation and CAD integration

UI & Visualization

- Streamlit and Dash for visualizing floor plan inputs and red-box outputs

- Matplotlib for bounding box overlays and object summaries

Data Output Format

- JSON for structured results (object class, coordinates, count)

- DXF for CAD-compatible drawing exports
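Moving from pixel-based JSON coordinates to a DXF drawing usually needs a coordinate conversion, because image rows are numbered from the top while CAD drawings treat the y-axis as pointing up. The sketch below shows one way such a conversion might look. The function name and the `units_per_pixel` scale factor are assumptions, and how the project actually derived drawing scale is not described in this case study.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def bbox_to_cad_polygon(bbox: Tuple[float, float, float, float],
                        image_height_px: float,
                        units_per_pixel: float) -> List[Point]:
    """Convert a pixel bounding box to four corner points in drawing units.

    bbox is (x_min, y_min, x_max, y_max) with the origin at the image's
    top-left corner. The result uses a bottom-left origin and lists the
    corners counter-clockwise, starting from the bottom-left corner.
    """
    x_min, y_min, x_max, y_max = bbox

    def convert(x: float, y: float) -> Point:
        # Flip the y-axis, then scale pixels to drawing units.
        return (x * units_per_pixel, (image_height_px - y) * units_per_pixel)

    return [
        convert(x_min, y_max),  # bottom-left in the drawing
        convert(x_max, y_max),  # bottom-right
        convert(x_max, y_min),  # top-right
        convert(x_min, y_min),  # top-left
    ]
```

The resulting corner list could then be handed to a DXF library and written as a closed polyline on a layer named after the object class.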

VALUE CREATED

- 80–90% detection accuracy achieved for simple and moderately complex floor plans.

- Over 4,000 plans processed, drastically reducing manual annotation workload.

- 65% time savings in identifying and placing set products within floor plans.

- Improved detection consistency across more than five distinct object categories.

- Delivered structured JSON and DXF outputs, enabling smooth downstream integration with ArchiCAD and AutoCAD tools.

- Enabled real-time validation of detected results using visual red-box overlays, improving design team productivity.

- Reduced average design review cycle from 2–3 days to a few hours for batch uploads.

- Positioned the customer to scale the system across all incoming architectural plans with minimal human intervention.