Customization of LLM ChatBots with Retrieval Augmented Generation

For many domain-specific business applications, a pre-trained LLM is not enough, and customization is necessary. Out-of-the-box, pre-trained black-box models may be inadequate even with prompt tuning. There are two ways to customize an LLM with recent or private data: Fine Tuning (FT) or Retrieval Augmented Generation (RAG). For various reasons, Fine Tuning is often not viable. In this post we will review RAG, including the technique, its pros and cons, and its inner workings.

Why Retrieval Augmented Generation

Although LLMs are trained on massive amounts of data, they still have the limitations listed below. Customization, whether based on FT or RAG, addresses these issues.

- LLM training data is never up to date. Most models have a cutoff date and are trained only on data from before that date.

- LLM training data is based on publicly available data. The training data will never include any private business data.

- When data is not available, an LLM will make stuff up. It will happily create plausible and fluent but incorrect content. This phenomenon is known as hallucination.

If you are considering Fine Tuning, there are some serious issues that need to be considered first. LLM fine tuning is different from fine tuning in general. In general fine tuning, some downstream layers in the network are unfrozen and then retrained, which updates their weights. With most LLM fine tuning, the network is augmented and only the augmented parts are trained with new data, while the original LLM network remains frozen. Here are the drawbacks of the Fine Tuning approach.

- Preparing training data and retraining is time consuming and expensive

- Deep technical expertise is needed

- The cost is recurring as periodic fine tuning will be required to account for fresher data

- Continuous model monitoring to detect drift will be required

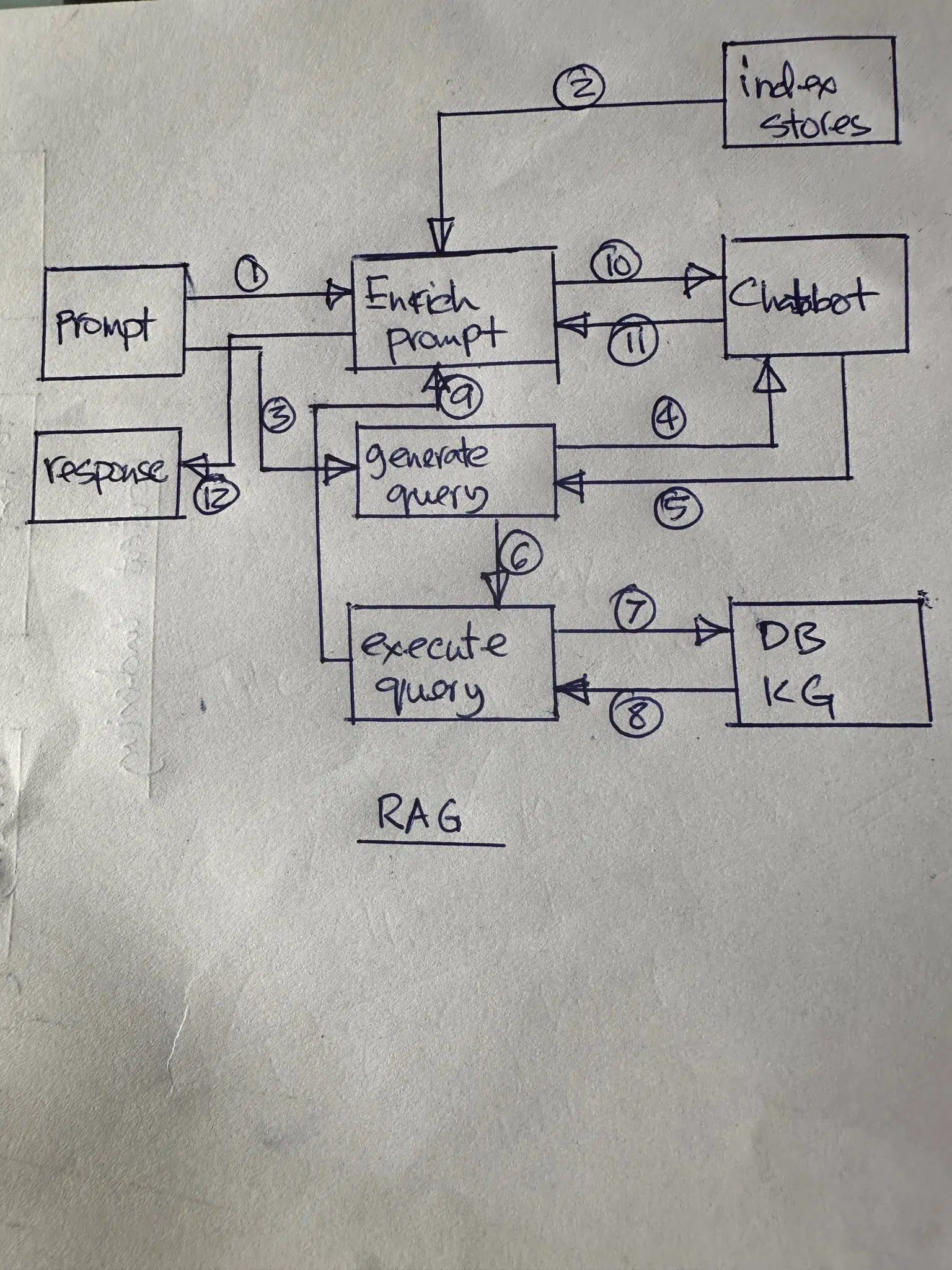

Customization with RAG is dynamic and on demand, while the model remains static. Prompt processing with RAG consists of the following steps, assuming that all relevant content has already been indexed.

- The user writes a prompt expressing an intent.

- A contextual search is performed, gathering data related to the prompt from various external indexed data sources. The data could be unstructured text, structured data such as tables, knowledge graph data, or semi-structured data such as JSON and HTML.

- The collected contextual data chunks are ranked. All or part of the ranked data is prepended to the raw user prompt to enrich it, subject to the context length limit. The answer to the prompt is expected to lie in this contextual data.

- The enriched prompt is sent to the LLM.

- The LLM response is expected to contain a satisfactory answer to the prompt.
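The enrichment step above can be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: the function name `build_enriched_prompt`, the `count_tokens` parameter, and the "Context:/Question:" template are all assumptions made for this example, and the whitespace word count is only a crude stand-in for a real tokenizer.

```python
from typing import Callable, List, Tuple


def build_enriched_prompt(
    user_prompt: str,
    ranked_chunks: List[str],
    max_context_tokens: int,
    count_tokens: Callable[[str], int] = lambda text: len(text.split()),
) -> Tuple[str, List[str]]:
    """Prepend as many top-ranked chunks as fit within the token budget.

    ranked_chunks is assumed to be ordered best first. The default
    count_tokens is a whitespace word count, a rough proxy for real tokens.
    """
    # Reserve room for the user prompt itself. The "Context:" and "Question:"
    # labels are not counted against the budget in this sketch.
    budget = max_context_tokens - count_tokens(user_prompt)
    selected: List[str] = []
    for chunk in ranked_chunks:
        cost = count_tokens(chunk)
        if cost > budget:
            break  # stop at the first chunk that does not fit, keeping rank order
        selected.append(chunk)
        budget -= cost

    if not selected:
        return user_prompt, selected  # nothing fits: fall back to the raw prompt

    context = "\n\n".join(selected)
    return f"Context:\n{context}\n\nQuestion: {user_prompt}", selected
```

The function returns both the final prompt and the chunks that were actually used, which makes it easier to log what the LLM saw when an answer turns out to be wrong.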

With RAG, periodic re-indexing of all new and updated relevant content is necessary. This process is not as expensive as periodic fine tuning of the LLM.

With RAG, the application calling the LLM provides all the relevant data by searching the indexed data. The LLM has no role in the search. It simply acts as an NLP engine that gleans the answer from the provided context and generates fluent and coherent text that hopefully contains the answer. The performance of a RAG-based LLM will depend upon

- How complete the relevant data is

- How well the relevant contextual data is indexed

- How well the retrieved data is prioritized and ranked

Content indexing and search

There are two kinds of indexing: vector indexing and plain text indexing. Vector indexing, based on LLM embeddings, is semantic in nature. Text indexing is more exact, based on literal matches. With a RAG-based LLM you might need both. If a customer is asking about a specific order, text indexing is more appropriate. For a product search query through a chatbot, vector indexing and semantic search may be more appropriate.

For a given prompt, how do you decide what kind of search to perform? It is not easy to decide among the following options.

- Full text search with text indexing

- Semantic search with embedding vector indexing

- Both full text and semantic search, called hybrid search

Assuming the worst-case scenario, where both exactly matched and semantically matched content are needed, hybrid search is often performed. For hybrid search, all content needs to have both vector indexing and full text indexing. These are the steps for hybrid search.

- Get the query embedding vector and find matching content chunks based on the similarity between each chunk's embedding vector and the query vector. The indexed data is typically stored in a vector database. The embedding vector similarity score can be used to rank the search results.

- Perform a full text search based on the full text index of the text chunks. The indexed data is typically stored in a document database. The documents are generally ranked with BM25 scores.

- Since semantic search and full text search rank results with different techniques, the two ranked lists need to be reconciled into a final ranking of all returned content. They can be reconciled algorithmically, or vector similarity can be used on all retrieved content to create one ordered list of content chunks.

- The top k ranked chunks that fit within the context length limit are used to enrich the user prompt and create the final prompt.
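To make the two retrieval steps concrete, here is a small in-memory sketch of both rankings. It is only an illustration under stated assumptions: in practice a vector database and a document database would do this work, the embedding vectors are assumed to be precomputed by some embedding model, and the function names, whitespace tokenization, and the `k1` and `b` defaults are choices made for this example. The BM25 formula used is the common Okapi variant.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_embedding(
    query_vec: Sequence[float], chunk_vecs: Dict[str, Sequence[float]]
) -> List[str]:
    """Return chunk ids ordered by similarity to the query vector, best first."""
    return sorted(
        chunk_vecs,
        key=lambda cid: cosine_similarity(query_vec, chunk_vecs[cid]),
        reverse=True,
    )


def rank_by_bm25(
    query: str, chunks: Dict[str, str], k1: float = 1.5, b: float = 0.75
) -> List[str]:
    """Return chunk ids ordered by Okapi BM25 score, best first."""
    if not chunks:
        return []
    tokenized = {cid: text.lower().split() for cid, text in chunks.items()}
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized.values()) / n_docs
    # Number of chunks that contain each term at least once.
    doc_freq = Counter(term for tokens in tokenized.values() for term in set(tokens))

    scores: Dict[str, float] = {}
    for cid, tokens in tokenized.items():
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            tf = term_freq.get(term, 0)
            if tf == 0:
                continue
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            length_norm = 1 - b + b * len(tokens) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores[cid] = score
    return sorted(scores, key=lambda cid: scores[cid], reverse=True)
```

Each function returns an ordered list of chunk ids, so the two lists can be passed to whatever reconciliation step is chosen afterwards.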

Content chunk re ranking

Since the ranking algorithm is different for vector indexing and full text indexing, we need to reconcile the results into a final list of content chunks. Here are the different approaches.

- Algorithmically reconcile two ordered lists of content chunks

- Use embedding vector similarity with the prompt for re ranking all retrieved content

- Use Cohere re ranking

Cohere provides a reranking technique through an API service. Details of how the reranking algorithm works are not publicly known.
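For the first approach, algorithmic reconciliation, one widely used option is Reciprocal Rank Fusion (RRF). The post does not prescribe a specific algorithm, so treat this as one possible sketch: each chunk receives a score of 1 / (k + rank) from every list it appears in, and the scores are summed. The function name and the default `k = 60` (a conventional value) are assumptions for this example.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several best-first rankings of chunk ids into one ordered list.

    A chunk missing from a list simply contributes nothing for that list.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by chunk id for determinism.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))
```

Because RRF uses only rank positions, it sidesteps the problem that cosine similarity scores and BM25 scores are on different scales. Chunks that appear in both lists tend to rise above chunks that appear in only one.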

Content chunking for space efficiency

Since LLM context length is limited, large documents need to be handled carefully. A large document may cover multiple concepts. It is more efficient to partition large documents so that each partition, or chunk, is more semantically cohesive. Once partitioned, each chunk should be indexed separately. The partitioning can be achieved in several ways.

- Fixed size

- Paragraph based

- Based on document structure, such as sections, sub sections

- By text segmentation

Selection of relevant content has two criteria: high relevancy and small content size. Partitioning helps reduce content size, increasing space efficiency. The retrieval process then retrieves individual content chunks rather than whole documents.
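The two simplest partitioning strategies from the list, fixed size and paragraph based, might look like the sketch below. The function names are made up for this example, size is measured in whitespace-separated words rather than model tokens, and a paragraph is assumed to be delimited by one or more blank lines.

```python
import re
from typing import List


def chunk_fixed_size(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [
        " ".join(words[i : i + max_words]) for i in range(0, len(words), max_words)
    ]


def chunk_by_paragraph(text: str) -> List[str]:
    """Split text on blank lines, dropping empty paragraphs."""
    paragraphs = re.split(r"\n\s*\n", text)
    return [p.strip() for p in paragraphs if p.strip()]
```

Fixed-size chunks are predictable in length but can cut a concept in half, while paragraph-based chunks tend to be more cohesive but vary in size, so a very long paragraph may still need a further fixed-size split.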