Navigating the Labyrinth of Deepfakes and Clearfakes: Risks, Challenges, and Solutions

The rapid advancement of artificial intelligence (AI) has produced transformative technologies that are revolutionizing industries and reshaping daily life. Amid these benefits, however, lies a growing threat: the rise of deepfakes and clearfakes. These sophisticated techniques can manipulate media content, endangering not only society at large but also the financial security of individuals and organizations.

Deepfakes vs. Clearfakes: Understanding the Nuances

The terms deepfake and clearfake are often used interchangeably, but they describe distinct techniques that work in different ways.

- Deepfakes: Deepfakes leverage deep learning algorithms to seamlessly superimpose one person’s face or voice onto another, creating hyper-realistic videos or audio recordings. These fabricated media can be used to spread misinformation, damage reputations, or even incite violence, while also posing a significant threat to financial security.

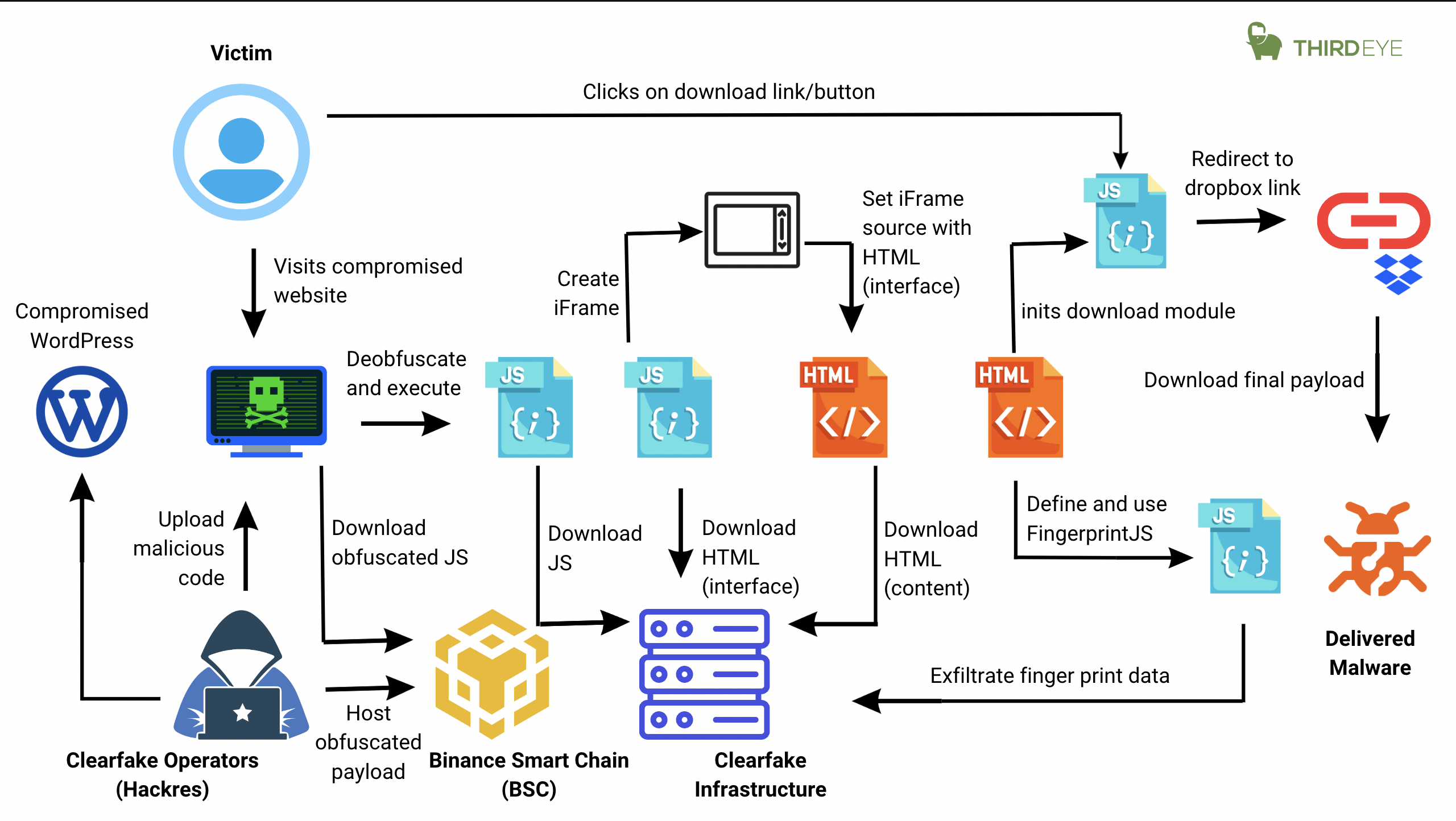

- Clearfakes: Clearfakes, on the other hand, utilize AI-powered image editing tools to subtly alter existing images or videos, making them appear more authentic. These manipulated media can be used to distort reality, spread false narratives, or undermine trust in institutions, while also enabling fraudsters to manipulate financial documents and perpetrate scams.

ClearFake Technology Workflow (as of September 2023)

Risks and Challenges: A Looming Threat

The pervasiveness of deepfakes and clearfakes poses a multitude of risks and challenges, including financial perils:

- Misinformation and Propaganda: The ability to fabricate realistic media content can be weaponized to spread misinformation, manipulate public opinion, and sow discord. This can have far-reaching consequences, influencing elections, disrupting economies, and even inciting violence, while also creating opportunities for financial fraud.

- Reputational Damage: Deepfakes and clearfakes can be used to tarnish reputations, spread damaging rumors, and destroy careers. The impact on individuals and organizations can be devastating, leading to financial losses, disrupted business operations, and weakened investor confidence.

- Erosion of Trust: The proliferation of manipulated media can erode trust in institutions, media outlets, and even individuals. This can lead to social fragmentation, hinder effective communication, and undermine societal cohesion. The same loss of confidence extends to financial institutions and markets.

- Financial Losses: Deepfakes and clearfakes can be used to perpetrate a variety of financial scams, including Business Email Compromise (BEC), investment scams, and cryptocurrency scams. These scams can lead to substantial monetary losses for individuals and organizations alike, jeopardizing financial security and stability.

Combating the Threat: Towards a Solution

Addressing the deepfake and clearfake menace requires a multifaceted approach involving technology, policy, and societal awareness, along with measures to safeguard financial security:

- AI-Powered Detection Tools: Developing AI-powered detection tools that can accurately identify deepfakes and clearfakes is crucial. These tools can be used to flag suspicious content for further scrutiny and prevent its widespread dissemination, while also helping to identify and prevent financial scams.

- Transparency and Traceability: Implementing mechanisms to ensure transparency and traceability of media content is essential. This could involve embedding digital watermarks or utilizing blockchain technology to track the origin and manipulation history of media files, while also enhancing transparency and traceability in financial transactions and communications.

- Media Literacy and Public Awareness: Educating the public about deepfakes and clearfakes is paramount. This includes teaching individuals how to identify manipulated media, understand the limitations of AI, and critically evaluate the information they consume, while also promoting financial literacy and awareness of common financial scams.

- Regulatory Framework: Establishing a robust regulatory framework to address deepfakes and clearfakes is necessary. This could involve defining legal boundaries, establishing ethical guidelines, and imposing penalties for the malicious use of these technologies, while also implementing stricter regulations and oversight to prevent financial fraud.
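The traceability idea above can be illustrated with a small hash chain. This is a minimal sketch, not a production provenance system: it assumes each capture or edit of a media file is logged as a record that stores the file's SHA-256 fingerprint and the hash of the previous record, so that any later change to the history becomes detectable. The function names (`fingerprint`, `append_record`, `verify_chain`) and the record fields are hypothetical and chosen only for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record (assumption)


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the raw media bytes."""
    return hashlib.sha256(data).hexdigest()


def _hash_body(body: dict) -> str:
    """Hash a record body using a stable JSON serialization."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(chain: list, media: bytes, action: str) -> list:
    """Return a new chain with one more record linked to the previous one."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS
    body = {
        "action": action,                  # e.g. "captured", "cropped"
        "media_hash": fingerprint(media),  # fingerprint of this version
        "prev_hash": prev_hash,            # link to the previous record
    }
    return chain + [{**body, "record_hash": _hash_body(body)}]


def verify_chain(chain: list) -> bool:
    """Check that every record is intact and correctly linked."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: record[k] for k in ("action", "media_hash", "prev_hash")}
        if record["prev_hash"] != prev_hash:
            return False
        if record["record_hash"] != _hash_body(body):
            return False
        prev_hash = record["record_hash"]
    return True


if __name__ == "__main__":
    history = append_record([], b"original image bytes", "captured")
    history = append_record(history, b"cropped image bytes", "cropped")
    print("history intact:", verify_chain(history))
```

A blockchain-based or watermark-based system would add distribution, signatures, and robustness to re-encoding; the sketch only shows why linking records by hash makes an edit history tamper-evident.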

AI Solution Providers: A Critical Role

AI solution providers play a critical role in combating deepfakes and clearfakes. They can contribute to the development of detection tools, enhance transparency and traceability, and promote media literacy initiatives. Additionally, AI can be used to create tools that help individuals create their own deepfakes for legitimate purposes, such as satire or education, while also providing mechanisms to label and identify them as such. Here are some specific ways AI can be utilized to address this challenge with the help of experts:

1. Deepfake and Clearfake Detection: AI-powered tools can be developed to analyze audio and video content for anomalies and patterns that indicate manipulation. These tools can be deployed in real time or in a post-production setting to flag suspicious content for further scrutiny.

2. Media Authentication and Verification: AI algorithms can be used to verify the authenticity of media content by analyzing its metadata, such as timestamps, location data, and device information. This can help to identify manipulated content that has been forged or edited to deceive viewers.

3. Content Traceability and Provenance: AI-powered systems can be implemented to track the origin and manipulation history of media content, providing a transparent and verifiable record of its journey from creation to dissemination. This can help to identify the source of manipulated content and hold those responsible accountable.

4. User Awareness and Education: AI-driven tools can be used to create interactive training modules and educational resources that teach individuals how to identify and critically evaluate deepfakes and clearfakes. This can help to raise awareness of the issue and empower individuals to make informed decisions about the information they consume.

5. Counterfeit Content Detection and Removal: AI can be used to detect and remove counterfeit content from online platforms, such as social media, e-commerce websites, and search engines. This can help to prevent the spread of harmful and misleading content and protect consumers from fraud.

6. Multimodal Analysis and Fusion: AI can be used to combine insights from different modalities, such as audio, video, and text, to provide a more comprehensive and accurate assessment of media content. This can help to overcome the limitations of individual modalities and improve the detection of sophisticated deepfakes and clearfakes.

7. Continual Learning and Adaptation: AI systems can be designed to continuously learn and adapt to the evolving nature of deepfake and clearfake technology. This can help to ensure that AI-powered detection and prevention methods remain effective in the face of new threats and techniques.
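As one illustration of the multimodal fusion described in item 6, the sketch below combines per-modality manipulation scores into a single score by weighted averaging (often called late fusion). It assumes that separate detectors already exist and each returns a score between 0 and 1; the detectors themselves, the weights, the threshold, and the function names `fuse_scores` and `flag_for_review` are all hypothetical and used only for illustration.

```python
def fuse_scores(scores: dict, weights: dict) -> float:
    """Weighted average of per-modality manipulation scores in [0, 1].

    Only modalities present in both `scores` and `weights` are used, so a
    clip without an audio track can still be assessed from video alone.
    """
    used = [m for m in scores if m in weights]
    total_weight = sum(weights[m] for m in used)
    if not used or total_weight <= 0:
        raise ValueError("no weighted modality scores to fuse")
    return sum(scores[m] * weights[m] for m in used) / total_weight


def flag_for_review(scores: dict, weights: dict, threshold: float = 0.5) -> bool:
    """Flag content for human review when the fused score reaches the threshold."""
    return fuse_scores(scores, weights) >= threshold


if __name__ == "__main__":
    # Hypothetical detector outputs for one clip (illustrative values only).
    clip_scores = {"video": 0.9, "audio": 0.2, "text": 0.4}
    # Hypothetical weights reflecting how much each detector is trusted.
    trust = {"video": 0.5, "audio": 0.3, "text": 0.2}
    print("fused score:", round(fuse_scores(clip_scores, trust), 2))
    print("flag for review:", flag_for_review(clip_scores, trust))
```

Weighted averaging is only one possible design; a real system might instead train a classifier on the combined features. The point of the sketch is that a strong signal in one modality can raise the overall score even when the other modalities look clean.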

By leveraging AI’s capabilities in these areas, enterprises can effectively defend against the threat of deepfakes and clearfakes, safeguarding their reputations, protecting their customers, and maintaining trust in the digital world.

Notable Incidents: A Wake-Up Call

Several incidents have highlighted the potential dangers of deepfakes and clearfakes:

- Barack Obama Deepfake: In 2018, a deepfake video of former US President Barack Obama delivering a fabricated speech went viral. The video showcased the technology’s ability to manipulate public figures and spread misinformation.

- Mark Zuckerberg Deepfake: In 2019, a deepfake video of Facebook CEO Mark Zuckerberg making controversial statements surfaced. The video demonstrated the potential impact of deepfakes on corporate reputations and market stability.

- Cybercriminal Scams: Deepfakes have been used in cybercriminal scams, impersonating company executives to trick employees into transferring funds or revealing sensitive information.

- Fake Endorsement: AI-powered tools were used to create a deepfake video in which a prominent politician appeared to endorse a candidate in an upcoming election. The video was quickly identified as a deepfake, and the politician issued a statement disavowing the endorsement.

- Rashmika Mandanna Deepfake: In November 2023, a fabricated video of the popular Indian actress Rashmika Mandanna went viral on social media. The video, created without Mandanna’s consent, superimposed her face onto footage of another woman. The incident caused widespread distress to the actress and sparked a debate about the ethical implications of deepfake technology.

Conclusion: The Road Ahead

Deepfakes and clearfakes pose a significant challenge to our increasingly interconnected world. Addressing this threat requires a collective effort from individuals, organizations, governments, and AI solution providers. By fostering transparency, promoting media literacy, and developing robust detection tools, we can mitigate the risks and harness the potential of AI responsibly.