What is Model Context Protocol or MCP?

The Model Context Protocol, or MCP, is a framework for maintaining, updating, and sharing contextual information across multiple AI agents, LLMs, tools, environments, and user sessions in a dynamic multi-agent system.

MCP emerged as a design pattern and architectural necessity to govern how “context” is defined, stored, retrieved, updated, and transmitted across tasks, tools, and models.

The Model Context Protocol has been adopted informally across AI architecture circles, particularly by open-source multi-agent system developers, creators of LLM orchestration tools such as LangChain, AutoGen, and CrewAI, and enterprise AI solution architects.

It began to crystallize as a standardized mental model and framework in late 2023 and early 2024, as enterprise use cases pushed for persistent memory in AI agents, cross-agent context sharing, dynamic tool invocation with grounded context, and stateful, reusable LLM workflows.

So it is important to understand that MCP is not a product or a formal specification. Rather, it is a protocol design pattern that is now being widely adopted, and named, to describe how LLMs and agents manage and exchange context.

In this blog, we take a deep dive into the Model Context Protocol for both AI developers and enterprises planning to build advanced AI solutions.

Conceptual Roots and Influences Behind MCP

As mentioned above, MCP started gaining adoption around 2023–2024 as a design response to the limitations of LLM-based workflows. No single entity invented MCP; it evolved across open-source and enterprise projects as a protocol design pattern, drawing on several existing ideas and technologies.

Here are some examples:

ReAct (Reason + Act): Introduced reasoning with intermediate scratchpads as context for LLMs.

LangChain: As it emerged as a popular open-source framework for building LLM-based applications, LangChain formalized memory, context injection, tool use, and prompt templating.

AutoGPT / BabyAGI: Introduced the concept of a persistent “workspace” and task memory, which multistep agents needed.

Microsoft’s Semantic Kernel / Copilot Studio: Microsoft introduced skills, planners, memory stores, and context-aware function execution for enterprise agents.

Anthropic’s Claude / OpenAI’s GPT Threads: Began supporting long-context memory and embedding-based context recall.

Multi-Agent Systems (MAS) in AI Research: Decades of distributed AI research laid the groundwork for context-sharing blackboards, message buses, and similar mechanisms.

Understanding the Core Components of MCP

Now that we have a fair idea of what MCP is, let’s break it down into its foundational building blocks.

Context Store

A context store is the central memory layer where all context objects are saved. Context objects are structured as key-value pairs or object graphs, and the store itself can be local (RAM, vector DB) or distributed (cloud, shared API).

Context Object

A context object is a unit of context, such as user history, system settings, task state, tool metadata, environment variables, or long-term memory (knowledge graph, embeddings, RAG store).
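The context store and context object described above can be sketched together in a few lines of Python. This is a minimal, hypothetical sketch: the field names (`key`, `kind`, `scope`) and the `InMemoryContextStore` class are illustrative assumptions, and a RAM-backed dict stands in for whatever local or distributed store a real system would use.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass
class ContextObject:
    """One unit of context, e.g. user history, task state, or tool metadata."""
    key: str                  # unique id, e.g. "task_state:T-3921" (hypothetical naming)
    kind: str                 # e.g. "user_profile", "task_state", "tool_metadata"
    value: dict[str, Any]     # the payload itself, stored as key-value pairs
    scope: str = "global"     # "global" or a specific agent/session id
    updated_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class InMemoryContextStore:
    """Local, RAM-backed store. A vector DB or shared API could sit behind the same methods."""

    def __init__(self) -> None:
        self._items: dict[str, ContextObject] = {}

    def put(self, obj: ContextObject) -> None:
        obj.updated_at = datetime.now(timezone.utc)  # refresh the timestamp on every write
        self._items[obj.key] = obj

    def get(self, key: str) -> ContextObject | None:
        return self._items.get(key)

    def by_kind(self, kind: str) -> list[ContextObject]:
        return [obj for obj in self._items.values() if obj.kind == kind]


store = InMemoryContextStore()
store.put(ContextObject("task_state:T-3921", "task_state", {"status": "in_progress"}))
print(store.get("task_state:T-3921").value["status"])  # in_progress
```

Keeping the store interface this small (`put`, `get`, `by_kind`) is a design choice that makes it easy to swap the backing storage later.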

Context Manager

The context manager is an orchestration layer that reads and writes context, merges conflicting contexts, and resolves context scope (local vs. global).
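As an illustration of merging and scope resolution, the sketch below assumes two simple policies that the text does not prescribe: conflicting writes are resolved by keeping the newer timestamp, and an agent’s local context shadows global context on reads. `ContextManager` and `Entry` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Entry:
    value: object
    updated_at: float  # e.g. a Unix timestamp


class ContextManager:
    """Hypothetical manager: per-agent (local) context shadows shared (global) context."""

    def __init__(self) -> None:
        self.global_ctx: dict[str, Entry] = {}
        self.local_ctx: dict[str, dict[str, Entry]] = {}  # agent_id -> entries

    def write(self, key: str, entry: Entry, agent_id: str | None = None) -> None:
        target = self.global_ctx if agent_id is None else self.local_ctx.setdefault(agent_id, {})
        current = target.get(key)
        # Conflict resolution (assumed policy): keep whichever write is newer.
        if current is None or entry.updated_at >= current.updated_at:
            target[key] = entry

    def read(self, key: str, agent_id: str | None = None) -> object | None:
        # Scope resolution: the agent's local context first, then global context.
        if agent_id is not None and key in self.local_ctx.get(agent_id, {}):
            return self.local_ctx[agent_id][key].value
        entry = self.global_ctx.get(key)
        return entry.value if entry is not None else None


cm = ContextManager()
cm.write("language", Entry("en", updated_at=1.0))
cm.write("language", Entry("fr", updated_at=0.5))  # older write, ignored
cm.write("language", Entry("de", updated_at=2.0), agent_id="translator")
print(cm.read("language"))                         # en
print(cm.read("language", agent_id="translator"))  # de
```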

Context Schema

A context schema is a specification of how context is structured, typed, and validated. It is often based on JSON Schema, Protocol Buffers, or OpenAPI specs, and it supports nesting and modular types for scalability.
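To make schema validation concrete, here is a deliberately tiny stand-in for a JSON Schema validator, written without dependencies so it runs anywhere. The schema format (field name mapped to a Python type, or to a nested dict for a sub-schema) and the `TASK_STATE_SCHEMA` fields are assumptions for illustration; in practice you would more likely use a real JSON Schema library such as `jsonschema`.

```python
from __future__ import annotations

from typing import Any

# Hypothetical schema for a "task_state" context object:
# field name -> expected Python type, or a nested dict for a modular sub-schema.
TASK_STATE_SCHEMA: dict[str, Any] = {
    "task_id": str,
    "status": str,
    "history": list,
    "owner": {"agent_id": str, "team": str},
}


def validate(obj: Any, schema: dict[str, Any], path: str = "") -> list[str]:
    """Return a list of validation errors; an empty list means the object is valid."""
    if not isinstance(obj, dict):
        return [f"{path or '<root>'}: expected object"]
    errors: list[str] = []
    for name, expected in schema.items():
        where = f"{path}.{name}" if path else name
        if name not in obj:
            errors.append(f"{where}: missing")
        elif isinstance(expected, dict):
            errors.extend(validate(obj[name], expected, where))  # recurse into nested type
        elif not isinstance(obj[name], expected):
            errors.append(f"{where}: expected {expected.__name__}")
    return errors


good = {"task_id": "T-3921", "status": "in_progress", "history": [],
        "owner": {"agent_id": "a1", "team": "sales"}}
bad = {"task_id": 3921, "status": "in_progress", "history": [],
       "owner": {"agent_id": "a1"}}
print(validate(good, TASK_STATE_SCHEMA))  # []
print(validate(bad, TASK_STATE_SCHEMA))   # ['task_id: expected str', 'owner.team: missing']
```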

Context Binding

Context binding defines how a model or agent attaches to a context: temporarily (per task/session), persistently (long-running agents), or dynamically (based on triggers or rules).
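The three binding modes can be modeled as a small registry. In this hypothetical sketch (`BindingMode`, `Binding`, and `Binder` are illustrative names), temporary bindings are dropped when a session ends, persistent bindings always apply, and dynamic bindings apply only while a trigger function returns `True` for the current event.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class BindingMode(Enum):
    TEMPORARY = "temporary"    # lives for one task/session
    PERSISTENT = "persistent"  # survives across sessions (long-running agents)
    DYNAMIC = "dynamic"        # attached only while a trigger/rule holds


@dataclass
class Binding:
    agent_id: str
    context_key: str
    mode: BindingMode
    trigger: Callable[[dict], bool] | None = None  # used only by DYNAMIC bindings


@dataclass
class Binder:
    bindings: list[Binding] = field(default_factory=list)

    def bind(self, binding: Binding) -> None:
        self.bindings.append(binding)

    def active_keys(self, agent_id: str, event: dict) -> list[str]:
        """Context keys the agent should see for the current event."""
        keys = []
        for b in self.bindings:
            if b.agent_id != agent_id:
                continue
            if b.mode is BindingMode.DYNAMIC and not (b.trigger and b.trigger(event)):
                continue  # trigger not satisfied, so this context stays detached
            keys.append(b.context_key)
        return keys

    def end_session(self, agent_id: str) -> None:
        """Drop the agent's temporary bindings when its task/session ends."""
        self.bindings = [b for b in self.bindings
                         if not (b.agent_id == agent_id and b.mode is BindingMode.TEMPORARY)]


binder = Binder()
binder.bind(Binding("support-bot", "session:42", BindingMode.TEMPORARY))
binder.bind(Binding("support-bot", "user_profile:john", BindingMode.PERSISTENT))
binder.bind(Binding("support-bot", "escalation_policy", BindingMode.DYNAMIC,
                    trigger=lambda e: e.get("sentiment") == "negative"))
during = binder.active_keys("support-bot", {"sentiment": "negative"})
binder.end_session("support-bot")
after = binder.active_keys("support-bot", {"sentiment": "positive"})
print(during)  # ['session:42', 'user_profile:john', 'escalation_policy']
print(after)   # ['user_profile:john']
```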

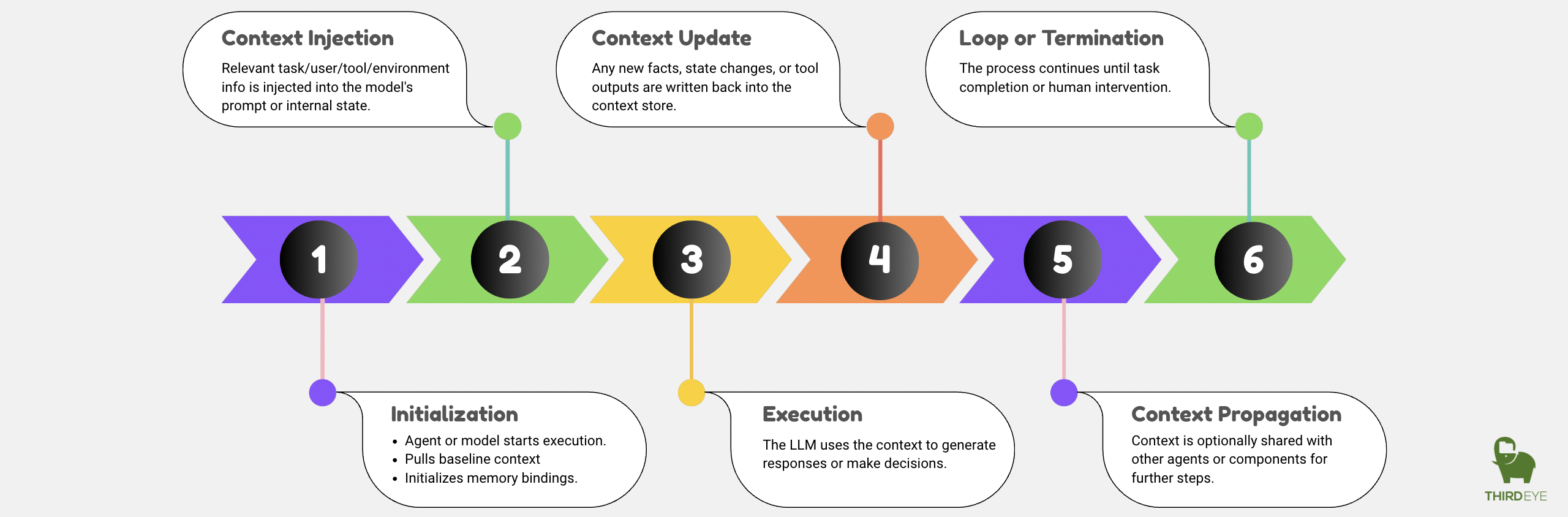

MCP Lifecycle – How MCP Works in a Real System

Insights on Model Context Protocol for AI Developers

Which Communication Protocols Does MCP Use?

MCP is not tied to a single transport layer. Context exchange can happen over HTTP REST, gRPC APIs, WebSockets, Pub/Sub channels, message queues (Kafka, RabbitMQ), and shared memory (Redis, SQLite, LangChain memory).
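One way to stay transport-agnostic is to have agents depend on a small interface rather than on a specific transport. The sketch below is an assumption-laden illustration: `ContextTransport` is a hypothetical `typing.Protocol`, `InProcessBus` is an in-memory implementation, and adapters for HTTP, gRPC, WebSockets, Kafka, or Redis would expose the same two methods (they are not shown).

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable, Protocol

Handler = Callable[[str, dict], None]


class ContextTransport(Protocol):
    """Anything that can publish context updates and deliver them to subscribers."""
    def publish(self, topic: str, update: dict) -> None: ...
    def subscribe(self, topic: str, handler: Handler) -> None: ...


class InProcessBus:
    """In-memory pub/sub. A network-backed adapter would implement the same methods."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Handler]] = defaultdict(list)

    def publish(self, topic: str, update: dict) -> None:
        for handler in self._subs[topic]:
            handler(topic, update)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subs[topic].append(handler)


def share_task_status(bus: ContextTransport, task_id: str, status: str) -> None:
    # Agent code depends only on the interface, not on the transport behind it.
    bus.publish("task_state", {"task_id": task_id, "status": status})


received: list[dict] = []
bus = InProcessBus()
bus.subscribe("task_state", lambda topic, update: received.append(update))
share_task_status(bus, "T-3921", "in_progress")
print(received)  # [{'task_id': 'T-3921', 'status': 'in_progress'}]
```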

Memory Architectures Supporting MCP

| Type | Description | Tools or Tech Examples |
|---|---|---|
| Short-term memory | Session context, prompt window | LangChain memory, OpenAI tool use |
| Long-term memory | Persistent storage, searchable | Pinecone, Weaviate, Chroma |
| Knowledge graphs | Semantic relationships, entity memory | Neo4j, RDF/SPARQL |
| Episodic memory | Timeline of events | Agentic memory stores, Milvus |
| Vector memory | Embeddings for similarity-based context | FAISS, Qdrant |
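Two rows of this table can be illustrated with standard-library Python: short-term memory as a bounded window of recent turns, and vector memory as similarity-based recall. This is a toy sketch under clear assumptions: the embeddings are hand-written 3-dimensional vectors rather than the output of an embedding model, and a linear scan stands in for what FAISS or Qdrant do at scale. `ShortTermMemory` and `VectorMemory` are hypothetical names.

```python
from __future__ import annotations

import math
from collections import deque


class ShortTermMemory:
    """Session context: keep only the last N turns, like a prompt window."""

    def __init__(self, max_turns: int = 4) -> None:
        self.turns: deque[str] = deque(maxlen=max_turns)

    def add(self, turn: str) -> None:
        self.turns.append(turn)  # the oldest turn falls off automatically


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Long-term memory searchable by embedding similarity (linear scan for clarity)."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, embedding: list[float], text: str) -> None:
        self.items.append((embedding, text))

    def recall(self, query: list[float], k: int = 1) -> list[str]:
        ranked = sorted(self.items, key=lambda item: cosine(query, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


stm = ShortTermMemory(max_turns=2)
for turn in ["hi", "show Q3 sales", "only EMEA please"]:
    stm.add(turn)
print(list(stm.turns))  # ['show Q3 sales', 'only EMEA please']

mem = VectorMemory()
mem.add([0.9, 0.1, 0.0], "Q3 sales report is in doc123")
mem.add([0.0, 0.2, 0.9], "User prefers UTC+5:30")
print(mem.recall([1.0, 0.0, 0.1]))  # ['Q3 sales report is in doc123']
```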

Primary Frameworks and Libraries Using MCP Concepts

| Framework | MCP Implementation Areas |
|---|---|
| LangChain | Memory, context chains, agent state |
| AutoGPT / BabyAGI | Workspace memory, task loops |
| Microsoft’s Copilot Studio | Orchestrated context via Graph API |
| Meta’s LLaMA Agents | Modular memory + tool routing |
| CrewAI / OpenDevin | Multi-agent memory sharing |
| OpenAI’s Function Calling + Tools | Implicit context via tool output memory |

Is MCP Connected with Prompt Engineering?

Yes, MCP is directly connected with prompt engineering, especially in dynamic multi-agent systems. Prompts are part of context, and the Model Context Protocol defines how prompts are dynamically constructed from memory (including scratchpads, tool instructions, and past actions) and how they are validated and grounded via schemas.
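A prompt assembled from context might look like the following minimal sketch. The context field names (`system`, `tool_instructions`, `scratchpad`, `past_actions`) and the `build_prompt` helper are hypothetical; the "grounding" step here is only a required-field check, standing in for fuller schema validation.

```python
from __future__ import annotations


def build_prompt(context: dict, user_message: str, max_actions: int = 3) -> str:
    """Assemble a prompt from context fields (field names are illustrative)."""
    required = ("system", "tool_instructions")
    missing = [name for name in required if name not in context]
    if missing:  # minimal validation before anything reaches the model
        raise ValueError(f"context missing required fields: {missing}")

    sections = [
        f"System: {context['system']}",
        f"Tools:\n{context['tool_instructions']}",
    ]
    if context.get("scratchpad"):
        sections.append(f"Scratchpad:\n{context['scratchpad']}")
    actions = context.get("past_actions", [])[-max_actions:]  # most recent actions only
    if actions:
        sections.append("Past actions:\n" + "\n".join(f"- {a}" for a in actions))
    sections.append(f"User: {user_message}")
    return "\n\n".join(sections)


ctx = {
    "system": "You are a sales analytics assistant.",
    "tool_instructions": "report_tool(period) returns a sales report.",
    "past_actions": ["Started", "ToolA invoked", "ToolB failed", "Retried ToolB"],
}
prompt = build_prompt(ctx, "Show me the latest sales report")
print(prompt)
```

Trimming `past_actions` to the last few entries is one simple way to keep the prompt from growing without bound.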

How Does MCP Design Help with Context Isolation and Privacy?

In a production MCP design, context isolation and privacy are addressed through scoped access, redaction and sanitization, and audit logs.

Scoped access means only authorized agents can read or write certain context. Redaction and sanitization ensure sensitive information is removed from prompts. Audit logs track and timestamp every change to context.
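The three mechanisms can be combined in one read path, as in this minimal sketch. Everything here is an illustrative assumption: the `ACL` mapping, the two regular expressions (which catch only e-mail addresses and 13 to 16 digit card-like numbers, far from complete PII detection), and the in-memory `audit_log` list standing in for an immutable log store.

```python
from __future__ import annotations

import re
from datetime import datetime, timezone

# Hypothetical access-control list: context scope -> agents allowed to read it.
ACL: dict[str, set[str]] = {
    "hr:salaries": {"hr-agent"},
    "support:tickets": {"support-bot", "hr-agent"},
}

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional spaces/dashes

audit_log: list[dict] = []


def redact(text: str) -> str:
    """Mask e-mail addresses and card-like numbers before text reaches a prompt."""
    return CARD.sub("[CARD]", EMAIL.sub("[EMAIL]", text))


def read_context(agent_id: str, scope: str, store: dict[str, str]) -> str:
    allowed = agent_id in ACL.get(scope, set())
    # Every access attempt is logged with a UTC timestamp, whether or not it is allowed.
    audit_log.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "agent": agent_id,
        "scope": scope,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{agent_id} may not read {scope}")
    return redact(store[scope])


store = {"support:tickets": "Customer john@example.com paid with 4111 1111 1111 1111."}
print(read_context("support-bot", "support:tickets", store))
# Customer [EMAIL] paid with [CARD].
```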

How Does MCP Help in Multi-Agent Systems?

In a team of AI agents, each agent maintains local context, while a shared blackboard or memory bus stores global state. Agents primarily use MCP to subscribe to context changes, push updates, and resolve conflicts (e.g., “last write wins”, versioning).
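A shared blackboard with subscriptions and version-based conflict resolution can be sketched as below. `Blackboard` and `VersionConflict` are hypothetical names, and the conflict policy shown is optimistic versioning: a write must state the version it was based on, and stale writes are rejected. A "last write wins" policy would simply skip that check.

```python
from __future__ import annotations

from typing import Callable


class VersionConflict(Exception):
    pass


class Blackboard:
    """Shared global state: agents subscribe to keys and write with expected versions."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[int, object]] = {}  # key -> (version, value)
        self._subs: dict[str, list[Callable[[str, object], None]]] = {}

    def read(self, key: str) -> tuple[int, object | None]:
        return self._data.get(key, (0, None))

    def subscribe(self, key: str, callback: Callable[[str, object], None]) -> None:
        self._subs.setdefault(key, []).append(callback)

    def write(self, key: str, value: object, expected_version: int) -> int:
        """Reject the write if another agent updated the key since `expected_version`."""
        current_version, _ = self.read(key)
        if expected_version != current_version:
            raise VersionConflict(f"{key}: expected v{expected_version}, found v{current_version}")
        self._data[key] = (current_version + 1, value)
        for callback in self._subs.get(key, []):
            callback(key, value)  # push the update to subscribed agents
        return current_version + 1


board = Blackboard()
seen: list[object] = []
board.subscribe("plan", lambda key, value: seen.append(value))
version, _ = board.read("plan")                            # version 0: nothing written yet
version = board.write("plan", "draft by planner", version)  # now version 1
conflict_message = ""
try:
    board.write("plan", "stale edit by critic", 0)  # based on an outdated version
except VersionConflict as err:
    conflict_message = str(err)
print(conflict_message)  # plan: expected v0, found v1
print(seen)              # ['draft by planner']
```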

Advanced MCP Techniques You Need to Know

We have covered MCP concepts, the foundational building blocks, frameworks, and memory architectures. Let us now look at the advanced MCP techniques you need to know as an AI developer.

Temporal Context Decay: As the name suggests, temporal context decay deprioritizes older memory unless it is reinforced.

Context Pruning: Context pruning trims irrelevant or low-priority items so the context fits within token limits. It is widely used in projects with tight budgets or in the PoC phase.

Context Summarization: This technique uses LLMs to auto-summarize long memory chunks. AI developers commonly use it when building AI agents or LLM-based solutions for research work.

Dynamic Context Graphs: Dynamic context graphs store context as connected nodes for reasoning. This technique is heavily used in systems where multiple AI agents, tools, users, or processes need to coordinate contextually in real time, and where context is not static but evolves across the workflow.

Tool-Context Embedding: Put simply, tool-context embedding is the process of encoding structured metadata about tools (their capabilities, parameters, usage history, success rate, etc.) into vector representations or prompt-friendly formats, so that tasks can be matched to tools via embedding similarity.
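Temporal decay is often modeled as exponential decay, and that is the assumption in this sketch: a memory’s score halves every `half_life` seconds since it was last reinforced, and reinforcing it (for example, when it is reused) resets its age. The `Memory` class, the `importance` field, and the one-day half-life are hypothetical choices for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

DAY = 86_400.0  # seconds


@dataclass
class Memory:
    text: str
    importance: float       # base relevance in [0, 1] (assumed to be assigned elsewhere)
    last_reinforced: float  # Unix time of creation or most recent reuse

    def reinforce(self, now: float) -> None:
        # Reusing or re-confirming a memory resets its age, so it stops decaying.
        self.last_reinforced = now


def decayed_score(memory: Memory, now: float, half_life: float = DAY) -> float:
    """Exponential decay: the score halves every `half_life` seconds since last reinforcement."""
    age = max(0.0, now - memory.last_reinforced)
    return memory.importance * 0.5 ** (age / half_life)


old = Memory("User asked about the Q1 budget", importance=0.8, last_reinforced=0.0)
recent = Memory("User asked about Q3 sales", importance=0.6, last_reinforced=2 * DAY)
now = 3 * DAY
print(round(decayed_score(old, now), 3), round(decayed_score(recent, now), 3))  # 0.1 0.3
old.reinforce(now)
print(round(decayed_score(old, now), 3))  # 0.8
```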
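A greedy version of context pruning is sketched below: items are considered in priority order and kept if they still fit the token budget, and the survivors are returned in their original order. The word-count token estimate and the `(priority, text)` item format are simplifying assumptions; a real system would use the target model’s tokenizer.

```python
from __future__ import annotations


def rough_tokens(text: str) -> int:
    # Crude estimate (about one token per word); real systems use the model's tokenizer.
    return len(text.split())


def prune(items: list[tuple[float, str]], budget: int) -> list[str]:
    """Keep the highest-priority items that fit in `budget` tokens, in original order."""
    ranked = sorted(enumerate(items), key=lambda pair: pair[1][0], reverse=True)
    kept: set[int] = set()
    used = 0
    for idx, (_priority, text) in ranked:
        cost = rough_tokens(text)
        if used + cost <= budget:  # skip items that no longer fit, but keep scanning
            kept.add(idx)
            used += cost
    return [text for idx, (_, text) in enumerate(items) if idx in kept]


items = [
    (0.2, "Greeting: hi there"),                   # 3 tokens
    (0.9, "Task: compile Q3 sales report"),        # 5 tokens
    (0.5, "User timezone is UTC+5:30"),            # 4 tokens
    (0.1, "Small talk about the weather today"),   # 6 tokens
]
print(prune(items, budget=10))
# ['Task: compile Q3 sales report', 'User timezone is UTC+5:30']
```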
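A common summarization pattern keeps the most recent turns verbatim and replaces older ones with a single summary entry. In this sketch, `compact_history` is a hypothetical helper, and `first_sentences` is only a placeholder for the LLM call that would actually write the summary; it keeps the first sentence of each older turn.

```python
from __future__ import annotations

from typing import Callable

# Any function that turns a list of older turns into one short summary string.
Summarizer = Callable[[list[str]], str]


def compact_history(turns: list[str], keep_recent: int, summarize: Summarizer) -> list[str]:
    """Replace everything except the last `keep_recent` turns with a single summary entry."""
    if len(turns) <= keep_recent:
        return list(turns)  # nothing old enough to summarize
    split = len(turns) - keep_recent
    older, recent = turns[:split], turns[split:]
    return [f"Summary of earlier conversation: {summarize(older)}"] + recent


def first_sentences(chunks: list[str]) -> str:
    # Placeholder for an LLM call: keeps the first sentence of each chunk.
    return "; ".join(chunk.split(".")[0].strip() for chunk in chunks)


turns = [
    "User wants Q3 sales. Mentioned EMEA.",
    "Agent fetched doc123. It was outdated.",
    "User asked for doc456.",
    "Agent is generating the report.",
]
compacted = compact_history(turns, keep_recent=2, summarize=first_sentences)
print(compacted[0])  # Summary of earlier conversation: User wants Q3 sales; Agent fetched doc123
```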
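A dynamic context graph can be as simple as an adjacency list that grows as the workflow runs, plus a traversal that gathers the context around a node. In this hypothetical sketch (`ContextGraph`, `link`, and `neighborhood` are illustrative names), edges are stored in both directions and a breadth-first search returns everything within a given number of hops.

```python
from __future__ import annotations

from collections import defaultdict, deque


class ContextGraph:
    """Context as nodes (agents, tools, tasks, documents) linked by labelled edges."""

    def __init__(self) -> None:
        self.edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def link(self, src: str, relation: str, dst: str) -> None:
        # Store both directions so traversal can start from any node.
        self.edges[src].append((relation, dst))
        self.edges[dst].append((f"inverse:{relation}", src))

    def neighborhood(self, start: str, max_hops: int = 2) -> set[str]:
        """Breadth-first search: every node within `max_hops` edges of `start`."""
        seen, frontier = {start}, deque([(start, 0)])
        while frontier:
            node, depth = frontier.popleft()
            if depth == max_hops:
                continue
            for _, nxt in self.edges[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, depth + 1))
        return seen - {start}


g = ContextGraph()
g.link("task:T-3921", "assigned_to", "agent:analyst")
g.link("task:T-3921", "uses", "doc:doc123")
g.link("agent:analyst", "invoked", "tool:ToolB")
g.link("tool:ToolB", "status", "error:timeout")  # context evolves as the workflow runs
print(sorted(g.neighborhood("task:T-3921", max_hops=2)))
# ['agent:analyst', 'doc:doc123', 'tool:ToolB']
```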
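Matching tasks to tools by embedding similarity can be sketched without any model dependency. The assumptions are explicit here: `TOOLS` is a hypothetical registry whose metadata is reduced to a one-line description, and `embed` is a bag-of-words term counter standing in for a real embedding model. The matching logic (embed the task, pick the tool with the highest cosine similarity) is the part that carries over.

```python
from __future__ import annotations

import math
from collections import Counter

# Hypothetical tool metadata; a real system would also encode parameters and success rates.
TOOLS = {
    "sales_report": "generate sales report revenue by region and quarter",
    "calendar": "schedule meeting check availability calendar",
    "ticket_lookup": "find customer support ticket history status",
}


def embed(text: str) -> Counter:
    # Stand-in for an embedding model: a bag-of-words term-count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


TOOL_VECTORS = {name: embed(description) for name, description in TOOLS.items()}


def best_tool(task: str) -> str:
    query = embed(task)
    return max(TOOL_VECTORS, key=lambda name: cosine(query, TOOL_VECTORS[name]))


print(best_tool("Show me the latest sales report"))          # sales_report
print(best_tool("What is the status of my support ticket"))  # ticket_lookup
```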

Example of an MCP Context Snippet in JSON

```json
{
  "user_profile": {
    "name": "John Doe",
    "preferences": {
      "language": "en",
      "timezone": "UTC+5:30"
    }
  },
  "task_state": {
    "task_id": "T-3921",
    "status": "in_progress",
    "history": ["Started", "ToolA invoked", "ToolB failed"]
  },
  "memory_snapshot": {
    "last_utterance": "Show me the latest sales report",
    "relevant_documents": ["doc123", "doc456"]
  }
}
```
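To show how an agent might work with a context snapshot like this one, here is a minimal Python sketch. It embeds the snippet as a string so it runs on its own. The helpers `record_step` (append an event to the task history and optionally change its status) and `view_for_agent` (return only the sections an agent is scoped to see) are hypothetical names for illustration.

```python
from __future__ import annotations

import json

# The context snippet, embedded as a string so this example is self-contained.
SNAPSHOT = json.loads("""
{
  "user_profile": {"name": "John Doe",
                   "preferences": {"language": "en", "timezone": "UTC+5:30"}},
  "task_state": {"task_id": "T-3921", "status": "in_progress",
                 "history": ["Started", "ToolA invoked", "ToolB failed"]},
  "memory_snapshot": {"last_utterance": "Show me the latest sales report",
                      "relevant_documents": ["doc123", "doc456"]}
}
""")


def record_step(ctx: dict, event: str, status: str | None = None) -> dict:
    """Return an updated copy with `event` appended to the task history."""
    updated = json.loads(json.dumps(ctx))  # deep copy so the stored snapshot is untouched
    updated["task_state"]["history"].append(event)
    if status is not None:
        updated["task_state"]["status"] = status
    return updated


def view_for_agent(ctx: dict, sections: tuple[str, ...]) -> dict:
    """Project only the top-level sections an agent is allowed to see."""
    return {name: ctx[name] for name in sections if name in ctx}


after = record_step(SNAPSHOT, "ToolB retried", status="completed")
print(after["task_state"]["history"][-1], after["task_state"]["status"])  # ToolB retried completed
print(SNAPSHOT["task_state"]["status"])  # in_progress (original unchanged)
print(list(view_for_agent(after, ("task_state", "memory_snapshot"))))  # ['task_state', 'memory_snapshot']
```

Returning a new copy instead of mutating in place makes it straightforward to log each version of the context for auditing.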

Getting Insights on Model Context Protocol as an Enterprise

When exploring MCP as an enterprise, the first questions that come to mind are: why was MCP born, and what problem does it address?

So, let us start there. Before MCP-style architectures emerged, AI systems such as LLMs and multi-agent setups suffered from serious operational challenges. Here are the primary issues:

Stateless Interactions: Every interaction with an LLM was like talking to it for the first time. No memory, no understanding of prior steps.

Redundant Computation: Tasks had to be re-instructed repeatedly, wasting tokens and compute and making LLM-based solutions expensive for enterprises.

Fragmented Context: In multi-agent or tool-augmented systems, there was no central way to store or share relevant information. This often led to cross-purpose execution and interference within the system.

Scaling Failures: As more models/tools/agents entered the picture, managing “who knows what” became nearly impossible.

Lack of Accountability: There was no traceable flow of context across agents, tools, and users. Debugging became guesswork for developers, leading to error-prone updates.

These gaps were acceptable for simple chatbots but insufficient for production-grade enterprise AI systems involving long-running workflows, regulated environments (finance, healthcare), multimodal interfaces, teams of collaborating AI agents, and dynamic task routing and orchestration.

MCP emerged as the architectural response to these gaps by enabling structured, persistent, shareable, and traceable context. The word “protocol” implies standardization.

MCP is not just a coding pattern; it became a contract between components that must agree on context formats (schemas), define context lifecycles (when to fetch, update, or forget), govern access (security, roles), enable traceability (logging, audits), and support flexibility (plugging into various AI/ML models and tools).

Put simply, think of it as HTTP for AI agents: just as HTTP standardized communication across the web, MCP standardizes context sharing across intelligent components.

Capabilities of MCP You Need to Know as an Enterprise

It Enables Coherence at Scale

When deploying multiple AI tools, copilots, or agents, MCP ensures they share memory and don’t work at cross-purposes. This enables cross-department copilots (HR + IT + Finance), consistent behavior across channels (email + chat + dashboard), and seamless handoff of tasks between agents.

It Allows Modular, Composable Systems

By decoupling “what a model knows” from “what it does,” MCP supports plug-and-play components that pull memory as needed, respond to changing user needs, and integrate third-party tools, databases, and APIs.

It Is Essential for Privacy and Governance

With data privacy, audit trails, and access controls becoming critical, MCP enables scoped context (e.g., user-specific memory), context redaction/sanitization (e.g., for prompts), and logging of context usage for compliance.

This is vital for regulated industries like finance, healthcare, and defense.

It Enhances LLM Cost-Efficiency

Context injection and memory optimization prevent unnecessary prompt length growth. MCP supports pruning, summarization, and embedding-based recall. This reduces token usage, improving LLM cost-performance.

It Powers Agentic Workflows

For enterprises building advanced LLM-based solutions such as customer service AI agents, internal automation copilots, or autonomous research tools, MCP is a must-have framework. MCP allows agents to remember prior steps, track the tools they have invoked, share intermediate results, and align toward a shared business goal.

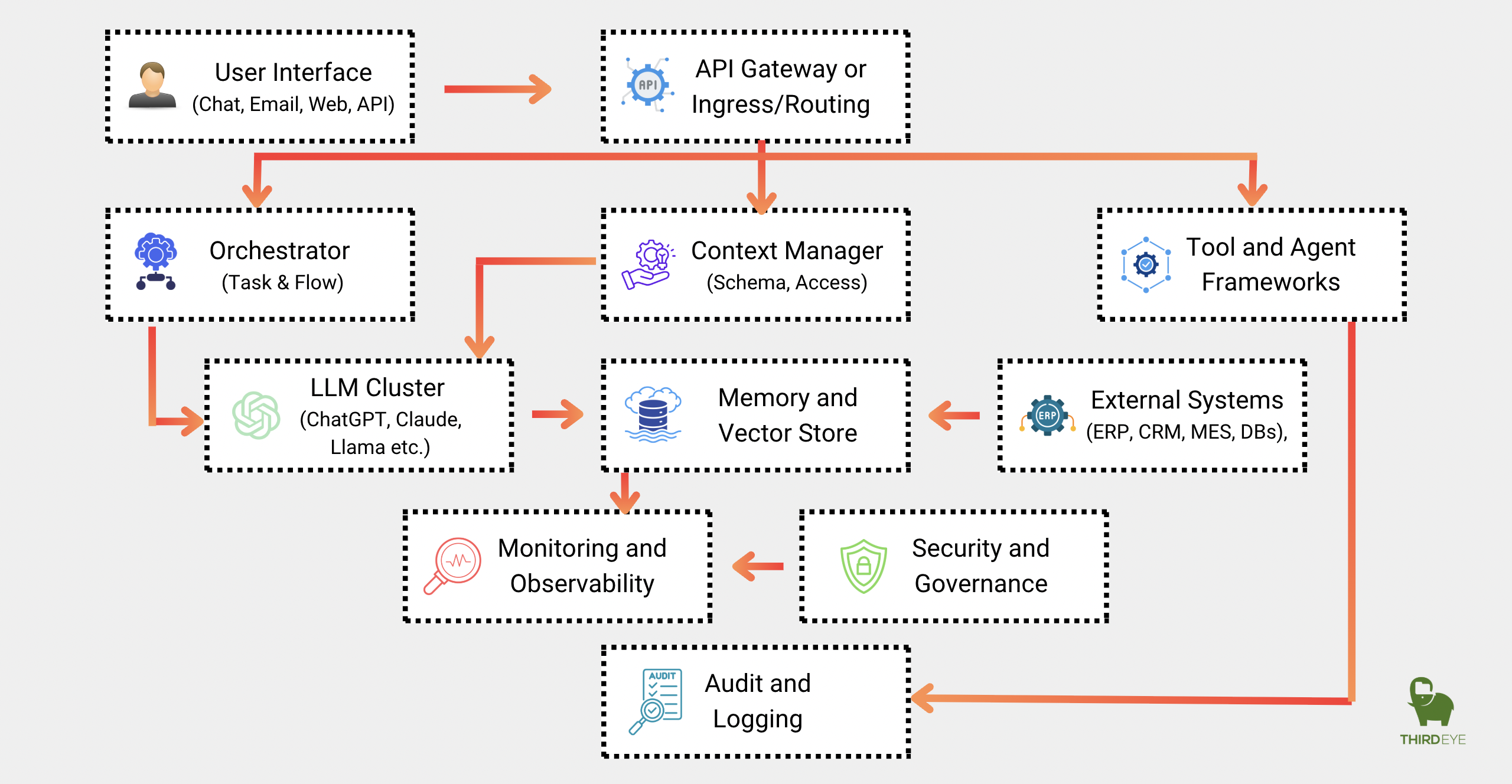

Enterprise Architecture Diagram for MCP

Key Components:

- API Gateway: Central ingress, authentication, rate‑limiting.

- Orchestrator: Coordinates multi‑step workflows and agent hand‑offs.

- Context Manager: Loads/saves context objects (schemas, bindings, access control).

- LLM Cluster: One or more large language models for reasoning and generation.

- Memory & Vector Store: Embeddings, long/short‑term memory, retrieval.

- Tool & Agent Framework: Code modules or agents that perform actions (DB queries, business‑logic).

- External Systems: ERP/MES/CRM, internal databases, third‑party APIs.

- Security & Governance: Encryption, RBAC, data redaction, compliance.

- Monitoring & Observability: Metrics, tracing, context‑change logs.

- Audit & Logging: Immutable record of context updates and agent actions.
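The request path through several of these components can be sketched in a few lines of Python. This is a toy, single-process illustration under stated assumptions: `Platform` is a hypothetical class, a set of API keys stands in for gateway authentication, a plain function stands in for the LLM cluster, a dict of per-session histories stands in for the context manager and memory store, and a list stands in for the audit log. Security policies, vector memory, external systems, and monitoring are omitted.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

LLM = Callable[[str], str]  # stand-in for a call into the LLM cluster


@dataclass
class Platform:
    api_keys: set[str]
    llm: LLM
    context: dict[str, list[str]] = field(default_factory=dict)  # Context Manager's store
    audit: list[tuple[str, str]] = field(default_factory=list)   # Audit & Logging

    def gateway(self, api_key: str, session_id: str, message: str) -> str:
        # API Gateway: authenticate before anything else runs.
        if api_key not in self.api_keys:
            raise PermissionError("unauthenticated")
        return self.orchestrate(session_id, message)

    def orchestrate(self, session_id: str, message: str) -> str:
        history = self.context.setdefault(session_id, [])      # Context Manager: load
        prompt = "\n".join(history + [f"User: {message}"])
        reply = self.llm(prompt)                                # LLM Cluster
        history += [f"User: {message}", f"Assistant: {reply}"]  # Context Manager: save
        self.audit.append((session_id, "context_updated"))      # audit record
        return reply


def echo_llm(prompt: str) -> str:
    # Fake model that reports how much context it received.
    return f"[context lines: {len(prompt.splitlines())}]"


platform = Platform(api_keys={"k1"}, llm=echo_llm)
first = platform.gateway("k1", "s1", "Hi")
second = platform.gateway("k1", "s1", "Show Q3 sales")
print(first, second)  # [context lines: 1] [context lines: 3]
```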

Do All Enterprises Need to Implement MCP?

To be honest, enterprises do not necessarily need to implement MCP from scratch. MCP-like functionality is already embedded in popular frameworks and tools such as LangChain, Microsoft’s Copilot Studio, OpenAI’s Assistants API, Anthropic’s Claude API, AutoGen, CrewAI, and OpenDevin.

But if you are building custom agentic architectures, you should define and enforce MCP as a design pattern to ensure long-term reliability and system growth.

An Ideal MCP Integration Strategy Template

We recommend using the following 4‑phase roadmap for your use case.

| Phase | Activities |
|---|---|
| 1. Discovery | Identify business goals, use cases & KPIs; map existing data sources & systems; define user roles |
| 2. Design | Define Context Schema (JSON/Proto); select Context Store (vector DB, KV store, graph); design prompt templates & memory policies |
| 3. Build & Test | Implement Context Manager (CRUD API, bindings); wire up Orchestrator → LLMs → Agents; develop access controls & redaction; simulate workflows end-to-end & refine prompts |
| 4. Deploy & Operate | Deploy services (K8s / serverless); enable monitoring & audit logging; conduct security/compliance review; train users & continuously optimize memory summaries |

Use Cases Where MCP Adds Value for Enterprises

As mentioned in the MCP integration strategy, identifying a use case is the first step for enterprises looking to leverage MCP. Let us look at some of the primary use cases where MCP is adding value for enterprises in the real world.

These insights will definitely help enterprises evaluate the operational and financial advantages of adopting Model Context Protocol or MCP within their workflows.

Customer Support Automation

Enterprises are transforming customer experience through AI-powered support systems, but many struggle with chatbots that cannot retain or leverage historical interactions. With MCP, customer service agents gain the ability to remember multi-turn dialogues, refer to past tickets, and dynamically escalate issues based on sentiment or priority.

For example, a telecom company implementing MCP-enabled agents reduced average handle time (AHT) by 35% and increased first contact resolution (FCR) by 28%. Salesforce reports that 74% of customers expect agents to know their contact, product, and service history. MCP makes this achievable through persistent memory and shared context.

Predictive Maintenance in Manufacturing

In industries reliant on high-value machinery, unplanned equipment failures can result in significant financial losses. Traditional ML models only provide isolated predictions, without coordination across systems. MCP enables manufacturing agents to access equipment history, sensor anomalies, service logs, and maintenance forecasts in context.

This connected intelligence drives timely interventions and minimizes false alarms. Enterprises like Siemens and GE report that predictive maintenance reduces maintenance costs by 25–30% and downtime by up to 40%. MCP’s persistent context enables these outcomes through continuous learning and context propagation between tools and agents.

AI-Powered HR Automation

HR teams often operate in silos across job postings, candidate tracking systems, and assessments. MCP adds a unified context layer that connects candidate data, recruiter preferences, JD templates, and historical decisions. This enables AI copilots to auto-generate job descriptions, match profiles based on learned hiring patterns, and surface high-quality applicants faster.

According to SHRM, the average time-to-fill for a role is 36 days. With MCP, some enterprises report reducing this to under 20 days while improving candidate relevance and recruiter satisfaction. Real-world implementations show up to a 50% increase in screening throughput using context-retaining agents.

Supply Chain Optimization

Volatile demand patterns, delayed shipments, and disconnected data systems pose constant threats to supply chain efficiency. MCP integrates context from demand signals, weather forecasts, supplier performance, and inventory levels, allowing AI agents to make adaptive, forward-looking decisions.

Enterprises like Unilever and P&G leverage similar architectures to improve forecast accuracy and reduce working capital lock-in. Gartner indicates that context-aware supply chains can reduce inventory costs by up to 20% and stockouts by 30%. MCP empowers supply chain bots to dynamically re-plan and collaborate across functions with full contextual awareness.

Employee Copilots for Internal Operations

Large enterprises face a growing burden in managing employee queries across IT, HR, finance, compliance, and onboarding workflows. Without shared memory, AI systems fall short of resolving complex, multi-departmental tasks. MCP enables employee copilots to retain structured memory across systems and sessions, creating a seamless query resolution experience.

For instance, an MCP-based IT helpdesk agent can remember past device requests and link them to asset management tools, resolving 60% of queries autonomously. Research from Deloitte shows that AI copilots with memory reduce ticket backlogs by 40–60% and boost employee satisfaction.

Legal Research Assistants

Legal workflows involve intensive reading, summarization, and reasoning across complex relationships among statutes, cases, and arguments. MCP empowers AI legal assistants to construct evolving context graphs from legal documents, track precedents, and cite sources accurately over time.

Law firms using MCP have reported a 60% reduction in research time, and tools like Casetext and Harvey AI are already applying similar models. With long-term context and traceable legal references, these agents ensure compliance, reduce human oversight, and improve confidence in AI-generated briefs and summaries.

Healthcare Diagnosis Assistants

AI in healthcare demands continuity of care and interpretability, both of which require strong contextual memory. MCP supports this by integrating electronic health records (EHR), lab results, medication history, and clinical notes into a unified patient memory graph. This allows AI assistants to provide contextually rich suggestions during diagnosis or treatment planning.

McKinsey estimates that AI-driven care delivery could save $300B annually in the US alone. MCP plays a foundational role by ensuring that suggestions made by LLMs are grounded in up-to-date, patient-specific information, leading to safer outcomes and better decision support.

Intelligent Document Processing or IDP

Enterprises process vast volumes of heterogeneous documents, from invoices and contracts to handwritten notes and scanned forms. MCP-based document agents learn document types, user-specific queries, and layout patterns over time. Unlike OCR-only systems, these agents retain context across documents and interactions.

For example, in the banking and finance industry, AI IDP systems enhanced with MCP have demonstrated over 90% data extraction accuracy across multi-form submissions and reduced document processing time by 60–70%. This translates directly into cost savings, regulatory compliance, and operational scalability.

R&D and Strategy Agents

In dynamic R&D environments, continuity of ideas and institutional knowledge is crucial. MCP facilitates dynamic memory storage of agent hypotheses, literature reviews, experimental results, and strategic insights. AI agents working in collaborative research or consulting environments can build upon each other’s findings while maintaining traceability.

For instance, pharma companies applying MCP in molecule discovery pipelines have accelerated lead identification by 40%. In management consulting, agents equipped with context graphs of past projects reduce time-to-insight by over 50%, enabling rapid scenario planning and hypothesis validation.

Conclusion

If you’re designing LLM-powered systems, AI agents, or digital workers, MCP should be one of your foundational design layers. Without MCP, your copilots and AI agents won’t be stateful, cooperative, or context-aware.

To leverage the Model Context Protocol, you can hire an in-house team with deep knowledge of the frameworks and libraries that support MCP concepts. Alternatively, you can rely on established AI-first consultancies like ThirdEye Data, Scale AI, and Bain & Company, which have begun incorporating MCP-style designs into their AI agent deployments for enterprises.