The State of Conversational AI and Consumer Trust

Conversational AI voice technology offers new opportunities to automate everyday responsibilities, yet people report a lack of trust in these tools’ ability to complete tasks successfully. About 7 in 10 people are unlikely to trust conversational AI to handle simple calls or emails. Experts say this hesitancy will shift as the technology becomes more mainstream, despite the security risks it introduces.

In May 2018, Google unveiled Duplex, a feature of its voice assistant that can call businesses and book reservations. Duplex’s lifelike voice and its ability to adapt to human conversation stirred both amazement and fear among consumers.

Artificial intelligence (AI) voice technology can now not only respond to our voice commands but also hold conversations on its own. Yet how much will people trust AI to accomplish phone-based tasks, and how do they feel about speaking to increasingly complex AI systems?

Clutch and Ciklum, a global digital solutions company, surveyed 501 people to understand their comfort level with tools such as Google Duplex and AI voice technology overall.

According to experts, consumers distrust AI with complex tasks that offer little ability to monitor the outcome, but they will increasingly embrace AI voice technology as it becomes more commonplace. AI-powered voice technology also introduces new security risks, however.

Businesses can use this report to understand how to maximize consumer comfort with conversational AI technology.

Our Findings

- About three-quarters of people (73%) say they are unlikely to trust an AI-powered voice assistant to make simple calls for them correctly. This may be due to the lack of control consumers feel they have over an AI phone call.

- Most people (81%) believe that AI-powered voice assistants should declare they are robots before proceeding with a call, though experts expect this demand to ease as AI voice technology becomes more integrated into everyday life.

- More than 6 in 10 people (61%) would feel uncomfortable if they believed they had spoken to a human and later learned they had spoken to AI. This discomfort may reflect people’s concern about potential security risks.

- The majority of people (70%) say they are unlikely to trust AI to correctly answer simple emails for them, such as scheduling meetings.

Most People Hesitant to Trust AI Voice Technology for Phone Calls

AI-powered voice technology presents new capabilities that consumers may be slow to adopt due to a lack of knowledge and control.

For example, nearly three-quarters of people (73%) say they are unlikely to trust an AI-powered voice assistant to make simple calls for them correctly, including 39% who are very unlikely.

Just 4% of people say they are very likely to trust AI voice assistants to make calls for them.

Google’s Duplex feature exclusively books restaurant reservations, but this limited capability can still be more responsibility than some consumers are willing to hand over to AI. This may change in the future, though.

Rajat Mukherjee, co-founder and chief technology officer of Aiqudo, a voice platform company, explained that people initially trust AI voice technology more for low-stakes situations than high-stakes situations.

“The higher the stakes, and the more significant [the consequences], the more trust you need before you can get comfortable with it,” Mukherjee said.

Asking Google Duplex to book a dinner reservation leaves more room for errors the user cannot catch than, say, asking your phone to set a reminder to make the reservation yourself.

After asking your phone to set a reminder, you can immediately check whether the reminder is correct, with little consequence if it is not. The same is not true of phone calls: the user has few opportunities to monitor the situation until the call is complete.

“On the computer, you have a user interface,” said Daniel Shapiro, chief technology officer and co-founder of Lemay.ai, an enterprise AI consulting firm. “You can see what it’s doing. Over the phone, you really have no idea what it’s capable of doing. You have to just believe.”

With Google Duplex, the user must trust not only that the AI understood the dinner reservation request correctly but also that the person who answered the phone fulfilled it accurately.

The technology offers checkpoints, such as confirming the user’s request before placing a call and asking permission before deviating from it. For example, if the 7 p.m. reservation slot is full, Duplex will ask whether a 7:30 p.m. reservation is also acceptable.

The user may still worry about showing up to a crowded restaurant with an incorrect reservation, though. That worry can, for now, surpass the desire to save a few minutes by letting Duplex handle the reservation.

Overall, consumers’ hesitancy toward AI voice technology such as Google Duplex may result from the fact that a phone call is a higher-stakes responsibility than people are accustomed to assigning to AI.

Most People Uncomfortable With Accidentally Mistaking AI for a Human

People also feel apprehensive about increasingly lifelike AI voices blurring the line between technology and humans. This may not remain true as the technology becomes more integrated into everyday life, though.

More than 6 in 10 people (61%) would feel uncomfortable if they believed they spoke to a human and later learned they had spoken to AI.

Yet experts pointed to examples of people becoming comfortable with technology that took over tasks humans traditionally performed. They noted that AI voice technology may follow a similar pattern.

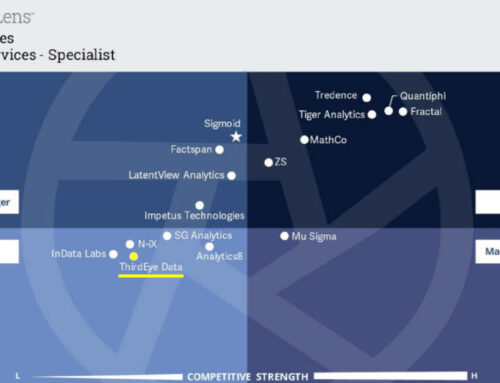

Dj Das, founder and CEO of ThirdEye Data, an AI solutions company, pointed to two technologies that people initially distrusted but that are now commonplace: ATMs and self-checkout lines at stores.

“When ATMs first came to this world, people were scared,” Das said. “At that time, I was in India. We never went to the ATM because we didn’t trust it.”

Similarly, self-checkout lines first arrived in stores in the early 2000s to mixed reactions but have since gained popularity.

“We’re all vulnerable to different forms of this anxiety when it comes to adjusting to new ideas or technological advances,” said Ivan Kotiuchyi, research engineer at Ciklum.

People often overcome that anxiety once they become accustomed to using the technology.

Mukherjee emphasized the importance of building consumers’ trust in the abilities of conversational AI tools.

“Over time, as people get more comfortable … and [AI technology] becomes more reliable, then the pre-announcement of ‘I’m your digital assistant’ versus ‘I’m a real human being’ will become less of a need, in my opinion,” Mukherjee said.

As consumers increasingly trust AI voice technology to accomplish tasks for them, the need to distinguish between AI and humans may fade, because the tools will become integral to everyday life.

Consumers may also want a clear distinction for AI voice technology due to the security threats it could pose, though.

Most People Want AI Voice Technology to Distinguish Itself From Humans

AI calls could offer new opportunities for hackers and robocallers to manipulate people on the receiving end of a call and steal their information by pretending to be human.

Google Duplex is a helpful tool, but others may use the same technology for malicious purposes.

“I don’t worry about Google,” Shapiro said. “I worry about the bad actor who is going to use the same technology.”


These opportunities for manipulation may be why 81% of people want an AI-powered voice assistant such as Google Duplex to declare itself as a robot before proceeding with a call.

Currently, there are few regulations requiring AI to declare itself, but they do exist. Kotiuchyi noted that in July 2019, California will begin requiring AI-powered messages to announce they are from a bot.

Proponents of the bill say it is meant to curb “deceptive or commercial bots.” The bill suggests that lawmakers may be beginning to recognize the negative consequences of conversational AI.

Google Duplex’s Technology Can Enable Manipulative Voice Mimicry

How does the AI technology that powers Google Duplex pose a security risk? Consider how AI voice mimicry could bolster robocallers’ scams.

Already, more than half of people (52%) receive at least one robocall per day, and the number of robocalls to U.S. phones reached a record-setting 26.3 billion in 2018.

Robocall scammers may see minuscule success rates, so they seek new ways to trick people into picking up the phone and handing over the information they want. Tactics include calling from numbers with local area codes and using increasingly lifelike bots to convince people that a real person is talking to them. These bots can call thousands of numbers at once but often still sound recognizably robotic.

That’s why scammers may turn to “vishing” instead. Vishing combines “voice” and “phishing,” the practice of sending fraudulent emails to steal information.

Although the term vishing is sometimes used to refer to robocalls, it also encompasses human scammers who conduct fraudulent calls themselves. These scammers seek to steal people’s information by impersonating a familiar person or organization over the phone.

Through manipulation, these scammers often succeed where robocalls cannot by convincing people they represent a reputable source, such as their bank. From there, they can ask for information such as a debit card PIN or a Social Security number, because the people who answer the phone trust them. These calls are less efficient than robocalls because they must be placed manually, but they can be more effective.

AI Voice Technology Can Automate Increasingly Sophisticated Scams

Combining voice mimicry with a tool such as Google Duplex poses a security risk because it could allow scammers to automate vishing.

Technology already exists that can replicate a person’s voice. Scammers may be able to conduct vishing calls automatically by adding conversational AI capabilities, similar to how robocallers contact thousands of people at once.

These types of calls could even trick people into thinking they are actually talking to a colleague, friend, or family member.

“Imagine a call that comes from your boss,” WatchGuard Technologies Chief Technology Officer Corey Nachreiner said in GeekWire. “It sounds like your boss, talks with his or her cadence but is actually an AI assistant using a voice imitation algorithm. Such a call offers limitless malicious potential to bad actors.”

Consumers’ distrust of AI voice technology may be justified given the myriad ways scammers can exploit its capabilities.

Das, however, believes that regulation will catch up as this technology expands.

“Whenever a new technology comes, people don’t know how to handle it … That’s very common,” Das said. “As things mature, we’ll understand what’s what and we’ll come up with data privacy and security laws.”

Conversational AI offers many opportunities to streamline and automate tasks, but consumers should be wary of increasingly sophisticated scams that use this technology.

Distrust of AI Communication Extends to Written Conversations

People are hesitant about not only AI-powered voice conversations but also AI-powered written exchanges.

Seven in 10 people (70%) say they are unlikely to trust an AI-powered email assistant to answer simple messages for them correctly.

Conversational AI tools for written exchanges are already in widespread use, though.

X.ai is an AI virtual assistant that can schedule meetings via email, Slack, and websites.

In email, users copy “Amy” or “Andrew” on a thread to find a time for a meeting. The AI then converses with the respondent and creates a calendar event once a time is agreed.

Anyone can use X.ai, with plans starting as low as $8 per month, which shows that this type of technology is readily available to many workers.

Another AI-powered conversation assistant is Gmail’s Smart Reply feature.

Approximately 1 in 10 emails sent via the Gmail app are now Smart Replies. Shapiro noted that many Gmail users may not even consider Smart Reply to be in the same vein as other conversational AI.

“What you see is auto-complete is not even considered AI anymore, even though it really is,” Shapiro said. “It works so well, and everybody likes it.”

Smart Reply lets users choose how to continue the conversation, potentially boosting its usage compared to more complex tools. Users feel more control over the situation because they select the reply.

People already have opportunities to take advantage of conversational AI for written exchanges, even though their trust in it is about as low as their trust in voice technology.

Conversational AI Can Support Business Needs

Conversational AI offers more opportunities for businesses to increase their efficiency and interact with customers.

Das predicted that the technology may reshape how call centers are used, for example.

“Call centers will probably only handle [higher-level] support,” Das said. “Everything else will be done by the AI systems. You’d call in, and an AI system will understand who you are, what you’re talking about, and what they can do. It can understand the tone of your voice. It can understand if you’re angry or if you’re okay.”

Currently, automation in phone support can harm the customer experience: nearly 90% of people would rather speak to a live human than navigate a phone menu. Conversational AI may offer businesses the automation of a phone menu with more of the personal touch of a live representative.

Kotiuchyi also noted the technology’s potential but conceded that it still needs to evolve before widespread adoption.

“It would be nice if a human-like voice assistant can also understand the feelings and mood of the user just by the user’s voice or style of writing,” Kotiuchyi said. “All those challenges are really tricky and still need to be addressed, though.”

AI voice technology such as Duplex could let businesses automate phone support while sacrificing less of the customer experience, but it still needs improvement.

Trust in Conversational AI Will Increase as More People Adopt AI Technology

Roughly 7 in 10 people are hesitant to trust AI to handle simple phone calls or answer emails for them. This hesitancy may be tied directly to how much control the user must give up to the AI tool.

Sixty-one percent of people would feel uncomfortable if they thought they had spoken to a human and later learned they had spoken to AI. As AI voices become more lifelike, they make it harder for consumers to tell whether they are talking to a person, which fuels this discomfort.

More than 8 in 10 people also believe AI voice technology should declare itself as non-human before proceeding with calls. Conversational AI could fuel more sophisticated and effective scam calls.

Conversational AI offers new opportunities for businesses to automate processes while still maintaining a more personal touch, though.

Consumers report significant distrust of these tools today, but experts believe trust will increase as the technology is more widely adopted.